The Importance of Human-in-the-Loop Systems for AI Safety

Human-in-the-loop systems are one of the most important safeguards in AI safety engineering. As someone deeply committed to AI ethics and digital safety, I’ve seen how even sophisticated AI systems can fail catastrophically without proper human oversight. Whether you’re a concerned citizen, a business leader implementing AI solutions, or simply curious about technology’s future, understanding why humans must remain central to AI decision-making is crucial for building systems we can trust. This guide explains what human-in-the-loop (HITL) systems are and why they’re essential for AI safety, walks you through practical steps for implementing them, and shows you how to maintain the balance between automation and human judgment.

What Are Human-in-the-Loop Systems?

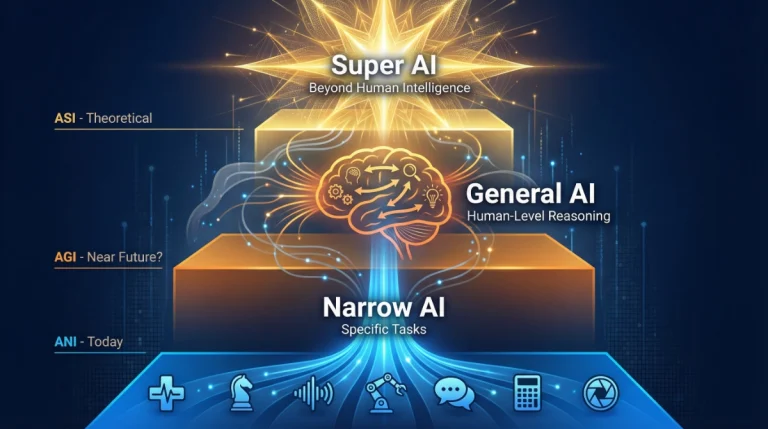

Before diving into implementation, let’s clarify what we mean by human-in-the-loop systems. These are AI frameworks where humans actively participate in the decision-making process rather than allowing algorithms to operate completely autonomously. Think of it as a safety net where human intelligence reviews, validates, or corrects AI outputs before they impact real-world outcomes.

In AI safety engineering, this approach serves as our primary defense against algorithmic errors, bias amplification, and unforeseen consequences. The human element acts as a critical checkpoint, ensuring that AI recommendations align with ethical standards, contextual understanding, and common sense—qualities that even the most advanced algorithms struggle to replicate consistently.

Why does this matter? No matter how sophisticated AI systems are, they lack a genuine understanding of human values, cultural context, and moral reasoning. They operate on patterns and probabilities, not wisdom or empathy.

Why Human Oversight Matters in AI Systems

Real-world failures make the need for human oversight concrete. In 2018, an autonomous test vehicle struck and killed a pedestrian who was crossing the street at night. The system’s sensors detected her but classified her incorrectly, and the human safety operator on board was not watching the road closely enough to intervene in time.

This tragic example illustrates a fundamental truth: AI safety measures must include human judgment, especially in high-stakes scenarios. Algorithms can process data faster than humans, but they cannot grasp the full weight of life-or-death decisions or navigate the gray areas that define so many critical situations.

In healthcare, financial services, criminal justice, and autonomous systems, the importance of human validation extends beyond error correction. It encompasses ethical accountability, transparency, and the preservation of human dignity in an increasingly automated world. When AI makes recommendations that affect people’s lives—approving loans, diagnosing diseases, or determining prison sentences—human experts must verify that these decisions are fair, accurate, and contextually appropriate.

Step-by-Step Guide to Implementing Human-in-the-Loop Systems

Step 1: Identify Critical Decision Points in Your AI System

The first step in building safe AI architectures is mapping where human intervention matters most. Not every AI decision requires human review—that would defeat the purpose of automation. Instead, focus on high-impact decisions where errors carry serious consequences.

Why this step matters: Understanding your system’s risk profile allows you to allocate human oversight resources efficiently. You’re building a safety framework tailored to actual vulnerabilities rather than adding blanket oversight that wastes time and resources.

How to do it: Create a decision matrix listing all outputs your AI system generates. Rate each by potential impact (low, medium, high, or critical) and confidence level. Any decision marked “high” or “critical” impact should trigger human review, especially when the AI’s confidence score falls below your established threshold (often somewhere in the 85-95% range, though the right value depends on the application and should be checked against your own data).

For example, an AI system screening job applications might automatically advance candidates with clear qualifications but flag borderline cases for human recruiters. This preserves efficiency while preventing discriminatory or contextually inappropriate rejections.
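The decision matrix described above can be sketched as a small routing function. This is a minimal illustration, not a definitive implementation: the `Impact` levels mirror the four ratings in the text, while the names `Decision` and `needs_human_review`, the default threshold of 0.90, and the choice to let low-impact decisions run unreviewed are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import IntEnum


class Impact(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Decision:
    name: str
    impact: Impact
    confidence: float  # model-reported confidence in [0.0, 1.0]


def needs_human_review(decision: Decision, threshold: float = 0.90) -> bool:
    """Return True when the decision should be routed to a human reviewer."""
    # High and critical impact decisions are always reviewed.
    if decision.impact >= Impact.HIGH:
        return True
    # Assumption: medium impact decisions are reviewed only when the
    # model is unsure; low impact decisions stay automated.
    if decision.impact == Impact.MEDIUM:
        return decision.confidence < threshold
    return False


borderline = Decision("resume_screen", Impact.MEDIUM, confidence=0.70)
print(needs_human_review(borderline))  # True
```

Keeping the rule in one pure function makes it easy to test and to change the threshold per application without touching the rest of the pipeline.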

Step 2: Design Clear Human Review Interfaces

Once you’ve identified where humans need to intervene, create intuitive interfaces that make oversight practical and effective. Your reviewers need to understand AI recommendations quickly, see the reasoning behind them, and make informed decisions without technical expertise.

Why this step matters: Poorly designed review systems lead to automation bias, where humans rubber-stamp AI decisions without genuine evaluation. This defeats the purpose of human-AI collaboration and creates a false sense of safety.

How to do it: Build dashboards that present AI recommendations alongside explanatory information: which data points influenced the decision, what alternative options the system considered, and what confidence level the AI assigned. Include visual indicators for unusual patterns or outliers that deserve extra scrutiny.

For instance, in a medical diagnosis support system, don’t just show doctors the AI’s suggested diagnosis. Display which symptoms, test results, and patient history factors contributed most heavily, and highlight any conflicting indicators the algorithm struggled to reconcile. This transparent AI decision-making empowers doctors to exercise genuine judgment rather than passive approval.
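One way to picture what a review interface needs from the backend is a single record that carries the recommendation together with its explanation. The sketch below is hypothetical: `ReviewItem`, `render_summary`, and the field names are invented for illustration, and it assumes some explainability method already produces per-factor contribution scores.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewItem:
    recommendation: str
    confidence: float
    # Factor name -> signed contribution score. How these scores are
    # computed is outside this sketch (assumed to come from elsewhere).
    contributions: dict[str, float]
    alternatives: list[tuple[str, float]] = field(default_factory=list)
    conflicts: list[str] = field(default_factory=list)


def render_summary(item: ReviewItem, top_n: int = 3) -> str:
    """Build a plain-text summary a reviewer can scan quickly."""
    top = sorted(item.contributions.items(),
                 key=lambda kv: abs(kv[1]), reverse=True)[:top_n]
    lines = [f"Recommendation: {item.recommendation} "
             f"(confidence {item.confidence:.0%})", "Top factors:"]
    lines += [f"  {name}: {weight:+.2f}" for name, weight in top]
    if item.alternatives:
        lines.append("Alternatives considered:")
        lines += [f"  {label} ({score:.0%})" for label, score in item.alternatives]
    if item.conflicts:
        lines.append("Conflicting indicators:")
        lines += [f"  ! {note}" for note in item.conflicts]
    return "\n".join(lines)
```

Conflicting indicators are listed last and marked so they stand out, which is one simple way to nudge reviewers away from passive approval.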

Step 3: Establish Clear Intervention Protocols

Create specific, documented procedures for when and how humans should override AI decisions. Ambiguity here creates inconsistency, undermines accountability, and frustrates the people tasked with oversight.

Why this step matters: Without clear protocols, different reviewers will intervene inconsistently, making it impossible to evaluate whether your HITL system actually improves safety. Moreover, unclear guidelines leave reviewers uncertain about their authority and responsibility, potentially leading them to defer to the AI even when they sense something is wrong.

How to do it: Document specific triggers for human intervention (confidence thresholds, edge cases, high-stakes decisions) and establish a clear escalation path. Define what information reviewers need, what authority they have to override the system, and what documentation they must provide when they do so.

Create scenario-based training that walks reviewers through common and edge-case situations. For example, in a content moderation system, specify exactly when borderline cases should go to human moderators, what context they should consider (cultural norms, satire, news value), and how to document their reasoning for quality assurance and continuous improvement.
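A documented protocol can also be expressed as data plus two small functions, so that triggers, escalation, and override documentation are applied the same way for every reviewer. Everything named here (`Protocol`, `Case`, `route`, `record_override`, the tag names, and the minimum reason length) is a hypothetical sketch rather than a prescribed design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Protocol:
    confidence_floor: float     # below this, a human must review
    escalate_tags: frozenset    # case tags that go straight to a senior reviewer
    min_reason_chars: int = 10  # overrides need at least a brief written reason


@dataclass(frozen=True)
class Case:
    case_id: str
    ai_decision: str
    confidence: float
    tags: frozenset


def route(case: Case, protocol: Protocol) -> str:
    """Return 'senior_review', 'review', or 'auto' for a case."""
    if case.tags & protocol.escalate_tags:
        return "senior_review"
    if case.confidence < protocol.confidence_floor:
        return "review"
    return "auto"


def record_override(case: Case, reviewer: str, new_decision: str,
                    reason: str, protocol: Protocol) -> dict:
    """Document a reviewer's final decision; refuse undocumented overrides."""
    reason = reason.strip()
    if len(reason) < protocol.min_reason_chars:
        raise ValueError("an override needs a documented reason")
    return {
        "case_id": case.case_id,
        "reviewer": reviewer,
        "ai_decision": case.ai_decision,
        "final_decision": new_decision,
        "reason": reason,
        "overridden": new_decision != case.ai_decision,
    }
```

The reason requirement is deliberately short in this sketch: it should capture the reviewer's thinking without making overrides so costly that people stop using them.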

Step 4: Implement Feedback Loops for Continuous Learning

The most effective human-in-the-loop AI systems don’t just catch errors—they learn from human corrections to become more accurate over time. Every human intervention represents valuable training data that can improve your AI’s future performance.

Why this step matters: Without feedback loops, you’re fixing the same problems repeatedly instead of eliminating their root causes. Your human reviewers become bottlenecks rather than teachers, and the system never evolves beyond its initial limitations.

How to do it: Build mechanisms that capture human decisions, the reasoning behind them, and the contextual factors that mattered. Feed this information back into your training pipeline so the AI can recognize similar situations more accurately in the future.

For instance, when a human loan officer overrides an AI rejection because they recognize that a gap in employment history reflects maternity leave rather than instability, that correction should teach the system to factor in such life events appropriately. This is an example of responsible AI development—systems that get smarter with help from people.
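As a rough sketch of such a feedback mechanism, human overrides can be turned into labeled rows for a later retraining run. The `Correction` record, the function names, and the feature keys are assumptions for illustration. The example keeps only cases where the human disagreed with the AI, which is one possible policy; a real pipeline might also keep confirmations.

```python
import json
from dataclasses import dataclass


@dataclass
class Correction:
    features: dict    # inputs the model saw, plus context the reviewer added
    ai_label: str
    human_label: str
    reason: str


def to_training_rows(corrections):
    """Keep genuine disagreements and use the human label as the target."""
    rows = []
    for c in corrections:
        if c.human_label != c.ai_label:
            rows.append({"features": c.features,
                         "label": c.human_label,
                         "note": c.reason})
    return rows


def to_jsonl(rows) -> str:
    """Serialize rows as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(row, sort_keys=True) for row in rows)


corrections = [
    Correction({"employment_gap_months": 9, "gap_reason": "parental_leave"},
               ai_label="reject", human_label="approve",
               reason="Gap reflects parental leave"),
    Correction({"employment_gap_months": 0},
               ai_label="approve", human_label="approve", reason=""),
]
print(to_jsonl(to_training_rows(corrections)))
```

Storing the reviewer's reason next to the label preserves the context that made the correction necessary, which helps when auditing what the retrained model learned.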

Step 5: Train Your Human Reviewers Properly

Even the best-designed HITL system fails if the humans involved don’t understand their role, the AI’s capabilities and limitations, or the principles guiding their decisions. Effective training transforms reviewers from passive checkers into active safety engineers.

Why this step matters: Untrained reviewers either over-trust the AI (automation bias) or distrust it entirely (automation aversion), both of which undermine safety. They need to understand when AI excels, where it struggles, and how to recognize the subtle signs of algorithmic failure.

How to do it: Develop comprehensive training that covers the AI system’s architecture, common failure modes, bias patterns to watch for, and the ethical principles underlying your organization’s approach to AI. Include hands-on practice with real examples, especially edge cases and near misses.

Teach reviewers about algorithmic bias detection—how to spot when an AI system treats different demographic groups unfairly, even if the bias isn’t immediately obvious. For instance, a hiring AI might not explicitly discriminate based on gender, but if it learns to favor candidates who use assertive language more common among men, it effectively creates gender bias. Trained reviewers can catch these patterns and correct them before they cause harm.
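A simple starting point for this kind of bias check is to compare selection rates across groups. The sketch below uses hypothetical function names and made-up data. A ratio well below 1.0 signals a gap worth investigating; a cutoff of 0.8 is a commonly cited rule of thumb from US employment practice, and is a screening heuristic rather than proof of bias or fairness.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, selected) pairs. Returns {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group
    return min(rates.values()) / highest


# Made-up screening outcomes for two groups of ten candidates each.
outcomes = ([("A", True)] * 6 + [("A", False)] * 4
            + [("B", True)] * 3 + [("B", False)] * 7)
rates = selection_rates(outcomes)
print(rates, impact_ratio(rates))
```

A check like this only surfaces the pattern. Finding the cause, such as a language feature acting as a proxy for gender, still takes a trained reviewer.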

Step 6: Monitor System Performance and Human Reviewer Quality

Implementation doesn’t end with deployment. Continuous monitoring ensures your human-in-the-loop architecture remains effective as AI systems evolve, edge cases emerge, and reviewer performance varies.

Why this step matters: Both AI systems and human reviewers can drift over time. AI models may degrade as real-world data distributions shift, while human reviewers can become fatigued, complacent, or inconsistent. Without monitoring, these problems compound silently until a major failure occurs.

How to do it: Track key metrics, including AI accuracy before and after human review, inter-reviewer agreement rates, intervention frequency, and decision reversal patterns. Set up automated alerts for anomalies like sudden shifts in AI confidence scores, unusual intervention patterns, or declining agreement among reviewers.

Conduct regular audits where senior reviewers or external evaluators assess a sample of decisions to verify quality. Create opportunities for reviewers to discuss challenging cases and calibrate their decision-making. This is continuous AI safety improvement—treating safety as an ongoing practice rather than a one-time implementation.
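Two of the metrics mentioned above are straightforward to compute. The sketch below shows an override rate and Cohen's kappa, a standard statistic for agreement between two reviewers that corrects for agreement expected by chance. The function names and sample labels are illustrative assumptions.

```python
from collections import Counter


def override_rate(ai_labels, final_labels):
    """Share of decisions where the final outcome differs from the AI's."""
    pairs = list(zip(ai_labels, final_labels))
    if not pairs:
        raise ValueError("no decisions to evaluate")
    return sum(ai != final for ai, final in pairs) / len(pairs)


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two reviewers on the same cases."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected if both reviewers labeled at random with
    # their own observed label frequencies.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both reviewers used a single identical label throughout
    return (observed - expected) / (1 - expected)


reviewer_1 = ["ok", "ok", "flag", "flag"]
reviewer_2 = ["ok", "flag", "flag", "flag"]
print(cohens_kappa(reviewer_1, reviewer_2))  # 0.5
```

Tracking kappa over time, rather than raw agreement, avoids being misled when one label dominates the queue.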

Step 7: Document Everything for Accountability and Compliance

Thorough documentation serves multiple critical functions: it enables accountability when things go wrong, supports continuous improvement through post-incident analysis, and ensures compliance with emerging AI governance regulations.

Why this step matters: In high-stakes domains, you’ll need to demonstrate that your AI system operates safely and fairly. Regulators, auditors, and the public increasingly demand transparency about how AI decisions are made and how humans maintain control. Without documentation, you cannot prove responsible operation or learn systematically from failures.

How to do it: Maintain detailed logs of AI decisions, human reviews, interventions, and outcomes. Record not just what decisions were made, but the reasoning behind them, especially for overrides and edge cases. Implement version control for your AI models and intervention protocols so you can trace any decision back to the system configuration that produced it.

Create regular reports summarizing system performance, intervention patterns, and lessons learned. These should be accessible to stakeholders at various technical levels, from executives needing high-level assurance to engineers requiring detailed diagnostic information. This level of AI transparency builds trust and enables meaningful oversight.
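To make the logging advice concrete, here is a minimal sketch of an audit record that ties each decision to the model and protocol versions that produced it. The field names, the version strings, and the choice of JSON Lines as a storage format are assumptions for the example, not requirements of any particular regulation.

```python
import json
from datetime import datetime, timezone


def audit_record(case_id, ai_decision, final_decision, reviewer,
                 reason, model_version, protocol_version):
    """Build one log entry that links a decision to its system configuration."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "case_id": case_id,
        "ai_decision": ai_decision,
        "final_decision": final_decision,
        "overridden": ai_decision != final_decision,
        "reviewer": reviewer,
        "reason": reason,
        "model_version": model_version,
        "protocol_version": protocol_version,
    }


def to_log_line(record) -> str:
    """Serialize a record as one JSON line with stable key order."""
    return json.dumps(record, sort_keys=True)


record = audit_record("loan-1042", "reject", "approve", "officer_7",
                      "Employment gap was parental leave",
                      model_version="m-1.4.2", protocol_version="p-3")
# Appending to a file keeps earlier entries untouched:
# with open("audit.jsonl", "a", encoding="utf-8") as log:
#     log.write(to_log_line(record) + "\n")
print(to_log_line(record))
```

One JSON object per line is easy to append to, search, and summarize for the periodic reports described above.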

Common Mistakes to Avoid

As you implement human-in-the-loop systems, watch out for these frequent pitfalls that undermine safety:

Automation bias: This occurs when human reviewers over-trust AI recommendations and approve them without genuine evaluation. Combat this by training reviewers to actively look for problems, regularly introducing test cases with known errors, and creating a culture that values questioning and critical thinking.

Alert fatigue: If you flag too many decisions for human review, reviewers become overwhelmed and start rubber-stamping approvals just to keep up with the workload. Be strategic about intervention triggers, focusing on genuinely high-stakes or uncertain cases rather than creating blanket review requirements.

Insufficient authority: When reviewers feel they lack genuine authority to override AI decisions, or when overrides require extensive justification that slows them down unreasonably, they’ll defer to the system even when they shouldn’t. Ensure reviewers understand they’re not just there to approve AI decisions—they’re there to correct them when necessary.

Neglecting reviewer well-being: Some AI oversight work involves reviewing disturbing content or making emotionally taxing decisions. Support your reviewers with appropriate breaks, mental health resources, and rotation policies that prevent burnout. Their well-being directly impacts the quality of their safety work.
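The test cases with known errors suggested for combating automation bias can be sketched as probes mixed into the normal review queue, followed by a catch-rate calculation. The function names and the idea of tracking a single catch rate are illustrative assumptions.

```python
import random


def seed_queue(real_cases, known_error_cases, rng):
    """Mix known-error probes into the queue at random positions.

    Returns a list of (case, is_probe) pairs. The is_probe flag is for
    later scoring only and should not be shown to reviewers.
    """
    queue = [(case, False) for case in real_cases]
    queue += [(case, True) for case in known_error_cases]
    rng.shuffle(queue)
    return queue


def catch_rate(results):
    """results: iterable of (is_probe, reviewer_rejected) pairs.

    Returns the share of known-error probes that the reviewer rejected.
    """
    probe_outcomes = [rejected for is_probe, rejected in results if is_probe]
    if not probe_outcomes:
        raise ValueError("no probe cases in results")
    return sum(probe_outcomes) / len(probe_outcomes)


queue = seed_queue(["r1", "r2", "r3"], ["p1"], random.Random(0))
results = [(True, True), (True, False), (False, False), (True, True), (True, True)]
print(catch_rate(results))  # 0.75
```

A falling catch rate is an early sign of rubber-stamping. It can also point to alert fatigue, so it is worth reading alongside queue volume.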

Best Practices for Long-Term Success

Beyond avoiding mistakes, embrace these practices for maintaining effective AI safety through human oversight:

Start with more human involvement and gradually increase automation as the system proves reliable. This “fail-safe” approach catches problems early when stakes are lower and builds institutional knowledge about how the AI actually performs.

Create diverse review teams that bring different perspectives, backgrounds, and expertise. Homogeneous teams are more likely to share the same blind spots as the algorithms they’re overseeing, while diverse teams catch a wider range of problems and biases.

Establish clear escalation paths for complex cases. Not every decision needs to go to a senior expert, but there should be a straightforward process for reviewers to escalate situations that exceed their expertise or comfort level.

Regularly update your intervention protocols based on emerging patterns and new research in AI safety engineering. The field evolves rapidly, and yesterday’s best practices may prove insufficient for tomorrow’s challenges.
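The first practice above, starting with heavy human involvement and reducing it as the system proves reliable, can be sketched as a simple schedule for the share of decisions sent to review. The function name, the 98% agreement target, the step size, and the floor are all hypothetical values chosen for illustration, and a schedule like this would only suit decisions that are not already subject to mandatory review.

```python
def next_review_fraction(current, agreement_rate,
                         target=0.98, step=0.1, floor=0.05):
    """Return the share of decisions to send to human review next period.

    The share drops by one step only while humans and the AI keep
    agreeing at or above the target rate. Any shortfall resets it to
    full review, and it never falls below the floor.
    """
    if agreement_rate >= target:
        return max(floor, round(current - step, 2))
    return 1.0


fraction = 1.0
for observed_agreement in [0.99, 0.99, 0.95, 0.99]:
    fraction = next_review_fraction(fraction, observed_agreement)
print(fraction)  # 0.9
```

Resetting to full review after a single weak period is a deliberately cautious choice in this sketch: trust is earned slowly and withdrawn quickly.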

Taking Action: Your Next Steps

Human-in-the-loop design extends far beyond technical implementation: it’s about preserving human agency and values in an automated world. Whether you’re building AI systems, using them in your organization, or simply engaging with them as a citizen, understanding and advocating for proper human oversight helps technology serve humanity rather than replace or overrule it.

If you’re implementing AI in your organization, start by identifying your highest-risk decisions and building review processes around them before full deployment. Don’t wait for a failure to add safety measures—build them in from the beginning.

If you’re using AI systems built by others, ask questions about their safety measures. Does the company employ human reviewers? How are decisions audited? What recourse exists when the system makes mistakes? Organizations building responsible AI systems welcome these questions because they demonstrate the kind of thoughtful engagement that makes AI work better for everyone.

For those studying or entering the field of AI, consider specializing in AI safety and ethics. The technical challenges of building safe, aligned AI systems are among the most important and interesting problems in computer science, and the world desperately needs more people who can bridge the gap between technological capability and human values.

Remember: every powerful technology requires safeguards, and the most effective safeguard for AI is human wisdom, judgment, and values. By maintaining meaningful human involvement in AI decision-making, we ensure that these remarkable tools enhance rather than diminish our humanity. The future of AI isn’t about machines replacing humans—it’s about humans and machines working together, each contributing their unique strengths to create outcomes better than either could achieve alone.

Start small, be deliberate, and never compromise on safety. Your commitment to human-centered AI development contributes to a future where technology serves human flourishing rather than threatening it.

About the Author

Nadia Chen is an expert in AI ethics and digital safety with over a decade of experience helping organizations implement responsible AI systems. Specializing in human-in-the-loop architectures and algorithmic accountability, Nadia works to ensure that AI technologies serve humanity while preserving privacy, fairness, and human agency. Through her writing and consulting work, she makes complex AI safety concepts accessible to non-technical audiences, empowering everyone to engage thoughtfully with artificial intelligence. When she’s not analyzing AI systems or developing safety frameworks, Nadia teaches workshops on digital ethics and advocates for stronger AI governance standards.