General AI: Human-Level Intelligence in Machines

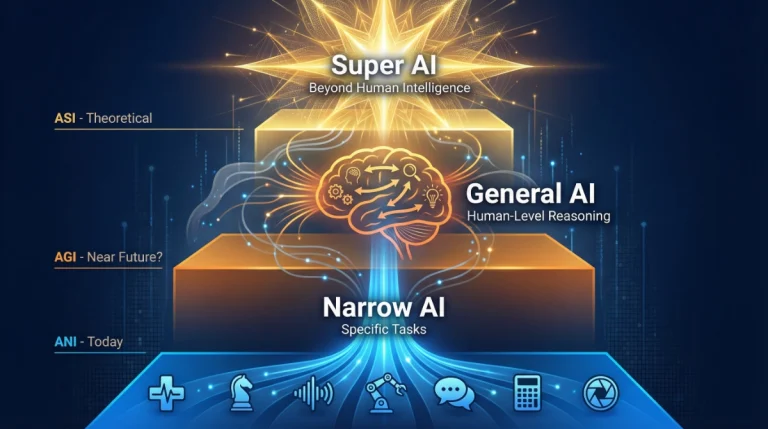

Artificial General Intelligence (AGI), often shortened to general AI, might sound like something straight out of science fiction, but it represents one of the most ambitious and debated goals in technology today. Unlike the AI tools you use daily, such as voice assistants or recommendation algorithms, AGI would be able to understand, learn, and apply knowledge across any domain, much as a human can. Current AI excels at specific tasks; AGI would think, reason, and adapt to entirely new situations without being reprogrammed for each one.

As someone deeply invested in AI ethics and digital safety, I want to guide you through this complex topic with clarity and honesty. Understanding AGI isn’t just about grasping cutting-edge technology; it’s about preparing for a future where the relationship between humans and machines could fundamentally change. Whether you’re curious, cautious, or both, this article will help you navigate the promise and perils of artificial general intelligence.

What Is General AI (AGI)? A Simple Definition

Let me start with the basics. Artificial General Intelligence, often abbreviated as AGI, refers to a type of artificial intelligence that can perform any intellectual task that a human can do. Think of it as the difference between a calculator and a mathematician. A calculator is brilliant at arithmetic but useless for writing poetry or diagnosing medical conditions. A mathematician, however, can tackle arithmetic, learn poetry, study medicine, and adapt to countless other challenges.

Current AI systems are what we call “narrow AI” or “weak AI.” They’re designed for specific functions: Siri answers questions, Netflix recommends shows, and spam filters sort your email. These systems are incredibly sophisticated within their domains, but they can’t carry that skill into unrelated areas; a spam filter can’t recommend a film. AGI, by contrast, would possess general intelligence: the flexibility to learn anything, reason through problems it has never encountered, and apply knowledge creatively across different contexts.

The key distinction lies in adaptability and understanding. Today’s AI recognizes patterns in data but doesn’t truly “understand” what it’s processing. An image recognition system can identify dozens of dog breeds but has no concept of what “dog” means beyond pixels and patterns. AGI would understand what a dog actually is (a living creature, a companion, something that feels and behaves in certain ways) and could apply that understanding in countless situations.

How Would AGI Work? Understanding the Mechanics

The honest truth is that we don’t fully know how to build AGI yet, which is precisely why it remains one of the greatest challenges in computer science. However, researchers have several theoretical approaches they’re exploring, each with its own strengths and limitations.

Neural Networks and Deep Learning

Current AI relies heavily on neural networks, mathematical models loosely inspired by how neurons in the human brain connect and process information. These networks learn by analyzing massive amounts of data and adjusting their internal parameters (weights) to recognize patterns. Deep learning, which stacks many layers of artificial neurons, has powered breakthroughs in image recognition, language translation, and game-playing.
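To make “adjusting internal parameters” concrete, here is a minimal Python sketch of a single artificial neuron learning the logical OR pattern from four examples by gradient descent. It is a toy under stated assumptions: the OR dataset, the learning rate, and the epoch count are arbitrary illustrative choices, and real deep-learning systems stack millions or billions of such parameters across many layers.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x1, x2):
    """The neuron's output: a weighted sum of inputs plus a bias, squashed."""
    return sigmoid(w[0] * x1 + w[1] * x2 + b)

def train_neuron(data, epochs=2000, lr=0.5):
    """Learn two weights and a bias from labeled examples."""
    w = [0.0, 0.0]  # parameters start out knowing nothing
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in data:
            # For a sigmoid output with cross-entropy loss, the gradient
            # with respect to the weighted sum is simply (prediction - target).
            error = predict(w, b, x1, x2) - target
            # Nudge each parameter a little in the direction that reduces error.
            w[0] -= lr * error * x1
            w[1] -= lr * error * x2
            b -= lr * error
    return w, b

# Hypothetical training data: the OR function (output 1 if either input is 1).
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

w, b = train_neuron(OR_DATA)
for (x1, x2), target in OR_DATA:
    print(x1, x2, "->", round(predict(w, b, x1, x2)))
```

Nothing about OR is written into the code; the pattern emerges from repeatedly nudging three numbers against the examples, which is the same basic idea deep learning applies at vastly larger scale.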

For AGI, many researchers believe we’ll need neural architectures far more sophisticated than what exists today. The human brain contains roughly 86 billion neurons with trillions of connections, constantly rewiring themselves as we learn. Current artificial neural networks, even the largest ones, operate on fundamentally different principles and lack the brain’s flexibility and efficiency.

Cognitive Architectures

Another approach attempts to replicate the structure of human cognition itself. These cognitive architectures try to model how humans perceive, remember, reason, and make decisions. Instead of just learning patterns from data, these systems would incorporate knowledge representation, symbolic reasoning, and goal-directed behavior—the kind of thinking humans use when solving complex problems or making plans.

Projects like SOAR and ACT-R have explored these architectures for decades, achieving impressive results in specific domains. However, scaling these systems to match human-level versatility remains extraordinarily difficult.
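Architectures such as SOAR and ACT-R are built around production rules: “if these conditions hold, then conclude or do this.” The Python sketch below is a toy forward-chaining rule engine written only to illustrate that style of symbolic reasoning; it is not SOAR’s or ACT-R’s actual rule language, and the rule and fact names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    """A hypothetical if-then rule: when all conditions are known, add the conclusion."""
    name: str
    conditions: frozenset[str]
    conclusion: str

def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Keep firing rules whose conditions all hold until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                changed = True
    return known

RULES = [
    Rule("dog-is-animal", frozenset({"is_dog"}), "is_animal"),
    Rule("animal-is-alive", frozenset({"is_animal"}), "is_living_creature"),
    Rule("family-dog-is-companion", frozenset({"is_dog", "lives_with_family"}), "is_companion"),
]

derived = forward_chain({"is_dog", "lives_with_family"}, RULES)
print(sorted(derived))
# ['is_animal', 'is_companion', 'is_dog', 'is_living_creature', 'lives_with_family']
```

The engine concludes that a dog is a living creature by chaining two explicit rules, and every step of that reasoning can be inspected. The catch is the one the text describes: everyday knowledge would need vastly more rules than anyone can write by hand.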

Hybrid Approaches

Many researchers now believe AGI will require combining multiple approaches. Imagine a system that uses neural networks for pattern recognition and learning, cognitive architectures for reasoning and planning, and evolutionary algorithms for adaptation and optimization. This hybrid approach mirrors how the human mind integrates different types of thinking—intuitive pattern matching alongside logical reasoning.

Real-World Examples: Where We Stand Today

While true AGI doesn’t exist yet, several projects and systems offer glimpses of what’s possible and highlight how far we still need to go.

GPT-4 and Large Language Models

Large language models like GPT-4 can write essays, answer questions, generate code, and hold surprisingly human-like conversations. These systems show remarkable versatility across language-based tasks, leading some to wonder whether they’re approaching AGI. However, these models arguably lack genuine understanding, don’t update their knowledge after training (beyond the context of a single conversation), have no direct experience of the physical world, and make errors that reveal fundamental limitations. They’re incredibly impressive narrow AI, but they’re not general intelligence.

AlphaGo and Game-Playing Systems

DeepMind’s AlphaGo stunned the world by defeating top human players at Go, a game considered far more complex than chess. Later versions like AlphaZero learned to master chess, shogi, and Go through self-play alone, without human guidance. These achievements show AI can develop sophisticated strategies and adapt within specific domains. Yet these systems can’t transfer their strategic thinking to other areas—AlphaGo can’t help you plan a business strategy or understand a novel.

Robotics and Embodied AI

Researchers increasingly believe AGI will require embodied intelligence—systems that interact with the physical world through robotic bodies. Companies like Boston Dynamics have created robots with impressive physical capabilities, while others work on systems that can manipulate objects and navigate environments. However, connecting physical interaction with high-level reasoning remains an enormous challenge. A robot might navigate a warehouse efficiently but struggle with tasks a toddler finds simple.

Current AI Limitations

Today’s most advanced AI systems share common limitations that reveal the gap to AGI:

They lack common sense reasoning—the everyday knowledge humans take for granted. They can’t truly learn continuously from experience the way humans do. They have no genuine understanding of causation, only correlation. They lack consciousness and self-awareness (though whether AGI requires consciousness remains debated). Most importantly, they can’t generalize their capabilities across fundamentally different domains.

The Potential Impact of AGI: Promises and Possibilities

If researchers eventually achieve AGI, the implications would be profound and far-reaching. Let me walk you through some possibilities while emphasizing that these scenarios remain speculative.

Transforming Healthcare and Medicine

AGI could revolutionize medical diagnosis and treatment by analyzing patient data, medical literature, and treatment outcomes simultaneously, potentially identifying diseases earlier and recommending personalized treatments. Such systems might accelerate drug discovery by simulating molecular interactions and predicting drug efficacy. They could help address the global shortage of healthcare professionals by providing preliminary diagnoses and treatment guidance, though human oversight would remain essential.

Accelerating Scientific Discovery

Scientific research involves connecting insights across disciplines, recognizing patterns in complex data, and generating creative hypotheses—areas where AGI could excel dramatically. Imagine an AI that reads every scientific paper ever published, identifies promising research directions humans might miss, and suggests novel experiments. This could accelerate progress in climate science, materials engineering, fundamental physics, and countless other fields.

Addressing Global Challenges

Climate change, poverty, resource scarcity, and pandemic preparedness involve overwhelming complexity with countless interacting variables. AGI might help by modeling complex systems, optimizing resource allocation, identifying early warning signs of crises, and proposing innovative solutions. However, we must remember that AI can only process the data and values we provide—it’s not a substitute for human wisdom and ethical judgment.

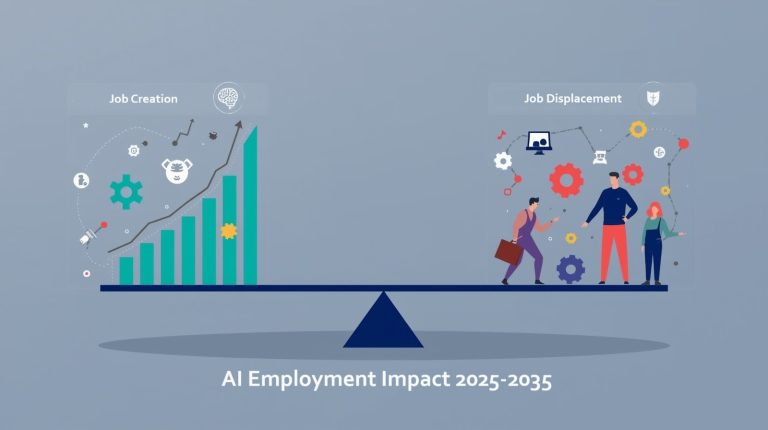

Economic and Workplace Transformation

The economic impact of AGI could be unprecedented. Unlike narrow AI, which automates specific tasks, AGI could potentially perform most intellectual work currently done by humans. This raises profound questions about employment, education, economic systems, and how society creates and distributes value. Some envision a future of abundance where AGI handles tedious work, freeing humans for creative and meaningful pursuits. Others worry about massive unemployment and economic disruption.

The Challenges: Why AGI Remains So Difficult

As someone focused on responsible technology development, I need to be honest about the immense challenges researchers face. Many experts believe AGI is decades away rather than years, and some question whether it’s achievable at all with current approaches.

The Complexity of Human Intelligence

Human intelligence emerges from billions of years of evolution, shaped by the need to survive in complex environments. Our brains integrate multiple types of intelligence: spatial reasoning, social understanding, emotional processing, physical coordination, language, abstract thinking, and more. We don’t fully understand how biological brains produce consciousness, creativity, intuition, or common sense. Building artificial systems that replicate these capabilities may require first understanding them, a scientific challenge we’re still working on.

The Hardware Limitations

The human brain operates on roughly 20 watts of power, about the same as a dim light bulb. Training and running today’s most capable AI systems, which reach human-level performance only on specific tasks, relies on data centers that draw megawatts of power. Creating AGI with practical computational requirements may require fundamentally new hardware architectures or computing paradigms, possibly inspired by biological systems.
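For a sense of scale, the arithmetic below takes 1 megawatt as an illustrative lower bound. That figure is an assumption, since the text says only “megawatts,” and real facilities vary widely.

```latex
\frac{1\,\text{MW}}{20\,\text{W}} = \frac{10^{6}\,\text{W}}{20\,\text{W}} = 5 \times 10^{4}
```

Even at that lower bound, the machine side draws about 50,000 times the brain’s power budget.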

The Data and Learning Problem

Humans learn remarkably efficiently from small amounts of experience. A child who touches a hot stove once learns to be careful around heat in countless future situations. Current AI systems often need thousands or millions of examples to learn a pattern, and that learning doesn’t transfer well to new contexts. Developing systems that learn as efficiently and flexibly as humans remains an unsolved problem.

Safety and Control Challenges

Perhaps the most serious challenge isn’t technical but ethical and practical: how do we ensure AGI systems remain safe and aligned with human values? Unlike narrow AI, which operates within defined boundaries, AGI would possess the flexibility to pursue goals in unexpected ways. Researchers worry about:

- Value alignment: ensuring AGI systems actually want what we want.
- Corrigibility: maintaining the ability to correct or shut down AGI systems if needed.
- Interpretability: understanding why AGI systems make the decisions they make.
- Power concentration: preventing AGI from being misused by bad actors or concentrating power in a few hands.

These aren’t abstract philosophical concerns but practical engineering challenges that must be solved before AGI becomes reality.

The Timeline Debate: When Might AGI Arrive?

Ask ten AI researchers when AGI will be achieved, and you’ll get ten different answers. Some optimists believe we might see AGI within 10-20 years. Others think it’s 50-100 years away or potentially never achievable with current approaches. This uncertainty reflects both the complexity of the challenge and our incomplete understanding of what intelligence truly is.

Recent advances in machine learning and neural networks have been impressive, leading some to predict accelerating progress. Others argue that current approaches face fundamental limitations and that achieving AGI will require breakthrough insights we haven’t discovered yet. The truth is that no one knows for certain—which is precisely why responsible development and ongoing research in AI safety are so crucial.

What seems likely is that the journey toward AGI will involve incremental progress punctuated by occasional breakthroughs. Systems will become increasingly capable and general, blurring the line between narrow AI and AGI. Rather than a single moment when AGI suddenly exists, we may experience a gradual transition in which AI systems become progressively more human-like in their capabilities.

Ethical Considerations and Responsible Development

As someone committed to digital safety and responsible technology, I believe the quest for AGI must be guided by careful ethical consideration at every step. The stakes are simply too high to prioritize speed over safety.

The Need for Inclusive Development

AGI development shouldn’t be limited to a handful of tech companies or wealthy nations. The impact will be global, so the development process should involve diverse perspectives, including ethicists, social scientists, policymakers, and representatives from communities that might be most affected. Different cultures and value systems should inform how we approach AGI design and deployment.

Transparency and Accountability

Organizations working on AGI should be transparent about their progress, safety measures, and potential risks. We need robust oversight mechanisms and accountability structures to ensure development proceeds responsibly. This doesn’t mean sharing every technical detail publicly, but it does mean maintaining open dialogue about goals, methods, and safeguards.

Prioritizing Safety Research

The AI research community has increasingly recognized that AI safety research deserves substantial investment and attention. Organizations like the Machine Intelligence Research Institute, the Center for Human-Compatible AI, and Anthropic’s safety research team work specifically on ensuring advanced AI systems remain beneficial. This research must keep pace with, or ideally stay ahead of, capabilities research.

Preparing Society for Change

Even if AGI is decades away, societies should begin preparing for its potential arrival. This means rethinking education to emphasize skills AI can’t easily replicate, developing social safety nets for potential economic disruption, establishing regulatory frameworks for advanced AI, and fostering public understanding of both opportunities and risks.


What You Can Do: Engaging Responsibly with AGI’s Future

You might wonder what role ordinary people can play in shaping the future of AGI. The answer is quite a lot. This technology will affect everyone, and everyone deserves a voice in how it develops.

Stay Informed

Follow reputable sources covering AI developments. Groups such as Oxford’s Future of Humanity Institute (closed in 2024), the wider AI safety research community, and academic institutions have published accessible research and analysis. Understanding the basics helps you participate in important conversations and make informed decisions about how you interact with AI technologies.

Support Responsible Development

When possible, support companies and organizations that prioritize safety, transparency, and ethical AI development: ones that are open about their work, invest in safety research, and engage with diverse stakeholders. Your choices as a consumer and citizen can influence how technology develops.

Participate in Public Discourse

AGI development shouldn’t be left solely to technologists. Engage in conversations about AI in your community, contact policymakers about AI regulation, share concerns and perspectives, and support education initiatives that help others understand AI. Democracy works best when citizens engage with important issues, and AGI is certainly one of them.

Develop Complementary Skills

While AGI’s timeline remains uncertain, developing skills that complement rather than compete with AI makes sense. This includes creative and artistic abilities, emotional intelligence and empathy, ethical reasoning and judgment, complex interpersonal communication, and adaptability and lifelong learning. These deeply human capabilities will likely remain valuable regardless of how AI technology evolves.

Advocate for Inclusive Benefits

Push for policies ensuring that AGI’s benefits, if achieved, are shared broadly rather than concentrated. This might include supporting universal basic income proposals, education and retraining programs, equitable access to AI technologies, and regulations preventing AI-driven discrimination or harm. Technology’s impact depends not just on what we build but on how we choose to use it and distribute its benefits.

Looking Forward: A Balanced Perspective on AGI

As we conclude this exploration of Artificial General Intelligence, I want to leave you with a balanced perspective. AGI represents both humanity’s greatest technological ambition and one of our most serious responsibilities. The quest for human-level machine intelligence pushes the boundaries of computer science, neuroscience, philosophy, and ethics simultaneously.

Will we achieve AGI? Honestly, no one knows for certain. The technical challenges are immense, and we may discover fundamental barriers we haven’t anticipated. Or we might experience breakthroughs that accelerate progress beyond current expectations. What matters most isn’t predicting exactly when AGI might arrive but ensuring that however and whenever it develops, it does so responsibly, safely, and for the benefit of all humanity.

The future isn’t predetermined. The choices we make today—about research priorities, safety measures, governance structures, and societal preparation—will shape what that future looks like. You have a role in those choices, whether through staying informed, supporting responsible development, participating in public discourse, or simply thinking critically about the kind of future you want to see.

AGI isn’t just a technical problem to solve; it’s a challenge that calls us to think deeply about what we value, what makes us human, and what kind of world we want to create. That’s a conversation worth having, and I encourage you to be part of it.

Remember: technology should serve humanity, not the other way around. As we work toward increasingly capable AI systems, let’s ensure we never lose sight of that fundamental principle. The quest for General AI (AGI) will be one of the defining journeys of our time—let’s approach it with wisdom, care, and hope.

About the Author

Nadia Chen is an AI ethics researcher and digital safety advocate with over a decade of experience helping non-technical users understand and safely engage with emerging technologies. With a background in computer science and philosophy, Nadia specializes in making complex AI concepts accessible while emphasizing responsible use and ethical considerations. She regularly contributes to howAIdo.com, where she focuses on empowering everyday users to navigate the AI landscape with confidence and caution. When she’s not writing about AI safety, Nadia consults with organizations on ethical technology implementation and teaches digital literacy workshops in her community.