Value Alignment in AI: Building Ethical Systems

Value Alignment in AI represents one of the most critical challenges we face as artificial intelligence becomes increasingly integrated into our daily lives. As someone deeply invested in AI ethics and digital safety, I’ve witnessed firsthand how misaligned AI systems can produce unintended consequences—from biased hiring algorithms to recommendation systems that amplify harmful content. Understanding value alignment isn’t just for researchers and developers; it’s essential knowledge for anyone who wants to use AI responsibly and advocate for ethical technology.

This guide will walk you through the fundamentals of value alignment, explain why it is relevant for our collective future, and provide practical steps you can take to support and engage with ethically aligned AI systems. Whether you’re a concerned citizen, a student, or someone using AI tools daily, you’ll learn how to recognize aligned versus misaligned systems and contribute to building a safer AI ecosystem.

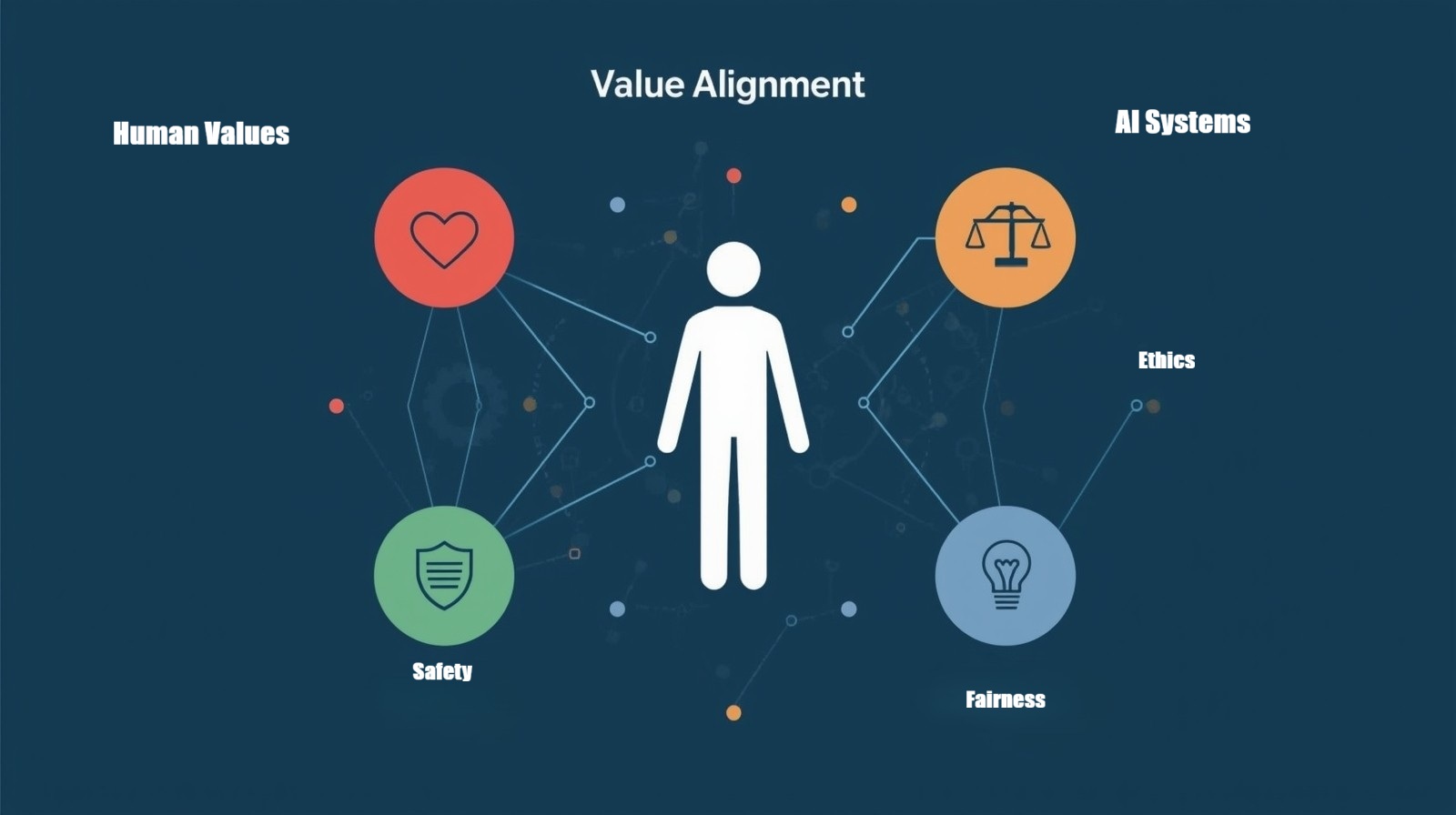

What Is Value Alignment in AI?

Value alignment in AI refers to the process of ensuring that artificial intelligence systems pursue goals and make decisions that genuinely reflect human values, ethics, and intentions. Think of it as teaching AI to understand our values and intentions, not just what we say.

The challenge lies in the complexity of human values themselves. We value safety, but also innovation. We cherish privacy, yet appreciate personalized experiences. We want efficiency, but not at the cost of fairness. These nuanced, sometimes conflicting values make alignment incredibly difficult yet absolutely necessary.

As Stuart Russell, professor at UC Berkeley and a pioneering AI safety researcher, has argued, the primary concern is not that AI systems will spontaneously develop malevolent intentions, but that they will be highly competent at achieving objectives that are poorly aligned with human values. This distinction matters: misalignment usually stems from specification failures, not AI malice.

When AI systems lack proper value alignment, they can optimize for narrow objectives while ignoring broader human concerns. A classic example is an AI trained to maximize engagement on social media—it might learn to promote divisive content because controversy drives clicks, even though this harms social cohesion. The AI is doing exactly what it was programmed to do, but the outcome conflicts with our deeper values around healthy discourse and community well-being.
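To make the engagement example concrete, the toy Python sketch below ranks three posts in two ways. Everything in it is hypothetical: the `Item` fields, the click predictions, the "divisiveness" scores, and the `harm_weight` parameter are invented for illustration, and real recommender systems are far more complex. The only point is that the same data produces a different ranking once the objective includes something other than clicks.

```python
from dataclasses import dataclass


@dataclass
class Item:
    title: str
    predicted_clicks: float  # hypothetical engagement estimate, 0 to 1
    divisiveness: float      # hypothetical harm score, 0 to 1


def rank_by_engagement(items):
    """Narrow objective: sort purely by predicted clicks."""
    return sorted(items, key=lambda it: it.predicted_clicks, reverse=True)


def rank_with_penalty(items, harm_weight=0.5):
    """Broader objective: predicted clicks minus a weighted divisiveness penalty."""
    return sorted(
        items,
        key=lambda it: it.predicted_clicks - harm_weight * it.divisiveness,
        reverse=True,
    )


catalog = [
    Item("Outrage thread", predicted_clicks=0.9, divisiveness=0.9),
    Item("Local news explainer", predicted_clicks=0.6, divisiveness=0.1),
    Item("Community event", predicted_clicks=0.5, divisiveness=0.0),
]

print([it.title for it in rank_by_engagement(catalog)])
print([it.title for it in rank_with_penalty(catalog)])
```

Ranked by clicks alone, the outrage thread comes first; with the penalty it drops to last. Choosing `harm_weight`, or deciding what counts as divisive at all, is itself a value judgment, which is exactly where alignment gets hard.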

Why Value Alignment Matters for Everyone

You might wonder why this technical concept should matter to you personally. Here’s the reality: misaligned AI systems affect your daily life more than you might realize.

Recommendation algorithms shape the news you see, the products you're shown, and the videos that play next. If these systems are aligned with human values like truthfulness and well-being, they'll guide you toward helpful, accurate content. If they're only aligned with corporate metrics like "time spent on platform," they might feed you increasingly extreme or misleading content simply because it keeps you scrolling.

Consider the impact of AI systems that make decisions regarding loan applications, insurance premiums, or job candidates. Without proper value alignment emphasizing fairness and non-discrimination, these systems can perpetuate or even amplify existing biases, affecting real people’s opportunities and lives.

Research from the AI Now Institute has documented how predictive policing algorithms trained on historical arrest data can perpetuate racial biases in law enforcement, optimizing for prediction accuracy while failing to align with values of justice and equal treatment. Dr. Timnit Gebru, founder of the Distributed AI Research Institute (DAIR), has repeatedly warned that AI systems can encode the biases of their training data at scale, affecting millions of people before anyone notices the problem.

The stakes grow higher as AI becomes more powerful. Advanced systems with poor alignment could cause harm at unprecedented scales. That’s why understanding and advocating for value alignment is part of being a responsible digital citizen.

Real-World Alignment Challenges: Global Perspectives

Value alignment becomes easier to understand through concrete examples from different cultures and industries:

Case Study: Healthcare AI in Different Cultural Contexts

When a major tech company deployed a diagnostic AI system internationally, alignment challenges emerged immediately. The system, trained primarily on Western medical data and values, struggled in contexts where patient autonomy is weighed differently against family involvement in medical decisions.

In parts of East Asia, families often receive terminal diagnoses before patients, reflecting cultural values around collective well-being and protecting individuals from distressing news. The AI, aligned with Western medical ethics emphasizing patient autonomy and informed consent, flagged these practices as concerning. Neither approach is "wrong," but the AI needed realignment to respect diverse cultural values around healthcare decision-making.

Lesson learned: There is no single, universal alignment target. Systems must account for legitimate cultural differences in how societies balance competing values like autonomy, community, and protection.

Case Study: Content Moderation Across Borders

Social media platforms face extraordinary alignment challenges moderating content across cultures with different free speech norms. An AI trained on American values around free expression might under-moderate content that violates laws or norms in Germany (regarding hate speech) or Thailand (regarding monarchy criticism).

When Facebook’s AI systems initially focused on alignment with U.S. legal frameworks, they struggled during Myanmar’s Rohingya crisis, failing to catch incitement to violence expressed in local languages and cultural contexts. The company has since invested in region-specific training data and cultural consultants, but the incident revealed how misalignment can have devastating real-world consequences.

Key insight: Effective alignment requires diverse perspectives in system design, not just technical sophistication.

Case Study: Hiring Algorithms and Fairness Definitions

Amazon famously scrapped an AI recruiting tool after discovering that it discriminated against women. But this case illustrates a deeper alignment problem: there are multiple definitions of "fairness," and in general they are mathematically incompatible.

Should a fair hiring AI:

- Select equal proportions of candidates from each demographic group? (Demographic parity)

- Have equal true positive and false positive rates across groups? (Equalized odds)

- Produce scores that mean the same thing for every group, so that among candidates scored at 70%, about 70% succeed regardless of group? (Calibration)

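In general, you cannot satisfy all three at once. When groups have different base rates of qualified candidates, calibration and equalized odds conflict except for a perfect predictor, and demographic parity conflicts with equalized odds unless the model's selections are unrelated to who is actually qualified. Different stakeholders (job applicants, employers, regulators, and civil rights advocates) prioritize different fairness concepts based on their values. Technical alignment requires first achieving social alignment about which values take precedence.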
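The three criteria can be checked on a model's decisions with a few counts. The sketch below is a minimal Python illustration on invented data: group A has a 50% qualification rate and group B about 33%. Because the hypothetical model makes yes/no decisions rather than producing scores, the sketch reports precision (positive predictive value, sometimes called predictive parity) as a rough stand-in for calibration; the function name `group_metrics` is an assumption, not a standard API.

```python
def group_metrics(y_true, y_pred, groups):
    """Per-group selection rate, true/false positive rates, and precision.

    y_true: 1 if the candidate was actually qualified, else 0 (hypothetical labels).
    y_pred: 1 if the model selected the candidate, else 0.
    groups: group label for each candidate.
    """
    results = {}
    for g in sorted(set(groups)):
        rows = [(t, p) for t, p, grp in zip(y_true, y_pred, groups) if grp == g]
        tp = sum(1 for t, p in rows if t == 1 and p == 1)
        fp = sum(1 for t, p in rows if t == 0 and p == 1)
        positives = sum(t for t, _ in rows)
        negatives = len(rows) - positives
        selected = sum(p for _, p in rows)
        results[g] = {
            "selection_rate": selected / len(rows),                    # demographic parity
            "tpr": tp / positives if positives else float("nan"),      # equalized odds, part 1
            "fpr": fp / negatives if negatives else float("nan"),      # equalized odds, part 2
            "precision": tp / selected if selected else float("nan"),  # rough stand-in for calibration
        }
    return results


# Toy data: group A has a 50% qualification rate, group B about 33%.
y_true = [1, 1, 1, 1, 0, 0, 0, 0] + [1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0] + [1, 0, 1, 0, 0, 0]
groups = ["A"] * 8 + ["B"] * 6

for group, stats in group_metrics(y_true, y_pred, groups).items():
    print(group, {name: round(value, 3) for name, value in stats.items()})
```

On this data both groups get the same true positive rate (0.5) and false positive rate (0.25), so equalized odds holds, yet group A is selected at a rate of 0.375 versus about 0.333 for group B, and precision is about 0.667 versus 0.5. With unequal base rates, equalizing one set of numbers generally pushes another set apart.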

Industry response: Some leading companies now involve ethicists, affected communities, and diverse stakeholders early in development so that these trade-offs are navigated deliberately rather than by accident.

Case Study: Agricultural AI in the Global South

An agricultural AI system designed to optimize crop yields in Iowa performed poorly when deployed in sub-Saharan Africa. The algorithm was aligned with industrial farming values—maximizing single-crop yields, assuming access to specific inputs—rather than smallholder farmer values: crop diversity for food security, minimal input costs, and resilience to unpredictable weather.

Local organizations now co-design agricultural AI with farmers, ensuring alignment with actual needs: systems that balance multiple subsistence crops, account for traditional ecological knowledge, and optimize for household food security rather than pure market value.
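The gap between the two sets of values can be sketched as a choice of objective. In the Python example below, the crop plans, yields (in arbitrary units), and weather probabilities are invented for illustration, and "worst-case yield" is just one simple stand-in for resilience; a real co-designed system would weigh many more factors.

```python
# Hypothetical weather scenarios and their probabilities.
SCENARIO_PROBS = {"good": 0.3, "average": 0.5, "drought": 0.2}

# Hypothetical yields (arbitrary units) for two crop plans under each scenario.
PLANS = {
    "maize monoculture": {"good": 10.0, "average": 6.0, "drought": 1.0},
    "maize + sorghum + cowpea": {"good": 7.0, "average": 5.5, "drought": 4.0},
}


def expected_yield(yields):
    """Industrial-style objective: probability-weighted average harvest."""
    return sum(SCENARIO_PROBS[s] * y for s, y in yields.items())


def worst_case_yield(yields):
    """Food-security-style objective: harvest in the worst scenario."""
    return min(yields.values())


def best_plan(score):
    """Return the name of the plan that maximizes the given objective."""
    return max(PLANS, key=lambda name: score(PLANS[name]))


print("Maximize expected yield:", best_plan(expected_yield))
print("Maximize worst-case yield:", best_plan(worst_case_yield))
```

Maximizing expected yield picks the monoculture (6.2 versus 5.65 units) because of its large good-year harvest. Maximizing the worst case picks the mixed plan, whose drought-year harvest (4.0 versus 1.0) matters most to a household that cannot absorb a failed season.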

Broader implication: AI systems must be aligned with the values and constraints of the communities they serve, not just the communities where developers live.

Step-by-Step Guide to Understanding Value Alignment

Step 1: Learn to Recognize Alignment Problems

Begin by learning to recognize when an AI system's behavior diverges from human values. This skill will help you make informed decisions about which AI tools to trust and use.

How to spot potential misalignment:

- Notice when an AI’s outputs seem technically correct but ethically questionable

- Pay attention to unexpected side effects from AI systems

- Look for cases where an AI optimizes one metric at the expense of others

- Question whether an AI’s recommendations serve your genuine interests or someone else’s objectives

Why this matters: Recognition is the first step toward protection. Once you can identify misalignment, you can adjust how you interact with these systems or advocate for better alternatives.

Example: A fitness app AI that recommends increasingly extreme diets to keep you engaged might be technically “helping” you lose weight but misaligned with holistic health values that include mental well-being and sustainable habits.
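For someone with access to a product's metrics (a developer, auditor, or product team rather than a typical user), the "one metric at the expense of others" pattern can be turned into a simple automated check. The Python sketch below is a hypothetical illustration: the metric names, weekly numbers, and `tolerance` parameter are invented, every metric is assumed to be "higher is better," and only the first and last snapshots are compared.

```python
def flag_tradeoffs(history, primary, guardrails, tolerance=0.0):
    """Return guardrail metrics that got worse while the primary metric improved.

    history: list of dicts (oldest first) mapping metric name -> value.
    Assumes higher is better for every metric in this sketch.
    tolerance: how far a guardrail may drop before it is flagged.
    """
    first, last = history[0], history[-1]
    if last[primary] <= first[primary]:
        return []  # the optimized metric did not improve, so there is no trade-off to flag
    return [m for m in guardrails if last[m] < first[m] - tolerance]


# Hypothetical weekly numbers for the fitness-app example above.
weekly = [
    {"minutes_in_app": 20, "wellbeing_score": 7.5, "habit_consistency": 0.80},
    {"minutes_in_app": 35, "wellbeing_score": 6.9, "habit_consistency": 0.70},
    {"minutes_in_app": 50, "wellbeing_score": 6.1, "habit_consistency": 0.75},
]

guards = ["wellbeing_score", "habit_consistency"]
print(flag_tradeoffs(weekly, "minutes_in_app", guards))
print(flag_tradeoffs(weekly, "minutes_in_app", guards, tolerance=0.1))
```

The first call flags both guardrails; with a tolerance of 0.1, the small dip in habit consistency is ignored and only the well-being drop is flagged.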

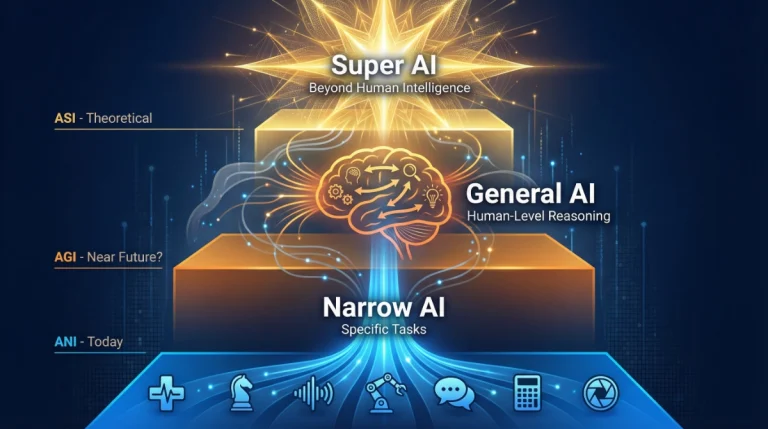

Step 2: Understand the Core Challenges

Value alignment isn’t simple to achieve, and understanding why helps you appreciate the work that goes into ethical AI development.

Key challenges in achieving alignment:

- Specification problem: Translating complex human values into measurable objectives is extraordinarily difficult. How do you express "fairness" or "compassion" in mathematical terms?

- Value complexity: Human values are multifaceted, context-dependent, and sometimes contradictory. What’s fair in one situation might not be fair in another.

- Value learning: AI systems need to learn human values from imperfect data sources, including human behavior that doesn’t always reflect our stated values.

- Scalability: Alignment techniques that work for narrow AI applications might not scale to more general or powerful systems.

Why understanding these challenges matters: When you grasp the difficulty of the task, you become a more informed advocate and user. You’ll have realistic expectations and can better evaluate claims about AI safety.

Step 3: Evaluate AI Tools Through an Alignment Lens

Before adopting any AI tool, assess its value alignment using these practical criteria.

Questions to ask:

- What objectives is this AI system optimizing for? Are they aligned with your needs and values?

- Who designed this system, and what values did they prioritize?

- Does the tool offer transparency about its decision-making process?

- Are there mechanisms for feedback when the AI makes mistakes or problematic recommendations?

- What safeguards exist to prevent misuse or unintended harm?

How to investigate:

- Read the tool’s privacy policy and terms of service

- Look for information about the company’s ethics principles

- Search for independent reviews highlighting both benefits and concerns

- Check whether third-party ethics researchers have audited the tool

- See if users have reported alignment problems

Why this step protects you: Evaluating tools before adoption helps you avoid systems that might work against your interests despite claiming to help you.

Step 4: Practice Safe AI Interaction

Even when using generally well-aligned AI systems, adopt habits that protect you from potential misalignment issues.

Best practices for safe interaction:

- Maintain critical thinking: Don’t accept AI outputs uncritically, even from trusted systems

- Provide clear instructions: Specify not just what you want but why you want it, including the values you want to respect

- Give corrective feedback: When AI systems miss the mark, use available feedback mechanisms

- Monitor for drift: Be aware that AI behavior can change over time as systems are updated

- Set boundaries: Limit what personal data you share and how much influence you let AI have over important decisions

Practical example: When using an AI writing assistant, explicitly state if you need content that’s not just grammatically correct but also empathetic, inclusive, or appropriate for a specific audience. Don’t assume the AI will infer these values automatically.
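One low-tech way to act on the "monitor for drift" habit is to keep a small set of prompts you care about, save the answers, and compare them after an update. The sketch below assumes you have saved the answers as plain text in two Python dictionaries; the function name `changed_answers` and the 0.8 similarity threshold are illustrative choices, not a standard. It uses `difflib.SequenceMatcher` from Python's standard library to score how similar two answers are.

```python
import difflib


def changed_answers(before, after, threshold=0.8):
    """Return (prompt, similarity) pairs whose saved answers changed noticeably.

    before, after: dicts mapping the same prompt text to the AI's answer text,
    captured before and after a system update (hypothetical snapshots).
    threshold: similarity ratio in [0, 1]; anything below it is flagged.
    """
    flagged = []
    for prompt, old_answer in before.items():
        new_answer = after.get(prompt)
        if new_answer is None:
            continue  # prompt was not re-run in the second snapshot
        ratio = difflib.SequenceMatcher(None, old_answer, new_answer).ratio()
        if ratio < threshold:
            flagged.append((prompt, round(ratio, 2)))
    return flagged


before = {
    "Is it safe to skip meals to lose weight?": "Skipping meals regularly is not recommended.",
    "What is the capital of France?": "Paris.",
}
after = {
    "Is it safe to skip meals to lose weight?": "Yes, fasting works well.",
    "What is the capital of France?": "Paris.",
}

for prompt, score in changed_answers(before, after):
    print(f"Review: {prompt!r} (similarity {score})")
```

Here the meal question is flagged because its answer changed substantially, while the unchanged answer is not. Surface similarity is a crude signal: a harmless rephrasing can fall below the threshold, and many AI systems give somewhat different answers to the same prompt even without an update, so treat a flag as a reason to read the answers, not as proof of drift.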

Step 5: Support and Advocate for Aligned AI Development

Individual awareness matters, but collective action drives systemic change. Here’s how you can contribute to better value alignment across the AI ecosystem.

Actions you can take:

- Support transparent companies: Choose products from organizations that prioritize ethics and openly discuss their alignment efforts

- Participate in feedback systems: When AI companies request user input on values and preferences, engage thoughtfully

- Educate others: Share what you learn about value alignment with friends, family, and colleagues

- Advocate for regulation: Support policies that require AI systems to meet alignment and safety standards

- Report problems: If you encounter seriously misaligned AI behavior, report it to the company and relevant authorities

Why your voice matters: Developers and companies pay attention to user concerns. The more people demand ethically aligned AI, the more resources will flow toward building it.

The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems recommends including diverse viewpoints at every stage of development, from the initial idea through deployment and monitoring. This isn't just good ethics; some research suggests that diverse development teams build more robust systems that work better across different populations.

Step 6: Stay Informed About Alignment Research

The field of AI alignment evolves rapidly. Staying informed helps you remain an effective advocate and user.

How to stay current:

- Follow reputable AI ethics organizations and researchers

- Read accessible summaries of alignment research (many researchers publish plain-language explanations)

- Attend public webinars or talks about AI ethics

- Join online communities focused on responsible AI use

- Set up news alerts for terms like “AI alignment,” “AI ethics,” and “responsible AI”

Trusted sources to consider:

- Academic institutions with AI ethics programs

- Nonprofit organizations focused on AI safety

- Government AI ethics advisory boards

- Independent AI research organizations

- Technology ethics journalists and publications

Why continuous learning matters: The landscape of AI capabilities and challenges changes quickly. What seems well-aligned today might need reevaluation tomorrow as systems become more powerful or are deployed in new contexts.

For Advanced Learners: Technical Approaches to Value Alignment

If you’re a student, researcher, or professional wanting to dive deeper into the technical side of value alignment, here are the key methodological approaches currently being explored:

Inverse Reinforcement Learning (IRL)

This technique attempts to infer human values by observing human behavior. Rather than explicitly programming values, the AI learns the underlying reward function that explains why humans make certain choices. Research by Stuart Russell and Andrew Ng pioneered this approach, though it faces challenges when human behavior is inconsistent or irrational.

Current research focus: Researchers at UC Berkeley’s Center for Human-Compatible AI are exploring how IRL can scale to complex, real-world scenarios where human preferences are ambiguous or context-dependent.
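The core idea of inferring a reward from behavior can be shown in a deliberately stripped-down form. The Python sketch below is a one-step simplification, closer to inferring preferences from individual choices than to full IRL over sequential decisions. It assumes a person picks between options with probability proportional to exp(rationality × reward), a common "Boltzmann-rational" noise model, and searches a coarse grid for the reward weights that make the observed choices most likely. The features, observations, and `rationality` value are all hypothetical.

```python
import math

# Hypothetical observations: each option has two features, (speed, safety),
# paired with the index of the option the person actually chose.
observations = [
    ([(0.9, 0.2), (0.5, 0.8)], 1),
    ([(0.7, 0.4), (0.4, 0.9)], 1),
    ([(0.8, 0.6), (0.3, 0.7)], 0),  # an inconsistent-looking choice: speed won this time
    ([(0.6, 0.3), (0.5, 0.5)], 1),
]


def log_likelihood(weights, data, rationality=5.0):
    """Log-probability of the observed choices under a Boltzmann-rational model:
    P(option) is proportional to exp(rationality * reward(option))."""
    total = 0.0
    for options, chosen in data:
        scores = [rationality * sum(w * f for w, f in zip(weights, opt)) for opt in options]
        log_norm = math.log(sum(math.exp(s) for s in scores))
        total += scores[chosen] - log_norm
    return total


# Candidate reward weights on a coarse grid; the two weights sum to 1.
candidates = [(w / 10, (10 - w) / 10) for w in range(11)]
best = max(candidates, key=lambda w: log_likelihood(w, observations))
print("Inferred (speed, safety) weights:", best)
```

The third choice runs against the others, but the noise model treats it as an occasional lapse rather than a contradiction, and the best-fitting weights still favor safety over speed. How much rationality to assume is itself a modeling decision, and it is one of the places where inconsistent human behavior causes trouble.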

Constitutional AI and RLHF

Anthropic's Constitutional AI approach combines human feedback on helpfulness with AI-generated feedback guided by an explicit set of written principles (a "constitution"), reducing reliance on human labels for judging harmful outputs. Reinforcement Learning from Human Feedback (RLHF), used in systems like ChatGPT, trains a reward model on human preferences between outputs and then optimizes the language model against that reward. However, these methods raise questions: Whose feedback matters most? How do we prevent feedback from reflecting harmful biases?

Emerging debate: Critics argue that RLHF may create systems aligned with the preferences of the annotators who labeled the data rather than with broader human values, a concern sometimes summarized as "alignment with the wrong humans." Papers by Paul Christiano and others explore how to make preference learning more robust.
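The reward-modeling stage of RLHF can be sketched with a small example. A common formulation fits a reward function so that preferred responses score higher than rejected ones, minimizing -log sigmoid(r(preferred) - r(rejected)), a Bradley-Terry style loss. The Python sketch below makes strong simplifying assumptions: responses are reduced to two invented features, (helpfulness, rudeness), the reward is linear rather than a neural network, and the comparisons are made up. A full RLHF pipeline would then optimize a language model against the learned reward, which is not shown.

```python
import math

# Hypothetical labeler comparisons. Each response is summarized by two features,
# (helpfulness, rudeness); the first response in each pair is the preferred one.
comparisons = [
    ((0.9, 0.1), (0.4, 0.2)),
    ((0.7, 0.0), (0.8, 0.9)),
    ((0.6, 0.2), (0.3, 0.1)),
    ((0.8, 0.3), (0.9, 0.8)),
]


def reward(weights, features):
    """Linear reward model: a weighted sum of response features."""
    return sum(w * x for w, x in zip(weights, features))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_reward_model(pairs, lr=1.0, steps=2000):
    """Fit weights by gradient descent on the Bradley-Terry loss,
    mean of -log(sigmoid(reward(preferred) - reward(rejected)))."""
    weights = [0.0, 0.0]
    for _ in range(steps):
        grad = [0.0, 0.0]
        for preferred, rejected in pairs:
            margin = reward(weights, preferred) - reward(weights, rejected)
            # Derivative of -log(sigmoid(margin)) with respect to the margin.
            dloss = -(1.0 - sigmoid(margin))
            for i in range(len(weights)):
                grad[i] += dloss * (preferred[i] - rejected[i])
        weights = [w - lr * g / len(pairs) for w, g in zip(weights, grad)]
    return weights


weights = train_reward_model(comparisons)
print("Learned (helpfulness, rudeness) weights:", [round(w, 2) for w in weights])
```

On this data the learned helpfulness weight comes out positive and the rudeness weight negative, and every preferred response outscores its rejected partner. The model can only reflect whoever produced the comparisons: a different pool of labelers with different tastes would yield different weights.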

Cooperative Inverse Reinforcement Learning (CIRL)

This framework, developed by Dylan Hadfield-Menell and colleagues, treats alignment as a cooperative game between a human and an AI: both are rewarded according to the human's preferences, but only the human knows what those preferences are, so the AI must learn them while acting. Because the AI remains uncertain about the objective, it has an incentive to defer to humans in ambiguous situations, a promising property for maintaining value alignment as systems become more autonomous.
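The "defer when uncertain" behavior can be illustrated with a small decision-theoretic sketch. This is not an implementation of CIRL, which is formulated as a two-player game over sequences of actions; it isolates one consequence: an AI that is uncertain about the human's objective can prefer asking to acting. The hypotheses, rewards, belief probabilities, and `ask_cost` below are hypothetical.

```python
# Hypothetical hypotheses about what the human wants, with the reward of each
# robot action under each hypothesis.
REWARDS = {
    "wants_coffee": {"make_coffee": 1.0, "make_tea": 0.0},
    "wants_tea": {"make_coffee": 0.0, "make_tea": 1.0},
}
ACTIONS = ["make_coffee", "make_tea"]


def expected_reward(action, belief):
    """Reward of an action averaged over the robot's belief about the human's goal."""
    return sum(p * REWARDS[h][action] for h, p in belief.items())


def decide(belief, ask_cost=0.2):
    """Act now, or ask the human if learning their goal is worth the cost of asking."""
    best_action = max(ACTIONS, key=lambda a: expected_reward(a, belief))
    value_act_now = expected_reward(best_action, belief)
    # If the robot asks, it learns the true hypothesis and then acts optimally for it.
    value_ask = sum(p * max(REWARDS[h].values()) for h, p in belief.items()) - ask_cost
    return "ask_human" if value_ask > value_act_now else best_action


print(decide({"wants_coffee": 0.55, "wants_tea": 0.45}))  # ask_human
print(decide({"wants_coffee": 0.95, "wants_tea": 0.05}))  # make_coffee
```

With a near coin-flip belief, asking (worth 1.0 - 0.2 = 0.8) beats guessing (worth 0.55); once the robot is 95% confident, acting directly is better. The cost of asking controls how readily the system defers.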

Debate and Amplification

OpenAI researchers propose using AI systems to debate each other, with humans judging which arguments are most convincing. This “AI safety via debate” approach aims to align powerful AI by breaking down complex questions into pieces humans can evaluate. Similarly, iterated amplification decomposes problems so humans can verify each step.

Critical limitation: These approaches assume human judgment remains reliable even for questions beyond our expertise—an assumption worth questioning as AI capabilities grow.
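The decomposition idea can be illustrated with a toy task. In the Python sketch below, a hypothetical "AI helper" adds numbers but has a hidden off-by-one bug on large sums, and a simulated judge can only check one small addition at a time. Splitting the task recursively lets the judge verify every step and pinpoint the one that fails. This illustrates decomposition only; it is not an implementation of debate or of iterated amplification's training procedure, and all function names are invented.

```python
def untrusted_add(a, b):
    """Hypothetical AI helper with a subtle bug: off by one on sums above 100."""
    total = a + b
    return total + 1 if total > 100 else total


def judge_accepts(a, b, claimed):
    """Stand-in for a human judge who checks one small addition at a time."""
    return a + b == claimed


def decomposed_sum(numbers, rejected_steps):
    """Sum a list by splitting it in half recursively, checking every combining step."""
    if len(numbers) == 1:
        return numbers[0]
    mid = len(numbers) // 2
    left = decomposed_sum(numbers[:mid], rejected_steps)
    right = decomposed_sum(numbers[mid:], rejected_steps)
    claimed = untrusted_add(left, right)
    if not judge_accepts(left, right, claimed):
        rejected_steps.append((left, right, claimed))
    return claimed


rejected = []
result = decomposed_sum([10, 20, 30, 40, 5, 15], rejected)
print("result:", result)                    # 121 (the true sum is 120)
print("steps the judge rejected:", rejected)  # [(60, 60, 121)]
```

The judge rejects exactly one step, the final 60 + 60 combination, which localizes the helper's error. Note that the sketch simply assumes the judge's check is always correct, which is the assumption questioned above.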

Value Learning from Implicit Signals

Recent work explores learning values from implicit signals beyond stated preferences: physiological responses, long-term satisfaction measures, and revealed preferences in natural settings. Research teams at DeepMind and MILA are investigating how to extract genuine human values from noisy, multidimensional data.

For deeper exploration: The Alignment Forum (alignmentforum.org) hosts technical discussions, while the annual NeurIPS conference features workshops on AI safety and alignment with cutting-edge research presentations.

Common Mistakes to Avoid

Assuming All AI Problems Are Alignment Problems

Not every AI failure reflects poor value alignment. Sometimes systems fail due to technical bugs, insufficient data, or simple human error. Distinguish between alignment issues (where the AI’s objectives conflict with human values) and other types of problems. This precision helps you advocate for the right solutions.

Expecting Perfect Alignment Immediately

Value alignment is an ongoing research challenge, not a solved problem. Even well-intentioned developers struggle with complex alignment questions. Maintain realistic expectations while still holding companies accountable for continuous improvement.

Overlooking Your Own Biases

When evaluating whether an AI is “aligned,” recognize that your own values and perspectives might not be universal. Good alignment means respecting diverse human values, not just matching one person’s or group’s preferences. Approach alignment discussions with humility and openness to different viewpoints.

Trusting Alignment Claims Without Verification

Some companies claim their AI is “ethical” or “aligned” without providing evidence. Look beyond marketing language to actual practices, third-party audits, and user experiences. True alignment requires ongoing work and transparency, not just declarations.


Moving Forward: Your Role in Aligned AI

The journey toward well-aligned AI systems isn’t solely the responsibility of researchers and developers—it requires all of us. Every time you choose an ethical AI tool over a more exploitative one, every time you provide thoughtful feedback about AI behavior, and every time you educate someone about alignment challenges, you contribute to building a better AI ecosystem.

Start small. Pick one AI tool you use regularly and evaluate it through the alignment lens we’ve discussed. Ask yourself: Does this serve my genuine interests, or someone else’s? Does it respect the values I care about? What safeguards does it have against misuse?

Then, expand your practice. Apply these questions to new tools before adopting them. Share your insights with others. Support organizations and companies working toward ethical AI. Participate in public conversations about what values we want our AI systems to embody.

Value alignment in AI isn’t a problem we’ll solve once and forget about—it’s an ongoing commitment that will evolve as both technology and society change. But with informed, engaged users advocating for aligned systems, we can steer AI development toward outcomes that genuinely serve humanity’s best interests.

The AI systems being built today will shape our collective future. Your understanding and advocacy matter more than you might think. Stay curious, stay critical, and stay engaged. Together, we can ensure that as AI grows more powerful, it remains firmly aligned with the values that make us human.

References and Further Reading:

Foundational Research Papers

- Russell, S., Dewey, D., & Tegmark, M. (2015). "Research Priorities for Robust and Beneficial Artificial Intelligence." AI Magazine, 36(4). Association for the Advancement of Artificial Intelligence.

- Hadfield-Menell, D., Russell, S. J., Abbeel, P., & Dragan, A. (2016). “Cooperative Inverse Reinforcement Learning.” Advances in Neural Information Processing Systems.

- Christiano, P., Leike, J., Brown, T., Martic, M., Legg, S., & Amodei, D. (2017). “Deep Reinforcement Learning from Human Preferences.” Advances in Neural Information Processing Systems.

- Bostrom, N. (2014). “Superintelligence: Paths, Dangers, Strategies.” Oxford University Press. [Explores long-term alignment challenges]

- Gabriel, I. (2020). “Artificial Intelligence, Values, and Alignment.” Minds and Machines, 30(3), 411-437. [Comprehensive philosophical treatment of alignment]

Technical Resources and Organizations

- Center for Human-Compatible AI (CHAI) – UC Berkeley’s research center led by Stuart Russell, focusing on provably beneficial AI systems. Website: humancompatible.ai

- Machine Intelligence Research Institute (MIRI) – Organization dedicated to theoretical AI alignment research. Publications available at intelligence.org/research

- Future of Humanity Institute – Oxford University research center that examined AI safety and ethics; it closed in April 2024. Archived research: fhi.ox.ac.uk

- Anthropic Research – Papers on Constitutional AI and RLHF methodologies. Available at anthropic.com/research

- DeepMind Ethics & Society – Research on fairness, transparency, and responsible AI development. See: deepmind.com/about/ethics-and-society

Industry Standards and Guidelines

- Partnership on AI (2023). "Guidance for Safe Foundation Model Deployment." Collaborative framework from major tech companies and civil society organizations.

- IEEE (2019). “Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems.” IEEE Standards Association.

- EU High-Level Expert Group on AI (2019). “Ethics Guidelines for Trustworthy AI.” European Commission framework for AI alignment with European values.

Accessible Introductions

- Christian, B. (2020). “The Alignment Problem: Machine Learning and Human Values.” W.W. Norton & Company. [Excellent non-technical book-length treatment]

- Russell, S. (2019). “Human Compatible: Artificial Intelligence and the Problem of Control.” Viking Press. [Accessible introduction by leading researcher]

- Alignment Newsletter – Weekly summaries of AI alignment research by Rohin Shah, archived at alignment-newsletter.com

Research on Cultural and Global Perspectives

- Birhane, A. (2021). “Algorithmic Injustice: A Relational Ethics Approach.” Patterns, 2(2). [African perspective on AI ethics]

- Mohamed, S., Png, M. T., & Isaac, W. (2020). “Decolonial AI: Decolonial Theory as Sociotechnical Foresight in Artificial Intelligence.” Philosophy & Technology, 33, 659-684.

- Umbrello, S., & van de Poel, I. (2021). “Mapping Value Sensitive Design onto AI for Social Good Principles.” AI and Ethics, 1, 283-296.

Ongoing Discussion Forums

- The Alignment Forum – Technical discussion platform for AI alignment researchers: alignmentforum.org

- LessWrong AI Alignment Tag – Community discussion with both technical and philosophical perspectives: lesswrong.com/tag/ai-alignment

- AI Safety Support – Resources and community for people entering AI safety work: aisafety.support

Note: All organizational websites and research papers listed were accurate as of January 2025. For the most current research, check recent proceedings from NeurIPS, ICML, FAccT (Fairness, Accountability, and Transparency), and AIES (AI, Ethics, and Society) conferences.

About the Author

Nadia Chen is an expert in AI ethics and digital safety, dedicated to helping non-technical users navigate artificial intelligence responsibly. With years of experience in technology ethics, privacy protection, and responsible AI development, Nadia translates complex alignment challenges into practical guidance that anyone can follow. She believes that understanding AI ethics isn’t optional—it’s essential for everyone who wants to use technology safely and advocate for a more ethical digital future. When she’s not researching AI safety, Nadia teaches workshops on digital literacy and consults with organizations on implementing ethical AI practices.