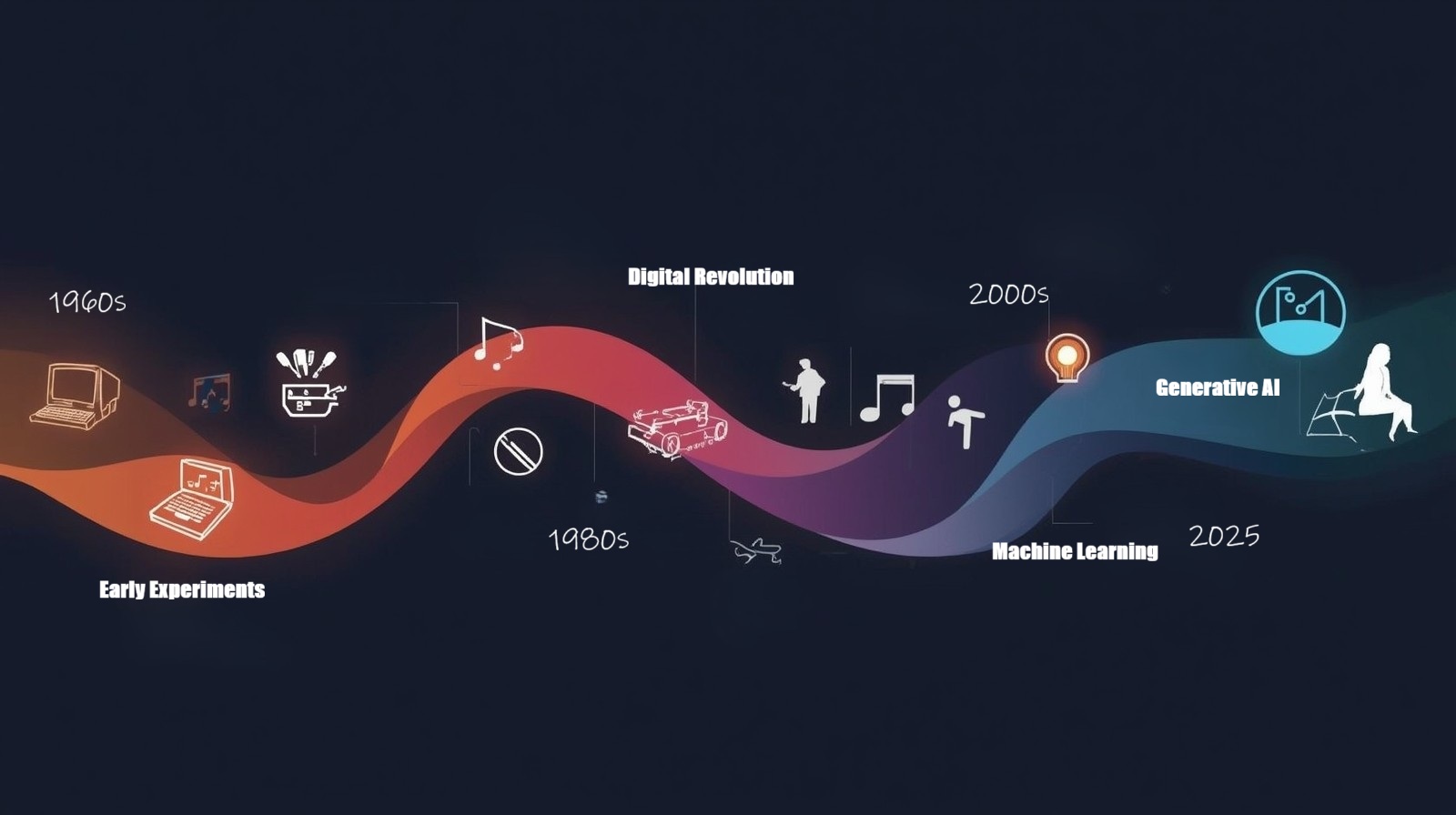

AI Creative Collaboration: From the 1960s to Today

The evolution of AI in creative collaboration is one of the most fascinating journeys in modern technology: a story that spans more than six decades of innovation, experimentation, and occasional controversy. When I first started researching this topic, I expected a straightforward timeline. Instead, I discovered a rich tapestry of human ambition, artistic vision, and technological breakthrough that continues to reshape how we think about creativity itself.

Today, we witness AI-generated artwork selling at major auction houses, algorithms composing symphonies, and writing assistants helping authors craft bestselling novels. But this didn’t happen overnight. The path from early computer experiments to sophisticated creative partnerships involved countless researchers, artists, and visionaries who dared to ask, “Can machines be creative?”

This article takes you through that remarkable journey, exploring how artificial intelligence evolved from calculating basic patterns to becoming a genuine collaborator in creative endeavors. Whether you’re an artist curious about AI tools, a writer exploring new technologies, or simply someone fascinated by innovation, understanding this evolution helps us appreciate both where we’ve been and where we’re heading.

The Pioneering Years: 1960s–1970s

When Computers First Met Creativity

The seeds of creative AI collaboration were planted in an era when computers filled entire rooms and operated on punch cards. In 1965, German mathematician Frieder Nake created some of what many consider the first computer-generated artworks: simple geometric patterns that challenged our understanding of authorship and creativity.

These weren’t the sophisticated AI art generators we know today. Early creative computing involved painstaking programming of mathematical algorithms that could produce visual patterns or simple melodies. The computer acted more like an elaborate drawing compass than a creative partner.

The Victoria and Albert Museum’s digital art collection, which started acquiring computer art in 1969, now contains over 3,000 digital art and design objects spanning decades. The museum’s collection includes works by pioneering artists such as Vera Molnar, Manfred Mohr, and Frieder Nake, documenting the earliest explorations of computer-generated creativity.

AARON, developed by artist Harold Cohen starting in the late 1960s at the University of California, San Diego, and named in the early 1970s, represents a landmark achievement from this era. Unlike simple pattern generators, AARON could create original drawings based on rules about composition and form. Cohen spent decades refining AARON, and according to the Computer History Museum, the system is “one of the longest-running, continually maintained AI systems in history.”

What made AARON special wasn’t just its output—it was Cohen’s insistence that the computer was his collaborator, not merely his tool. Cohen himself described the relationship: “perhaps AARON would be better described as an expert’s system than as an expert system: not simply because I have served as both knowledge engineer and as resident expert, but because the program serves as a research tool for the expansion of my own expert knowledge rather than to encapsulate that knowledge for the use of others.”

Music’s Early Digital Experiments

AI in music composition also took its first tentative steps around this time. In 1957, before the creative computing boom of the 1960s, Lejaren Hiller and Leonard Isaacson used the ILLIAC I computer at the University of Illinois to compose the “Illiac Suite,” widely regarded as the first score composed by an electronic computer. The piece used probability calculations and compositional rules derived from classical music theory.

These early experiments taught researchers something crucial: creativity isn’t just randomness. It requires structure, rules, and often constraints. This insight would shape AI creative tools for decades to come.
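
To make the idea of “randomness plus rules” concrete, here is a toy Python sketch. It is not a reconstruction of Hiller and Isaacson’s program: the scale, the two rules, and the names `allowed` and `compose` are all assumptions chosen for illustration. Random notes are proposed, and only those that pass the rules are kept.

```python
import random

# C major scale as MIDI note numbers (an illustrative choice).
SCALE = [60, 62, 64, 65, 67, 69, 71, 72]


def allowed(previous, candidate):
    """Hypothetical rules: never repeat a note, never leap more than 7 semitones."""
    return candidate != previous and abs(candidate - previous) <= 7


def compose(length, seed=0):
    """Propose random notes and keep only the ones that satisfy the rules."""
    rng = random.Random(seed)   # seeded so the same seed gives the same melody
    melody = [60]               # start on middle C
    while len(melody) < length:
        candidate = rng.choice(SCALE)
        if allowed(melody[-1], candidate):
            melody.append(candidate)
    return melody


if __name__ == "__main__":
    print(compose(8, seed=1))
```

Dropping the `allowed` check leaves pure chance. Tightening it (say, allowing only stepwise motion) makes the output more predictable, which is the trade-off between freedom and constraint described above.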

The Knowledge-Based Era: 1980s–1990s

From Patterns to Understanding

The 1980s brought a fundamental shift in how AI approached creativity. Instead of simple rule-following, researchers began building systems that incorporated knowledge about creative domains. This era emphasized expert systems—AI programs that captured human expertise in structured ways.

David Cope’s EMI (Experiments in Musical Intelligence), which he began developing in 1981, could analyze the style of composers like Bach or Mozart and generate new compositions in their manner. When EMI’s compositions were played alongside genuine Bach pieces, audiences often couldn’t tell the difference. This raised fascinating questions: What is musical creativity? Is style replication a form of creation?

The Desktop Revolution

The late 1980s and 1990s democratized creative computing in ways that would prove essential for future AI collaboration tools. Personal computers became affordable and powerful enough to run creative software. Adobe Photoshop launched in 1990, and while not AI-powered initially, it established the model of digital tools augmenting human creativity.

This period also saw the rise of procedural generation in video games—algorithms creating landscapes, levels, and content. Games like “Elite” (1984) generated entire universes from compact code, demonstrating how computational creativity could scale beyond what humans could manually create.
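
The core trick behind generating a universe from compact code is that a seeded random number generator always produces the same sequence, so content can be recomputed on demand instead of stored. The Python sketch below illustrates that idea only. It is not Elite’s actual algorithm, and the syllable table, the fields, and the `make_system` function are invented for this example.

```python
import random

# Hypothetical building blocks, invented for illustration.
SYLLABLES = ["la", "ve", "ti", "on", "ra", "di", "so", "en"]
ECONOMIES = ["agricultural", "industrial", "high-tech"]


def make_system(galaxy_seed, index):
    """Rebuild star system number `index` from the seed alone; nothing is stored."""
    rng = random.Random(galaxy_seed * 10_000 + index)
    syllable_count = rng.randint(2, 3)
    name = "".join(rng.choice(SYLLABLES) for _ in range(syllable_count)).capitalize()
    return {
        "name": name,
        "economy": rng.choice(ECONOMIES),
        "population_billions": round(rng.uniform(0.1, 6.0), 1),
    }


if __name__ == "__main__":
    for i in range(3):
        print(make_system(galaxy_seed=42, index=i))
```

Calling `make_system(42, 7)` twice returns identical data, so a game only needs to remember the seed and the index, not the world itself.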

The Machine Learning Revolution: 2000s–2015

When AI Started Learning From Examples

The evolution of AI in creative collaboration accelerated dramatically with the rise of machine learning. Instead of programming explicit rules, researchers could now train AI systems on vast collections of creative works, allowing algorithms to discover patterns independently.

In 2015, Google’s DeepDream captured public imagination by transforming photographs into surreal, dream-like images. While initially a visualization tool for understanding neural networks, artists quickly adopted DeepDream for creative expression. This marked a turning point: AI wasn’t just a research curiosity anymore—it was becoming an artistic medium.

Neural style transfer, introduced by researchers in 2015, allowed anyone to apply the artistic style of famous paintings to their photographs. Suddenly, your vacation photo could look like a Van Gogh painting or a Picasso cubist work. These techniques relied on convolutional neural networks analyzing and reapplying artistic patterns.

Music Gets Smarter

AI music generation evolved significantly during this period. Systems like Sony CSL’s Flow Machines (developed throughout the 2010s) could compose melodies in various genres by learning from existing music databases. In 2016, Flow Machines co-created “Daddy’s Car,” a song in the style of The Beatles that demonstrated how far algorithmic composition had progressed.

Writing Gets an Assistant

AI writing tools emerged more gradually than their visual counterparts. Early systems focused on grammar checking (like Grammarly, founded in 2009) and predictive text. The real breakthrough came with language models that could generate coherent text.

OpenAI’s GPT-2, released in 2019, demonstrated that neural networks could write surprisingly human-like text on almost any topic. While initially controversial due to concerns about misinformation, GPT-2 showed that AI-assisted writing could move beyond spell-checking to actual content generation.

The Generative AI Explosion: 2016–2023

GANs Change Everything

Generative Adversarial Networks (GANs), introduced by Ian Goodfellow and colleagues in 2014 and refined throughout the late 2010s, revolutionized AI art creation. GANs pit two neural networks against each other: a generator produces images while a discriminator tries to tell them apart from real ones, and each network improves by trying to beat the other.
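
For readers who want to see that competitive loop in miniature, here is a heavily simplified Python sketch. Instead of images it uses single numbers, the generating network (the generator) is just a line `G(z) = a*z + b`, and the judging network (the discriminator) is a one-input logistic model. These simplifications, the sample data, and the function names are assumptions made for illustration. Real GANs use deep networks and a machine learning framework.

```python
import math


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def discriminator_step(w, c, real, fake, lr):
    """One gradient step that makes D(x) = sigmoid(w*x + c) better at spotting fakes."""
    grad_w = grad_c = 0.0
    for x in real:                       # loss term: -log D(x)
        p = sigmoid(w * x + c)
        grad_w += -(1.0 - p) * x
        grad_c += -(1.0 - p)
    for x in fake:                       # loss term: -log(1 - D(x))
        p = sigmoid(w * x + c)
        grad_w += p * x
        grad_c += p
    n = len(real) + len(fake)
    return w - lr * grad_w / n, c - lr * grad_c / n


def generator_step(a, b, w, c, noise, lr):
    """One gradient step that makes G(z) = a*z + b look more 'real' to D."""
    grad_a = grad_b = 0.0
    for z in noise:                      # loss term: -log D(G(z))
        p = sigmoid(w * (a * z + b) + c)
        grad_a += -(1.0 - p) * w * z
        grad_b += -(1.0 - p) * w
    n = len(noise)
    return a - lr * grad_a / n, b - lr * grad_b / n


real = [3.8, 4.1, 4.0, 4.3]      # stand-in "real" data, clustered near 4
noise = [-1.0, -0.5, 0.5, 1.0]   # random inputs fed to the generator
a, b = 1.0, 0.0                  # generator starts out producing values near 0
w, c = 0.0, 0.0                  # discriminator starts out undecided

fake = [a * z + b for z in noise]
w, c = discriminator_step(w, c, real, fake, lr=0.1)   # the judge learns first
a, b = generator_step(a, b, w, c, noise, lr=0.1)      # then the forger adapts
```

After this single round, `w` is positive (the discriminator has learned that larger values look real) and `b` has moved up from 0 toward the real data. Training a GAN means repeating these two steps many times. Toy setups like this one tend to oscillate rather than settle, which hints at why GANs needed years of refinement.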

In 2018, a GAN-generated portrait titled “Edmond de Belamy” sold at Christie’s auction house for $432,500. Whether this represented genuine art or an expensive novelty sparked heated debate, but it undeniably marked AI’s arrival in the traditional art world.

StyleGAN, developed by NVIDIA researchers, pushed photorealism to new heights. By 2020, GAN-generated faces looked indistinguishable from photographs of real people. Artists began using StyleGAN not just to generate images but to explore concepts of identity, reality, and algorithmic bias.

DALL-E and the Text-to-Image Revolution

2022 brought the breakthrough that made AI creative collaboration accessible to everyone: text-to-image models like DALL-E 2, Midjourney, and Stable Diffusion.

OpenAI released DALL-E 2 in April 2022, Midjourney launched its first beta version in July 2022, and Stability AI released Stable Diffusion in August 2022. For the first time, anyone could describe a scene in plain language and watch an AI generate detailed, creative images within seconds.

These tools didn’t just generate pretty pictures—they sparked genuine human-AI creative partnerships. Artists used AI to rapidly prototype ideas, explore variations, and push beyond their habitual styles. Designers incorporated AI into professional workflows. The technology moved from novelty to utility remarkably quickly.

The impact was immediate and widespread. In August 2022, Jason Allen’s Midjourney-generated artwork “Théâtre D’opéra Spatial” won first place in the digital art category at the Colorado State Fair, sparking intense debate about AI’s role in art. Major publications, including The Economist and Corriere della Sera, began using Midjourney for covers and illustrations.

Language Models Mature

Large language models reached new levels of sophistication. GPT-3 (2020) and GPT-4 (2023) could write articles, stories, poetry, and code with impressive fluency. These models understood context, maintained consistency across long texts, and could adapt their style based on instructions.

Writers initially feared replacement but soon discovered a different reality: AI writing assistants excel at different tasks than humans. They’re brilliant at first drafts, brainstorming, and overcoming writer’s block. Humans remain essential for emotional depth, lived experience, and editorial judgment.

Modern Creative AI: 2024–Present

Multimodal Models and Cross-Domain Creation

The latest chapter in the evolution of AI in creative collaboration features multimodal models: AI systems that work across text, images, audio, and video. GPT-4V can analyze images and generate text about them. Models like Gemini integrate multiple creative capabilities in a single platform.

Sora and similar video generation AI tools emerged in 2024, allowing creators to generate entire video clips from text descriptions. While still imperfect, these tools hint at a future where video production becomes as accessible as image creation is today.

Music Reaches New Heights

AI music generation achieved remarkable sophistication. Platforms like Suno and Udio can generate complete songs, including vocals, instruments, and arrangement, from text prompts, while research systems like Google’s MusicLM generate music from text descriptions. You can request “an upbeat jazz piece with piano and saxophone” or “a melancholic indie folk song” and receive surprisingly polished results.

According to a 2025 survey by LANDR of over 1,200 music makers, 87% of artists have incorporated AI into at least one part of their process, with 29% already using AI song generators. The AI music market is experiencing explosive growth, with projections showing it will reach $38.7 billion by 2033 from $3.9 billion in 2023, representing a compound annual growth rate of 25.8%.
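
The growth rate quoted above can be checked from the two endpoint figures. A compound annual growth rate is the constant yearly rate that would turn the starting value into the ending value over the given number of years. The short Python sketch below applies that standard formula. The function name `cagr` is simply a label chosen here.

```python
def cagr(start_value, end_value, years):
    """Constant yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures from the projection above, in billions of US dollars.
rate = cagr(start_value=3.9, end_value=38.7, years=2033 - 2023)
print(f"{rate:.1%}")  # prints 25.8%
```

The result matches the stated 25.8%, so the projection’s endpoints and growth rate are at least consistent with each other.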

These tools serve different creative needs: hobbyists create personalized songs, filmmakers generate custom soundtracks, and musicians use AI for rapid prototyping before human refinement.

Real-Time Collaboration

Perhaps the most exciting development is real-time creative collaboration between humans and AI. Tools like Photoshop’s Generative Fill let designers select part of an image, describe what they want, and have AI fill in photorealistic details within seconds. GitHub Copilot helps programmers write code by predicting their intentions and suggesting implementations.

This represents a fundamental shift from AI as a tool to AI as a collaborative partner. The creative process becomes a conversation: humans provide vision, direction, and judgment, while AI offers execution speed, variation, and technical skill.

Key Lessons From History

What the Journey Teaches Us

Looking back at the evolution of AI in creative collaboration, several patterns emerge that help us understand both past developments and future possibilities.

Augmentation, Not Replacement: Throughout history, creative AI has enhanced human capabilities rather than replacing them entirely. Photography didn’t kill painting; synthesizers didn’t eliminate acoustic instruments. Each new technology expanded creative possibilities while humans retained essential roles in vision, emotion, and meaning-making.

Democratization Drives Adoption: The most successful creative AI tools made previously elite skills accessible. Text-to-image models gave everyone access to visual creation. Music generation democratized composition. This accessibility often sparked backlash from professionals but ultimately expanded creative communities.

Quality Emerges Gradually: Early computer art looked primitive compared to human work. Today’s AI creations often rival or exceed human output in technical quality. This progression from novelty to utility typically takes longer than enthusiasts predict but arrives faster than skeptics expect.

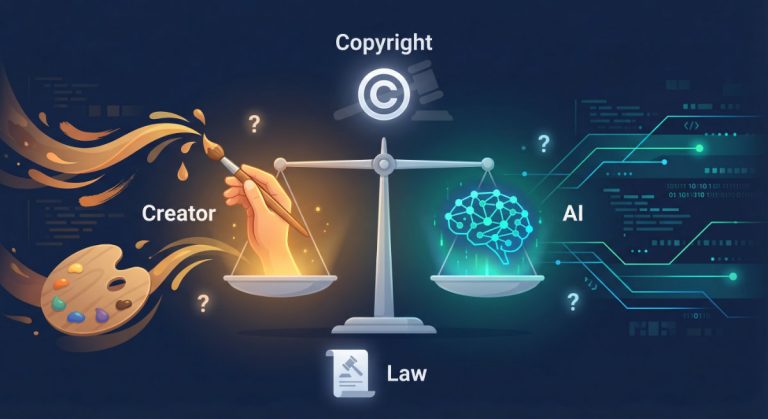

Ethical Questions Persist: Every generation of creative AI raises concerns about authorship, copyright, artistic value, and job displacement. These aren’t new issues—they’re recurring themes that each technological wave must address anew.

The Human Element Remains Central

Why Humans Still Matter

Despite remarkable technical progress, human creativity remains irreplaceable in key ways. AI excels at pattern recognition, technique application, and rapid iteration. Humans provide lived experience, cultural context, intentionality, and emotional resonance.

The most successful creative work using AI combines both strengths. A photographer uses AI to enhance lighting but decides on composition and subject. A writer uses AI to draft options but provides the insight that makes writing meaningful. A musician uses AI to generate backing tracks but performs the melody with human expression.

The evolution of AI in creative collaboration ultimately tells a story not of replacement but of partnership: an ongoing negotiation between human vision and machine capability that continues reshaping creative possibility.

Getting Started With Creative AI

Practical Next Steps

If you’re inspired to explore AI creative collaboration yourself, here’s how to begin:

Start With Free Tools: Platforms like Bing Image Creator, ChatGPT (free tier), and various music generators offer excellent starting points without financial commitment. Experiment widely before investing in premium subscriptions.

Learn Effective Prompting: The quality of AI output depends heavily on how you communicate your vision. Practice describing what you want clearly, providing examples, and iterating on results. Think of prompting as a new creative skill worth developing.

Combine Human and AI Strengths: Use AI for tasks it handles well—generating variations, handling technical execution, overcoming creative blocks—while you focus on direction, emotional resonance, and refinement. The best results come from genuine collaboration.

Study the Ethics: Understand ongoing debates about training data, copyright, attribution, and fair compensation. Make informed choices about which tools and practices align with your values.

Join Creative Communities: Online forums, Discord servers, and social media groups dedicated to AI creativity offer support, inspiration, and practical advice. Learning from others accelerates your own development.

Maintain Your Creative Voice: Let AI handle technical challenges while you provide the unique perspective, lived experience, and emotional truth that only you can contribute. Technology amplifies but doesn’t replace authentic creative vision.

Looking Forward

The Future of Creative Partnership

The evolution of AI in creative collaboration continues to accelerate. Emerging developments suggest even more integrated partnerships ahead: real-time collaborative creation, personalized AI that learns individual creative styles, and seamless cross-domain tools working across text, image, music, and video simultaneously.

Yet the fundamental dynamic remains constant: humans bringing vision, meaning, and purpose while AI provides capability, speed, and technical execution. This partnership, refined over six decades, continues evolving into new forms that expand creative possibility for everyone.

The story isn’t finished—it’s just entering its most exciting chapter, one where you can participate directly by exploring these remarkable tools yourself.

References:

– Computer History Museum. “Harold Cohen and AARON—A 40-Year Collaboration” (September 24, 2019). https://computerhistory.org/blog/harold-cohen-and-aaron-a-40-year-collaboration/

– Victoria and Albert Museum. “A history of collecting digital objects at the V&A” (2025). https://www.vam.ac.uk/articles/a-history-of-collecting-digital-objects-at-the-va

– Whitney Museum of American Art. “Harold Cohen: AARON” (February 3–May 19, 2024). https://whitney.org/exhibitions/harold-cohen-aaron

– LANDR. “New Survey Reveals How 87% of Artists Really Use AI” (November 20, 2025). https://www.hypebot.com/hypebot/2025/11/new-survey-reveals-how-87-of-artists-really-use-ai.html

– Music Mentor. “AI Music Adoption Rates 2025: Industry Growth, Artist Usage, and Genre Trends” (2025). https://musicmentor.ai/ai-music-adoption-rates-2025-industry-growth-artist-usage-and-genre-trends/

– Wikipedia. “Midjourney” (Updated November 2025). https://en.wikipedia.org/wiki/Midjourney

– Creative Bloq. “Midjourney vs Dall-E 3 vs Stable Diffusion: which AI image generator is best?” (June 12, 2024). https://www.creativebloq.com/ai/ai-art/midjourney-vs-dall-e-3-vs-stable-diffusion-which-ai-image-generator-is-best

– Tokenized. “Stable Diffusion Release Date & Timeline” (November 29, 2022). https://tokenizedhq.com/stable-diffusion-release-date/

About the Author

Abir Benali is a friendly technology writer passionate about making AI accessible to everyone. With a background in both creative writing and technology education, Abir specializes in translating complex AI concepts into clear, actionable guidance for non-technical users. Through howAIdo.com, Abir helps thousands of readers discover practical ways to use AI tools in their daily creative work, always emphasizing the human element that makes technology meaningful. When not writing about AI, Abir enjoys experimenting with new creative tools and exploring how technology can empower rather than replace human creativity.