Microsoft’s Rho-alpha: Robots That Learn Like Humans

Key Points

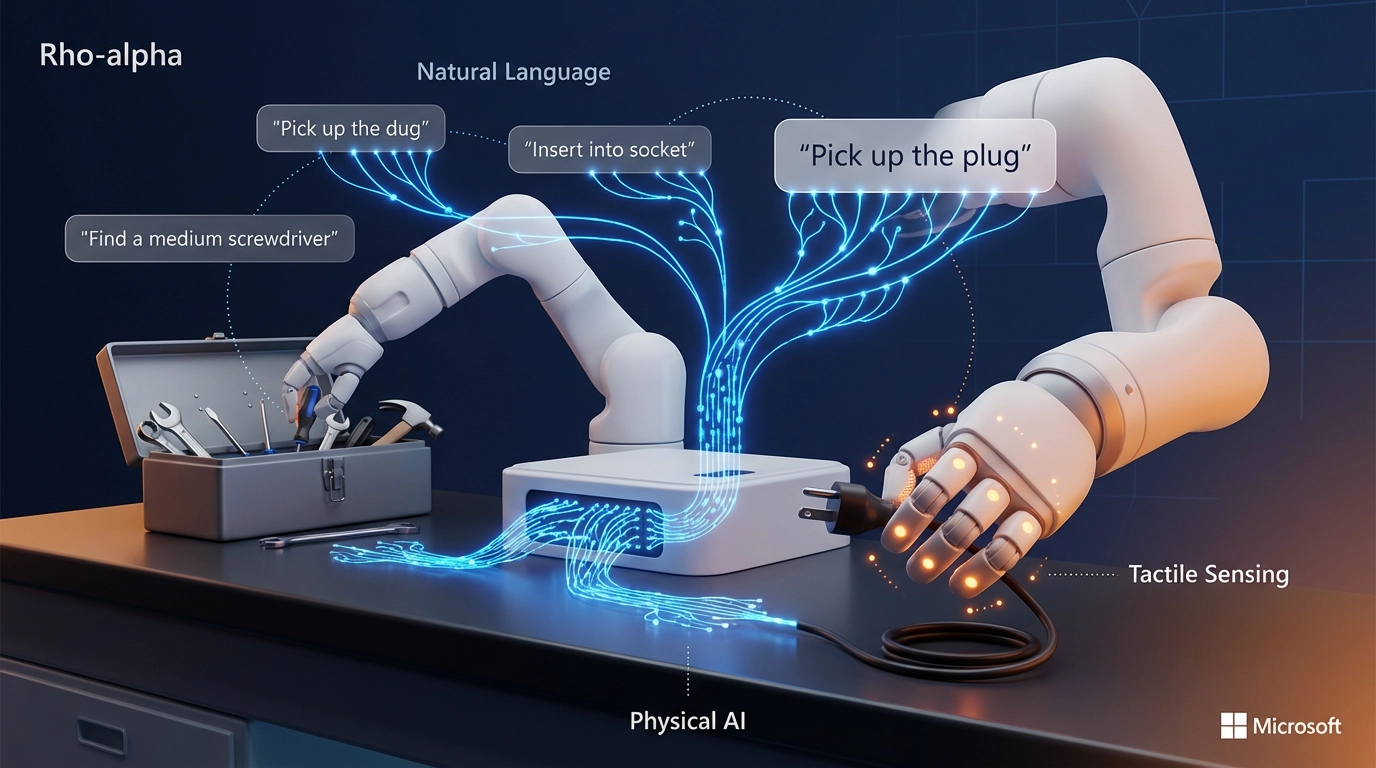

- Microsoft Research launched Rho-alpha, the company’s first robotics AI model derived from its Phi vision-language family

- The model translates natural language instructions directly into robot control signals for complex two-handed manipulation tasks

- Rho-alpha incorporates tactile sensing beyond traditional vision-based systems, enabling robots to adjust actions based on physical feedback

- Available through Microsoft’s Research Early Access Program, with broader release via Microsoft Foundry planned

- Represents Microsoft’s shift toward “physical AI”: intelligent systems that interact directly with the unpredictable real world

Background

For decades, robotics remained confined to factory floors, where every movement followed rigid scripts. Assembly line robots excel at repetition but crumble when faced with the messy unpredictability of real-world environments. (ℹ️ Microsoft Research)

The emergence of vision-language-action (VLA) models started changing this landscape, enabling robots to perceive and reason with growing autonomy. However, these systems still struggled with nuanced physical interactions—the kind humans handle effortlessly without thinking.

Microsoft’s latest innovation directly tackles this challenge, bringing together advanced AI, tactile sensing, and continuous learning to create robots that adapt rather than merely execute.

What Happened

Microsoft Research unveiled Rho-alpha (ρα) on January 21, 2026, marking the company’s first dedicated robotics foundation model. Built on Microsoft’s Phi series of vision-language models, Rho-alpha represents what the company calls a “VLA+ model”—expanding beyond standard vision-language-action capabilities. (ℹ️ Microsoft Research)

The system’s standout feature is its ability to understand plain English commands and convert them into precise robot movements. Tell a Rho-alpha-powered robot to “push the green button with the right gripper” or “insert the plug into the bottom socket,” and it executes the task using coordinated two-arm manipulation.

But Rho-alpha goes further than visual processing. The model integrates tactile sensing, allowing robots to feel their way through tasks and adjust in real time when something doesn’t go as planned. During Microsoft’s demonstrations, when a robot struggled to insert a plug, human operators could guide it using intuitive devices like a 3D mouse, and the system learned from that correction.
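To make the correction idea concrete, here is a minimal Python sketch of one way operator overrides could be recorded as training examples. It is an illustration only: Microsoft has not published Rho-alpha’s interface, so `Correction`, `CorrectionLog`, and `choose_action` are hypothetical names, and the observation and action vectors are made-up stand-ins for real sensor readings and control signals.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Sequence


@dataclass
class Correction:
    """One moment where a human overrode the model (hypothetical record)."""
    observation: List[float]    # stand-in for camera + tactile readings
    policy_action: List[float]  # what the model wanted to do
    human_action: List[float]   # what the operator did instead


@dataclass
class CorrectionLog:
    """Collects overrides so they could later be used for fine-tuning."""
    records: List[Correction] = field(default_factory=list)


def choose_action(
    observation: Sequence[float],
    policy_action: Sequence[float],
    human_action: Optional[Sequence[float]],
    log: CorrectionLog,
) -> List[float]:
    """Return the action to execute, logging any operator override."""
    if human_action is None:
        # No intervention: run the model's own action.
        return list(policy_action)
    # Intervention: keep a record, then follow the operator.
    log.records.append(
        Correction(list(observation), list(policy_action), list(human_action))
    )
    return list(human_action)


log = CorrectionLog()
choose_action([0.1, 0.0], [0.5, 0.5], None, log)        # model acts alone
choose_action([0.1, 0.2], [0.5, 0.5], [0.4, 0.6], log)  # operator steps in
print(len(log.records))
```

Only the steps where a person intervened are stored, so the log concentrates on the situations the model handled poorly. How Rho-alpha actually turns such corrections into learning is not described in the source.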

Microsoft developed Rho-alpha’s training data through a hybrid approach. The team combined physical robot demonstrations with synthetic data generated using NVIDIA’s Isaac Sim framework running on Azure infrastructure. This combination helps overcome the scarcity of real-world robotics training data, especially for less common sensing modalities like tactile feedback.
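As a rough picture of what mixing the two data sources can look like, the Python sketch below draws a training batch with a fixed share of real demonstrations and fills the rest with synthetic ones. The `Demo` record, the `sample_batch` helper, and the 25% real share are assumptions made for illustration; the source does not say what ratio or sampling scheme Microsoft used.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Demo:
    """A single demonstration (hypothetical record)."""
    source: str       # "real" or "synthetic"
    instruction: str  # the plain-English command


def sample_batch(
    real: List[Demo],
    synthetic: List[Demo],
    batch_size: int,
    real_fraction: float,
    rng: random.Random,
) -> List[Demo]:
    """Draw a shuffled batch with a fixed share of real demonstrations."""
    if not 0.0 <= real_fraction <= 1.0:
        raise ValueError("real_fraction must be between 0 and 1")
    n_real = round(batch_size * real_fraction)
    n_synthetic = batch_size - n_real
    # Sample with replacement, since real data is usually the scarce side.
    batch = rng.choices(real, k=n_real) + rng.choices(synthetic, k=n_synthetic)
    rng.shuffle(batch)
    return batch


real = [Demo("real", "insert the plug into the bottom socket")]
synthetic = [Demo("synthetic", f"simulated task {i}") for i in range(100)]
batch = sample_batch(real, synthetic, batch_size=8, real_fraction=0.25,
                     rng=random.Random(0))
print(sum(d.source == "real" for d in batch), "real of", len(batch))
```

Fixing the share per batch keeps the scarce real demonstrations from being drowned out by a much larger synthetic pool.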

Why It Matters

Adapting to real-world conditions is where most robots fall short. They operate like highly specialized tools: brilliant at specific tasks but helpless when conditions change. Rho-alpha’s continuous learning approach, which draws on tactile feedback and human corrections, is meant to close that gap.

The model’s natural language interface democratizes robot programming. Instead of writing complex code, operators can simply describe what needs to happen. This accessibility could accelerate robotics adoption across industries where technical expertise is scarce.

For industries beyond manufacturing, such versatility matters immensely. Healthcare facilities, logistics warehouses, agricultural operations, and service industries need robots that adapt to messy, changing environments where no two days are identical. Rho-alpha’s design targets exactly these scenarios.

Microsoft is currently evaluating Rho-alpha on dual-arm robot setups and humanoid robots, with plans to publish detailed technical documentation in the coming months.

What’s Next

Organizations interested in experimenting with Rho-alpha can apply to Microsoft’s Research Early Access Program. The company plans broader availability through Microsoft Foundry, though specific timing hasn’t been announced. (ℹ️ Microsoft Research)

Microsoft emphasizes that Rho-alpha represents foundational technology: a platform that robotics manufacturers, integrators, and end-users can customize for their specific robots and scenarios using their own data.

The research team continues working on extending Rho-alpha’s sensing capabilities beyond tactile feedback to accommodate force sensing and other modalities. They’re also refining techniques so the model can continuously learn from human corrections during deployment.

Ashley Llorens, Corporate Vice President and Managing Director of Microsoft Research Accelerator, frames this development within a broader transformation: “Physical AI, where agentic AI meets physical systems, is poised to redefine robotics in the same way that generative models have transformed language and vision processing.” (ℹ️ Microsoft Research)

For anyone following AI’s evolution, Rho-alpha signals an exciting frontier—the moment when artificial intelligence truly steps into the physical world, learning not just from text and images but from touch, experience, and direct human guidance.

Source: Microsoft Research—Published on January 21, 2026

Original article: https://www.microsoft.com/en-us/research/story/advancing-ai-for-the-physical-world/

About the Author

Alex Rivera is a creative technologist dedicated to making AI accessible and exciting for everyone. With a passion for demystifying complex technologies, Alex specializes in helping non-technical users discover practical, creative applications for artificial intelligence in their daily lives and work. Through clear, beginner-friendly guides and inspiring examples, Alex shows that AI isn’t just for experts—it’s a powerful creative tool anyone can learn to use.