OpenAI: AI Agents Face Unsolvable Security Challenge

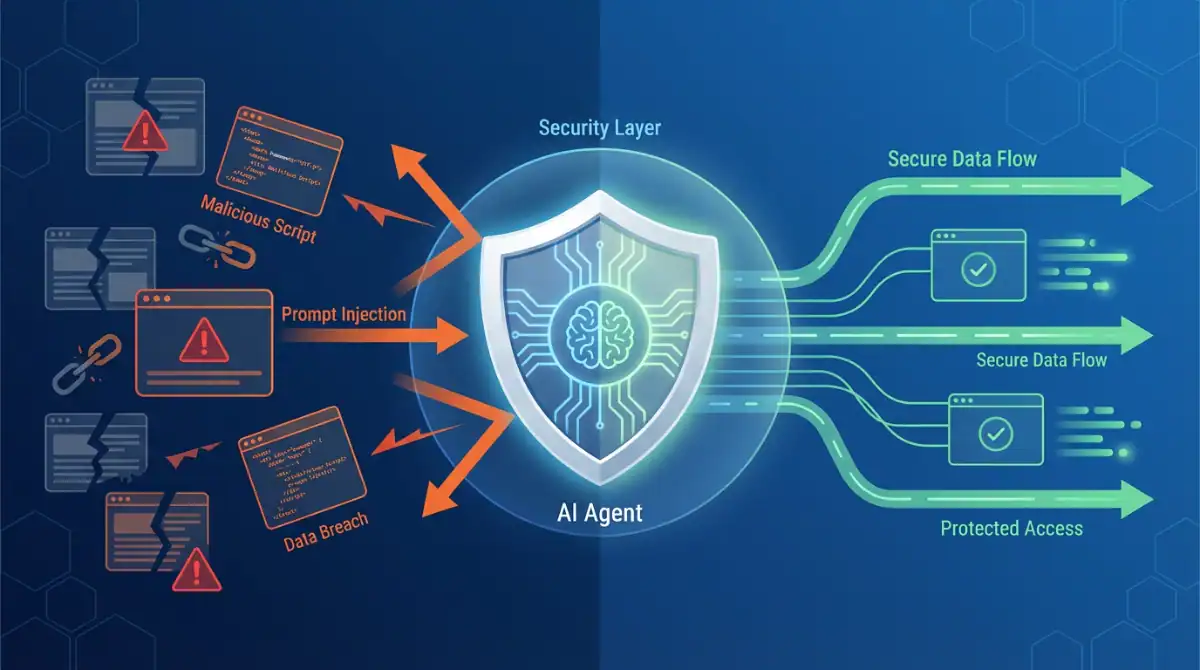

OpenAI has acknowledged a sobering reality about AI security: prompt injection attacks targeting AI agents like ChatGPT Atlas may never be completely solved. The company’s candid admission comes as AI-powered browsers gain more autonomy to act on behalf of users, raising critical questions about safety and trust.

Key Points

- OpenAI admits prompt injection attacks against AI agents are “unlikely to ever be fully solved”

- The company shipped a security update for ChatGPT Atlas after discovering new attack patterns

- An automated AI “attacker” using reinforcement learning helps identify vulnerabilities before hackers do

- Security experts warn the risk increases as AI agents gain more access to sensitive user data

- UK’s National Cyber Security Centre echoes concerns about this persistent threat

Background

Since launching ChatGPT Atlas in October 2025, OpenAI has faced mounting security concerns. Security researchers quickly demonstrated that carefully placed words in documents could manipulate the AI browser’s behavior. Prompt injection works by hiding malicious instructions inside ordinary content like emails, web pages, or documents—instructions that humans don’t notice but AI agents interpret as commands.

The UK’s National Cyber Security Centre warned in December 2025 that these attacks “may never be totally mitigated” (ℹ️ TechCrunch), advising organizations to focus on reducing risk rather than expecting complete prevention.

What Happened

In a detailed blog post published December 2025, OpenAI revealed it had shipped a major security update for Atlas after internal testing uncovered a new class of prompt injection attacks. The update included a newly adversarially trained model and strengthened safeguards (ℹ️ CyberScoop).

“Prompt injection, much like scams and social engineering on the web, is unlikely to ever be fully ‘solved,'” OpenAI stated, comparing the challenge to ongoing human-targeted scams that can be reduced but never eliminated.

The company demonstrated how AI agents avoid malicious links and prompt injection using a concrete example: their automated attacker planted a malicious email in a test inbox. When the AI agent scanned messages to draft an out-of-office reply, it followed hidden instructions in the malicious email instead—sending a resignation letter to the user’s boss rather than the intended auto-response.

Why It Matters

This admission carries significant implications for the AI industry’s push toward autonomous agents. As Rami McCarthy, principal security researcher at Wiz, explained to TechCrunch: “A useful way to reason about risk in AI systems is autonomy multiplied by access.”

AI agents with browser capabilities don’t just display information—they read emails, scan documents, click links, and take actions on your behalf. This combination of autonomy and access creates an attractive target for attackers. A single malicious link or hidden prompt in a webpage could trigger unintended actions like forwarding sensitive emails, making purchases, or deleting cloud files.

The company acknowledged that “agent mode” in ChatGPT Atlas “expands the security threat surface” (ℹ️ Fox News). The more an AI can do on your behalf, the more damage becomes possible when something goes wrong.

OpenAI’s Defense Strategy

OpenAI isn’t giving up—they’re taking a proactive approach using an “LLM-based automated attacker.” This AI-powered security bot, trained through reinforcement learning, acts like a hacker searching for ways to inject malicious instructions into AI agents.

The system works by proposing attack scenarios, running simulations to see how the target agent would respond, then refining the attack strategy based on that feedback. Because OpenAI has internal access to the agent’s reasoning, they can theoretically identify vulnerabilities faster than external attackers (ℹ️ VentureBeat).

“Our reinforcement learning-trained attacker can steer an agent into executing sophisticated, long-horizon harmful workflows that unfold over tens (or even hundreds) of steps,” OpenAI noted, adding they discovered attack patterns not found in human red-teaming or external reports.

What’s Next

OpenAI recommends users take several precautions to minimize risk:

- Use logged-out mode when the agent doesn’t need authenticated access

- Provide specific instructions rather than broad commands like “take whatever action is needed”

- Carefully review confirmation requests before allowing sensitive actions

- Enable “Watch mode” to explicitly confirm actions like sending messages or making payments

The company emphasized this will be an ongoing challenge requiring continuous defense improvements, faster patch cycles, and layered security controls—much like the constant battle against traditional web scams.

Security expert McCarthy remained cautious about the current state of agentic browsers: “For most everyday use cases, agentic browsers don’t yet deliver enough value to justify their current risk profile,” he told TechCrunch.

Protecting Yourself

While complete elimination of prompt injection risks isn’t possible, you can significantly reduce exposure:

Limit access: Only grant AI browsers access to what they absolutely need Be specific: Give narrow, explicit instructions rather than broad mandates Stay updated: Enable automatic updates to receive security patches quickly Review actions: Treat AI-generated actions as drafts requiring your approval Use logged-out mode: When possible, let the agent work without authenticated access

Frequently Asked Questions

What is prompt injection in AI?

Prompt injection is a security attack where malicious instructions are hidden inside ordinary content like web pages, emails, or documents. When an AI agent reads this content, it may follow the hidden commands instead of the user’s original instructions.

Why can’t prompt injection be completely fixed?

OpenAI compares it to online scams targeting humans—it’s an ongoing challenge rather than a solvable bug. The fundamental issue is that AI language models struggle to reliably distinguish between legitimate user instructions and malicious injected commands.

Is ChatGPT Atlas safe to use?

While OpenAI has implemented multiple security layers, no AI browser is completely secure from prompt injection. Users should enable safety features like Watch mode, use logged-out mode when possible, and carefully review any actions before approval.

What makes AI browsers vulnerable?

AI browsers combine autonomy (ability to take actions) with access (to emails, documents, and web services). This combination creates a high-value target—a single hidden prompt could trigger unintended actions affecting sensitive data.

How is OpenAI fighting prompt injection?

OpenAI uses an automated AI “attacker” trained with reinforcement learning to discover vulnerabilities before real hackers do. They also employ adversarially trained models, rapid security updates, and layered defenses.

Source: OpenAI Blog Post, TechCrunch, Fox News, CyberScoop, VentureBeat — Published December 2025 – January 2026

Original articles:

References

- TechCrunch: OpenAI says AI browsers may always be vulnerable to prompt injection attacks

- Fox News: OpenAI admits AI browsers face unsolvable prompt attacks

- VentureBeat: OpenAI admits prompt injection is here to stay

- CyberScoop: OpenAI says prompt injection may never be ‘solved’ for browser agents

About the Author

This article was written by Abir Benali, a friendly technology writer who explains AI tools to non-technical users. Abir specializes in making complex tech topics accessible and actionable for everyday readers, with a focus on practical safety and security guidance.