AI Music Generation: Your Complete Creative Guide

AI Music Generation has opened a door we never knew existed—one that leads to infinite melodies, harmonies, and rhythms crafted not just by human hands but by intelligent algorithms that learn, adapt, and create. We remember the first time we experimented with an AI music tool: the excitement, the skepticism, and ultimately, the wonder at hearing something genuinely musical emerge from lines of code. That moment changed how we think about creativity itself.

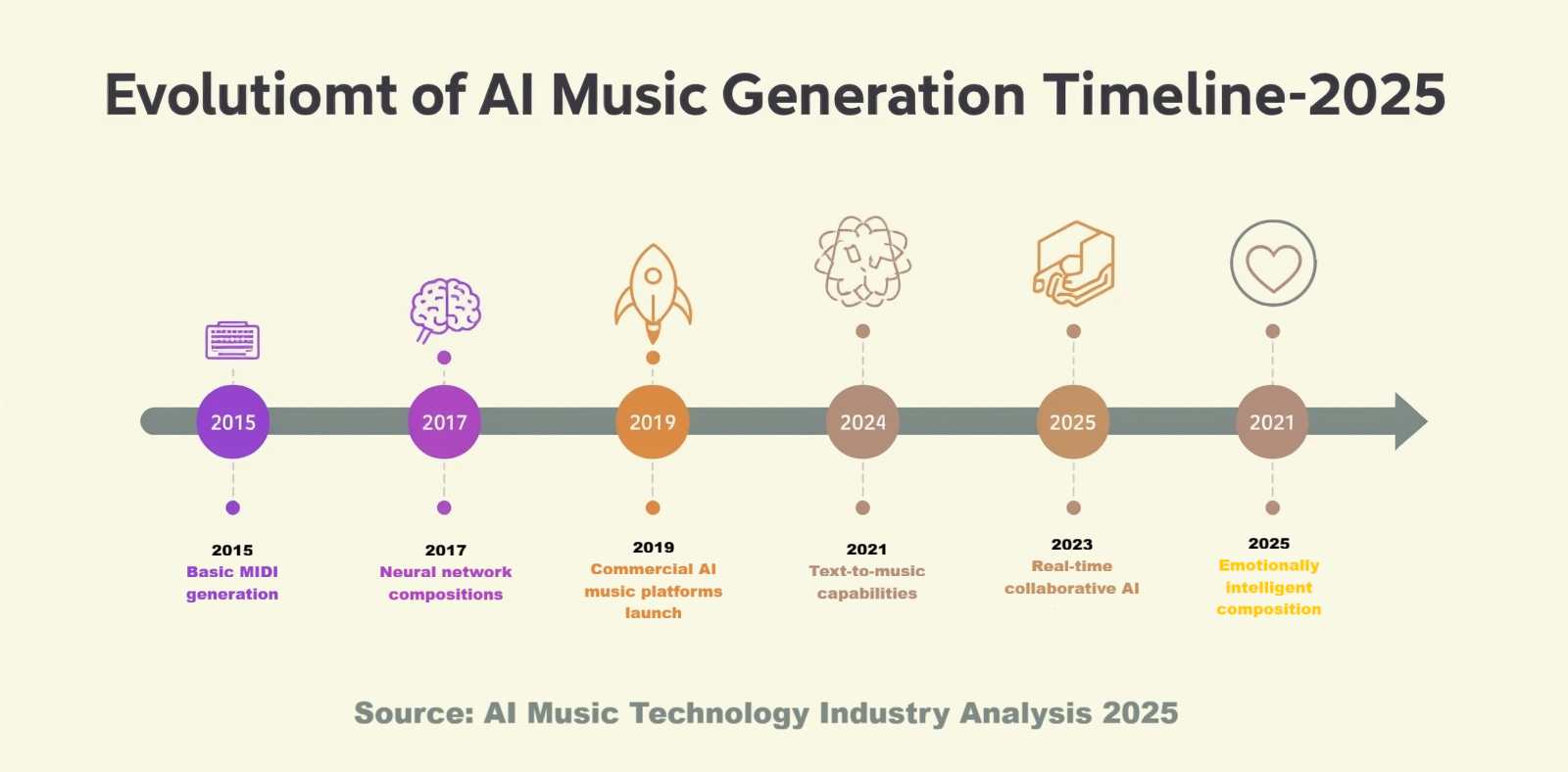

Whether you’re a seasoned composer looking for fresh inspiration, an independent artist seeking affordable production tools, or someone who simply loves music but never learned an instrument, artificial intelligence is democratizing music creation in ways that seemed like science fiction just a few years ago. The technology has evolved from generating simple MIDI sequences to crafting complex, emotionally resonant compositions across virtually any genre imaginable.

In this comprehensive guide, we’ll explore everything you need to know about AI music generation—from the fundamental concepts and cutting-edge software to ethical considerations and future trends. We’ll share our experiences, mistakes we’ve made, and creative discoveries that have transformed our approach to music. Think of this as your friendly companion on a journey into one of the most exciting frontiers in creative technology.

The Ultimate Guide to AI Music Generation in 2025

The Ultimate Guide to AI Music Generation in 2025 begins with understanding exactly what we’re working with. At its core, AI music generation uses machine learning algorithms—particularly neural networks—to analyze patterns in existing music and create new compositions. These systems learn from thousands or millions of songs, identifying relationships between melody, harmony, rhythm, and structure.

What makes 2025 such an exciting time is the maturity of these technologies. We’re no longer dealing with clunky tools that produce robotic-sounding melodies. Today’s AI music generators can create everything from lo-fi beats for studying to orchestral arrangements that rival professional compositions. The technology has become accessible enough that you don’t need a computer science degree to use it effectively.

The landscape includes several categories of tools: text-to-music generators where you describe what you want in plain language, stem separators that isolate individual instruments, melody generators that create hooks and riffs, and full production suites that handle everything from composition to mastering. Companies like Suno, Udio, AIVA, Amper Music, and Soundraw have created platforms specifically designed for non-technical users.

We’ve spent countless hours testing these platforms, and here’s what we’ve learned: the best results come from understanding how to communicate with AI. It’s similar to working with a highly talented but literal-minded collaborator. The more specific you are about mood, tempo, instrumentation, and style, the better your results will be.

AI Music Generation: A Composer’s New Best Friend?

Is AI music generation a composer’s new best friend? It’s a question we hear constantly, and our answer might surprise you. Rather than replacing composers, AI has become an invaluable creative partner. Think of it as an incredibly fast, endlessly patient collaborator who never gets tired of trying new ideas.

We’ve watched professional composers integrate AI into their workflows in fascinating ways. Some use it to break through creative blocks, generating dozens of melodic ideas in minutes to spark inspiration. Others employ AI for the tedious work of creating background music or variations, freeing them to focus on the most emotionally critical sections of a piece.

The relationship between human composers and AI is more symbiotic than competitive. AI excels at pattern recognition and can generate countless variations quickly, but it still lacks the lived human experience that gives music its emotional depth. When a composer writes about heartbreak, joy, or triumph, they’re drawing on personal memory and emotion. AI can mimic the musical structures associated with these feelings, but the intentionality comes from the human guiding it.

One composer we spoke with compared it to having a supercharged sketchbook. “I’ll feed the AI a four-bar motif I’m working on, ask it to generate variations, and suddenly I’m looking at 20 different directions I could take the piece,” she explained. “Some are terrible, some are interesting, and occasionally one is brilliant in a way I never would have thought of myself.”

The key is understanding AI’s strengths and limitations. It’s exceptional at:

- Generating variations on existing themes

- Creating backing tracks and accompaniment

- Exploring harmonic possibilities quickly

- Producing genre-specific compositions

- Overcoming creative blocks with fresh ideas

However, it struggles with:

- Understanding cultural context and deeper meaning

- Making intentional artistic statements

- Knowing when to break rules effectively

- Creating truly novel genres or styles

- Understanding narrative arc in longer compositions

Ethical Considerations of AI-Generated Music: Copyright and Ownership

The ethics of AI-generated music, and copyright and ownership in particular, raise some of the most complex and fast-changing questions in the field. We believe transparency is crucial here, so let’s address the elephant in the room: who owns music created by AI?

The legal landscape is still developing, but here’s what we know today. In most jurisdictions, copyright law requires human authorship for protection. This means purely AI-generated music—where a human simply pressed “generate” without creative input—may not be eligible for traditional copyright protection. However, when humans make substantial creative contributions—selecting training data, crafting prompts, curating outputs, arranging, and editing—the work likely qualifies for copyright as a human-created work that used AI as a tool.

The training data issue is equally complex. Most AI music models are trained on existing music, which raises questions about whether this constitutes fair use. While some companies have secured licensing agreements or use only public domain music for training, others operate in a legal gray area. As creators, we need to understand where our tools source their training data and what that means for our work.

We recommend following these ethical guidelines:

- Always disclose when music is AI-generated or AI-assisted, especially for commercial use

- Understand your chosen platform’s licensing terms and training data sources

- If you’re heavily editing or arranging AI output, document your human contributions

- Consider supporting platforms that compensate original artists whose work trained the AI

- Be cautious about creating music in the style of living artists without permission

- Stay informed about evolving copyright law in your jurisdiction

Some platforms, like Soundraw and AIVA’s commercial licenses, explicitly grant users rights to commercially use generated music. Others are less clear. Before using AI-generated music for anything beyond personal experimentation, read the terms of service carefully.

The ethical dimension extends beyond legal questions. We face philosophical considerations about artistic authenticity, the value of human creativity, and the potential impact on professional musicians. These aren’t questions with easy answers, but engaging with them thoughtfully is part of being a responsible creator in this new landscape.

AI Music Generation Software: A Head-to-Head Comparison

AI Music Generation Software: A Head-to-Head Comparison reveals significant differences in approach, capability, and ideal use cases. We’ve tested every major platform extensively, and here’s our honest assessment of the current landscape.

Suno has emerged as one of the most impressive text-to-music generators available. You simply describe what you want (“upbeat indie pop song about summer road trips with bright acoustic guitars and cheerful vocals”) and it generates complete songs with vocals, lyrics, and production. The quality is remarkably high, though the vocal synthesis can occasionally sound artificial on close listening. Best for: quick song ideas, generating reference tracks, and content creators needing background music.

AIVA (Artificial Intelligence Virtual Artist) specializes in cinematic and orchestral music. It offers more control than Suno, allowing you to specify key, tempo, duration, and even influence specific sections. The orchestrations are genuinely impressive, rivaling some professional work. However, there’s a learning curve. Best for: film composers, game developers, and creators needing epic or emotional instrumental pieces.

Udio competes directly with Suno but offers slightly more granular control over structure and style. We found its genre blending particularly sophisticated—it can create convincing mashups like “jazz-infused trap” or “orchestral dubstep.” The interface is intuitive, and generation is swift. Best for: experimental musicians, genre fusion projects, and creating unique sonic textures.

Soundraw takes a different approach, generating instrumental tracks that you can then customize extensively. You can adjust tempo, instruments, energy levels, and structure in real time. This gives you more control but requires more time investment. Best for: content creators needing YouTube background music, podcasters, and creators who want customizable royalty-free music.

Amper Music (now part of Shutterstock) focuses on the commercial content creation market. It’s designed for speed and simplicity, generating professional-sounding tracks in minutes. The licensing is straightforward, making it ideal for commercial projects. Best for: advertisers, video editors, and corporate content creators.

Boomy democratizes music creation by letting anyone create and release songs to streaming platforms. It’s extraordinarily simple but offers less control than other options. Best for: hobbyists, first-time music creators, and people wanting to experiment without technical knowledge.

How AI Music Generation is Revolutionizing the Music Industry

AI music generation is revolutionizing the music industry in ways that extend far beyond making music creation more accessible. We’re witnessing fundamental shifts in how music is produced, distributed, and consumed that will reshape the industry for decades to come.

The democratization of production is perhaps the most obvious revolution. Historically, recording quality music required expensive studio time, professional equipment, and trained engineers. Today, an independent artist with a laptop can generate professional-grade backing tracks, create multiple arrangement variations, and even add synthesized vocals—all for a fraction of traditional costs. This levels the playing field dramatically, allowing talent and creativity to shine regardless of financial resources.

The speed of creation has increased dramatically. Where composing a film score might once have taken weeks or months, AI can generate hours of thematically consistent music in days. This doesn’t eliminate the need for human composers but allows them to work at unprecedented scales and iterate far more rapidly. We’ve seen composers create 50 variations of a theme in an afternoon, something that would have been impractical before.

Personalization is becoming reality. Streaming services are beginning to experiment with AI-generated playlists that don’t just curate existing music but create new compositions tailored to individual listeners’ preferences, moods, and activities. Imagine a workout playlist where every song is uniquely generated to match your exact tempo preferences and energy levels throughout your exercise routine.

The advertising and content creation industries have been transformed most dramatically. Stock music libraries are increasingly supplemented or replaced by AI-generated alternatives that can be customized on demand. A video editor can now specify “30 seconds of uplifting corporate music with a key change at 20 seconds” and receive exactly that, rather than searching through thousands of library tracks hoping to find something close.

However, this revolution brings challenges. Session musicians who once provided instrumental tracks face reduced demand. Music libraries see competition from infinite AI-generated alternatives. The flooding of streaming platforms with AI-generated content raises questions about discovery and quality control. Some estimates suggest millions of AI-generated tracks are uploaded monthly, creating a signal-to-noise problem for listeners seeking human-made music.

The industry is responding with various approaches. Some platforms now require AI disclosure. Certain streaming services are creating separate categories for AI-generated music. Professional musician unions are negotiating protections for their members. The next few years will determine how these tensions resolve.

AI Music Generation for Beginners: A Step-by-Step Tutorial

AI Music Generation for Beginners: A Step-by-Step Tutorial walks you through creating your first AI-generated music piece from absolute zero knowledge. We remember our first attempts—the confusion, the excitement when something actually sounded good, and the gradual understanding of how to coax better results from these tools. Let’s save you some of that trial and error.

Step 1: Choose Your Platform

Start with Suno or Soundraw; both offer free tiers and are exceptionally beginner-friendly. Suno is better if you want complete songs with vocals, while Soundraw excels at customizable instrumental pieces. Create an account and familiarize yourself with the interface. Don’t worry about understanding everything; just click around and get comfortable.

Step 2: Define Your Vision

Before generating anything, ask yourself: What’s the purpose of this music? Is it background music for a video? A song idea you want to develop? Just experimentation? Knowing your goal helps you make better choices. Write down adjectives describing what you want: upbeat, melancholic, energetic, calm, mysterious, triumphant.

Step 3: Craft Your First Prompt

This is where the magic happens. The quality of your output directly relates to the specificity of your prompt. Instead of “make me a rock song,” try: “energetic indie rock song with jangly guitars, driving drums, and anthemic choruses, inspired by early 2000s alternative rock.” Include:

- Genre and subgenre

- Tempo (fast, medium, slow, or specific BPM if you know it)

- Mood and emotion

- Instrumentation preferences

- Reference artists or eras (optional but helpful)
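One way to keep prompts consistently specific is to assemble them from the elements listed above. The sketch below is purely illustrative: `build_prompt` and its parameter names are our own hypothetical helper, not part of Suno's, Soundraw's, or any other platform's interface. It only produces a text string that you would paste into a tool's prompt box.

```python
from typing import Optional


def build_prompt(
    genre: str,
    mood: str,
    instruments: list[str],
    tempo: Optional[str] = None,
    reference: Optional[str] = None,
) -> str:
    """Join the prompt elements into one descriptive phrase.

    Hypothetical helper for illustration; no platform requires this format.
    """
    parts = [f"{mood} {genre} song"]
    if instruments:
        parts.append("with " + ", ".join(instruments))
    if tempo:
        parts.append(f"{tempo} tempo")
    if reference:
        parts.append(f"inspired by {reference}")
    return ", ".join(parts)


prompt = build_prompt(
    genre="indie rock",
    mood="energetic",
    instruments=["jangly guitars", "driving drums", "anthemic choruses"],
    tempo="fast",
    reference="early 2000s alternative rock",
)
print(prompt)
```

Keeping the elements as separate arguments makes it easy to change one thing at a time (say, only the tempo) between generations and to save the combinations that worked.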

Step 4: Generate and Listen Critically

Hit generate and wait, usually 30 seconds to 2 minutes depending on the platform. Listen to the entire result before judging. Our biggest beginner mistake was dismissing results after the first few seconds. Sometimes the best moments come later in the composition. Listen for: Does it match the mood you wanted? Is the instrumentation appropriate? Does the structure make sense? Do any sections feel particularly strong or weak?

Step 5: Iterate and Refine

This is where beginners often stop prematurely. Your first generation is rarely your best. Try adjusting your prompt based on what worked and what didn’t. If the track was too slow, specify “fast tempo” or “140 BPM.” If the guitars were too prominent, ask for “subtle guitar with prominent synths.” Most platforms let you generate variations or extend sections. Experiment freely, but check your allowance first: free tiers often cap generations with daily or monthly credits.

Step 6: Polish Your Favorite Results

Once you have something you like, explore your platform’s editing features. Soundraw lets you adjust sections, swap instruments, and change energy levels. Suno allows you to extend songs or create variations. Even basic editing like trimming the intro or looping your favorite section can significantly improve the final result.

Step 7: Export and Use Responsibly

Download your creation in the highest quality available (usually WAV or high-bitrate MP3). Check the licensing terms before using it publicly. If you’re just learning and experimenting, you’re typically fine. For commercial use, you may need a paid license. Always credit AI involvement if you share the work publicly.

Common Mistakes to Avoid:

- Being too vague in prompts (the AI needs specificity)

- Giving up after one or two generations (iteration is essential)

- Expecting perfection immediately (there’s a learning curve)

- Ignoring licensing terms (know what you’re allowed to do)

- Not experimenting with different platforms (each has strengths)

- Forgetting to save prompts that worked well (document your successes)

The Role of AI Music Generation in Gaming and Film Soundtracks

The Role of AI Music Generation in Gaming and Film Soundtracks is already more significant than most people realize. We’ve worked with indie game developers and independent filmmakers who credit AI music generation with making their projects economically viable. The traditional approach of hiring composers for even a modest project could cost thousands or tens of thousands of dollars—a budget that many independent creators simply don’t have.

For gaming specifically, AI music generation solves a unique problem: adaptive soundtracks. Games need music that responds dynamically to gameplay—intensifying during combat, softening during exploration, and building tension during stealth sequences. Traditional soundtracks require composers to create multiple variations of themes that can layer and transition smoothly. AI can generate these variations far more efficiently, creating consistent thematic material across different intensity levels.
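To make the layering idea concrete, here is a minimal sketch of how a game might decide which variations of a theme to play at a given moment. Everything in it is an assumption for illustration: the stem names, the thresholds, and the single 0.0 to 1.0 "intensity" value are invented, and real game audio middleware handles crossfades and timing that this ignores.

```python
# Hypothetical stems for one theme, each with the intensity (0.0 to 1.0)
# at which it should start playing. Names and thresholds are made up.
STEM_THRESHOLDS = {
    "ambient_pad": 0.0,
    "strings": 0.3,
    "percussion": 0.6,
    "brass": 0.85,
}


def active_stems(intensity: float) -> list[str]:
    """Return the stems that should be audible at a given intensity."""
    level = max(0.0, min(1.0, intensity))  # clamp to the valid range
    return [name for name, start in STEM_THRESHOLDS.items() if level >= start]


for state, intensity in [("exploration", 0.2), ("stealth", 0.5), ("combat", 0.9)]:
    print(state, active_stems(intensity))
```

The stems only layer cleanly if they share key, tempo, and melodic material, which is exactly the kind of consistent variation the paragraph above describes AI generating.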

We spoke with an indie game developer who used AIVA to create the entire soundtrack for their fantasy RPG. “I gave AIVA themes for different regions, moods, and situations,” he explained. “It generated hundreds of variations that shared common melodic elements but varied in orchestration and intensity. I spent weeks curating and editing, but the foundation was AI-generated. Without it, my game would have generic royalty-free music or no budget for other development.”

Film is slightly different. While major productions still employ human composers (and likely will for the foreseeable future), the independent and corporate film worlds are embracing AI actively. Documentary filmmakers, corporate video producers, and YouTube creators use AI music generation to score their work quickly and affordably. The technology is particularly good at creating consistent underscore—the subtle background music that supports dialogue and action without drawing attention to itself.

One challenge that remains is creating music with perfect synchronization to visual events. While AI can generate appropriate moods and styles, timing specific musical moments (a crescendo exactly when the hero reveals themselves, a sudden silence at a plot twist) still requires human editing or sophisticated prompting. Some newer platforms are experimenting with video-to-music generation, analyzing visuals to create synchronized scores, but this remains an emerging capability.

The ethical considerations here are nuanced. On one hand, AI democratizes soundtrack creation for projects that could never afford human composers. On the other, it potentially reduces opportunities for emerging composers to gain experience on smaller projects. We believe the key is viewing AI as expanding the pie rather than dividing the same pie differently. Projects that use AI music likely wouldn’t have hired composers anyway; they would have used generic stock music or gone without.

AI Music Generation: Overcoming the Challenges of Creativity

Any discussion of AI music generation and creativity has to address the elephant in the room: can algorithms truly be creative? And if so, what does that mean for human creativity? These questions have occupied our minds throughout our exploration of this field.

The current consensus in cognitive science suggests that creativity involves combining existing knowledge in novel ways, recognizing patterns, breaking rules intentionally, and producing outputs that are both original and valuable. By this definition, AI demonstrates certain creative capacities—it combines musical patterns in new ways, generates novel compositions, and produces music people find valuable. However, it lacks the intentionality, emotional experience, and cultural understanding that humans bring to creative acts.

What AI does exceptionally well is solve creative problems within defined parameters. Tell it to create “a dark ambient piece that gradually transitions to hopeful,” and it will generate compelling options by drawing on patterns learned from thousands of similar transitions in existing music. What it can’t do is decide that such a transition would be meaningful in a specific cultural moment or imbue it with personal significance based on lived experience.

We’ve found the most successful approach treats AI as a creativity amplifier rather than a creativity replacement. It helps overcome specific challenges:

Breaking Creative Blocks: When you’re stuck, AI generates dozens of directions to explore. Even if you don’t use any of them directly, they often spark ideas you wouldn’t have considered. We’ve had countless moments where a mediocre AI generation contained one interesting rhythm or chord progression that became the seed for an entirely human-created piece.

Exploring Unfamiliar Territory: Want to experiment with a genre you don’t know well? AI trained on that genre can generate examples that teach you about its conventions, which you can then intentionally follow or break. We used this approach to learn about Brazilian bossa nova, generating dozens of examples to understand the rhythmic and harmonic patterns before attempting our own compositions.

Rapid Prototyping: Composers can iterate through ideas at unprecedented speed. Rather than spending hours developing an idea that ultimately doesn’t work, you can generate and evaluate multiple concepts quickly, investing deeper effort only in the most promising directions.

Overcoming Technical Limitations: Not a skilled orchestrator? AI can take your piano sketch and suggest full orchestrations. Weak at producing electronic beats? AI excels at generating layered rhythmic patterns. This doesn’t replace learning these skills, but it allows creation while you’re developing them.

The challenge isn’t making AI creative—it already is within its domain. The challenge is integrating AI creativity with human creativity in ways that elevate both. This requires understanding what each brings to the table and designing workflows that leverage their respective strengths. The human provides vision, emotion, cultural context, and intentionality. The AI provides speed, pattern recognition, variation generation, and tireless experimentation.

The Future of AI Music Generation: What to Expect in the Next 5 Years

The Future of AI Music Generation: What to Expect in the Next 5 Years is a topic that keeps us up at night—not from fear, but from excitement about the possibilities. Based on current trajectories and emerging research, we can make some educated predictions about where this technology is heading.

Real-time collaborative AI will become standard. Imagine jamming with an AI that listens to what you’re playing and generates complementary parts in real-time, responding to your musical choices instantaneously. Early versions of this exist in experimental forms, but within five years, we expect it to be as common as metronome apps are today. Musicians will practice with AI accompanists that adapt to their skill level and push them creatively.

Emotional intelligence in composition will dramatically improve. Current AI understands emotional associations with musical elements—minor keys sound sad, and fast tempos sound energetic—but this is surface-level. Future systems will better understand emotional arcs, narrative progression, and cultural context. They’ll create music that tells stories, building tension and release with genuine sophistication rather than just following formulas.

Personalized music generation at scale will transform how we consume music. Your streaming service won’t just recommend existing songs; it will generate new music specifically for you based on your unique preferences, current mood, time of day, and activity. Morning workout music subtly different from everyone else’s. Study music that adapts to your focus patterns. This isn’t replacing artist-created music but supplementing it with an infinite personalized soundtrack for your life.

Integration with other creative tools will blur boundaries between disciplines. AI that generates music from text descriptions will connect seamlessly with AI that generates images, videos, and narrative content. Creative projects will flow between modalities—an AI generates a story, derives emotional cues from that story, creates music matching those emotions, and generates visuals synchronized to the music, all from a single prompt. We’re seeing early experiments now; in five years, this will be accessible to anyone.

Hybrid human-AI instruments will emerge as a new category. These won’t be pure AI generation tools or traditional instruments, but something in between—instruments where human physical performance combines with AI-powered augmentation. Imagine a guitar where your playing style triggers AI-generated harmonic accompaniment, or a keyboard that extends your melodies into full orchestrations as you play.

Copyright and attribution systems will mature significantly. Blockchain-based solutions or similar technologies will track AI music generation, including training data sources, human contributions, and usage rights. This will resolve many current legal ambiguities and create clearer frameworks for compensation and credit. We’ll likely see “AI music registries” similar to how samples are registered today.

Education integration will revolutionize music teaching. Students will compose alongside AI tutors that not only play back their work but offer suggestions, explain theory concepts in context, and generate examples illustrating specific techniques. Learning music theory will become more intuitive as AI generates immediate examples of every concept.

The next five years won’t see AI replacing human musicians—if anything, we expect a renaissance of human musical expression as tools become more accessible. What we will see is the definition of “musician” expanding to include people who might never have participated in music creation before, armed with AI tools that translate their creative vision into sound.

AI Music Generation and Mental Wellness: Creating Personalized Soundscapes

AI Music Generation and Mental Wellness: Creating Personalized Soundscapes represents one of the most socially beneficial applications of this technology. We’ve experienced firsthand how the right music can transform mental states—calming anxiety, energizing a depressive mood, maintaining focus, or facilitating sleep. AI generation makes creating personalized therapeutic soundscapes accessible to anyone.

The link between music and mental state is well documented, though the strength of the evidence varies by technique. Certain rhythms and harmonic progressions measurably affect our nervous systems. Consistent rhythms can help regulate breathing and heart rate. Binaural beats at specific frequencies are often used for relaxation or focus, although research on their effectiveness is mixed. Major keys generally lift mood, while minor keys can facilitate emotional processing. What AI adds to this equation is personalization at scale.

Traditional music therapy involves trained therapists creating or selecting music tailored to individual clients. It’s effective but expensive and requires regular sessions. AI music generation democratizes this capability. You can generate soundscapes optimized for your specific needs whenever you need them, experimenting with different approaches until you find what works for your unique neurology.

We’ve explored various applications:

Anxiety Management: Generate calming ambient music with slow tempos (60-80 BPM matching resting heart rate), simple harmonic progressions, and nature-inspired sounds. We’ve used prompts like “calm ambient music with gentle synth pads, soft piano, and subtle rain sounds, slow tempo around 60 BPM, creating a peaceful and secure feeling” with excellent results. The AI understands these parameters and creates appropriate soundscapes.

Focus and Productivity: Music for concentration should be engaging enough to block distractions but simple enough not to become distracting itself. Lo-fi beats, minimal techno, and ambient jazz work well. Try: “downtempo lo-fi hip hop with mellow piano, soft beats, warm vinyl crackle, perfect for studying and focus, around 85 BPM.” The result provides consistent stimulation without demanding attention.

Sleep Improvement: Falling asleep to music requires very specific characteristics—extremely slow tempo (40-60 BPM), minimal melodic movement, and no surprises or dynamic changes. “Deep sleep ambient music with slow-evolving synth pads, no percussion, very gradual subtle changes, and consistent texture around 45 BPM, creating a feeling of safe floating” generates appropriate soundscapes that fade into the background as you drift off.

Mood Elevation: When experiencing a low mood, music that’s too cheerful can feel invalidating, but music that matches a depressed state can reinforce it. The solution is music that validates the current mood while gently elevating it. “Melancholic but hopeful indie folk with warm acoustic guitar and gentle building arrangement that becomes gradually more optimistic, with a medium-slow tempo around 80 BPM” creates a bridge from low mood toward more positive states.

Meditation and Mindfulness: Meditative music should create space for inner reflection rather than demanding engagement. “Minimalist meditation music with Tibetan singing bowls, very sparse gentle chimes, and long periods of silence with subtle textures, creating space for inner stillness” works beautifully for mindfulness practices.

The personalization aspect is crucial. What relaxes one person might agitate another. Someone with ADHD might need different focus music than someone without. AI generation allows infinite experimentation to discover your optimal soundscapes without spending money on albums that might not work for you.

We recommend creating a personal library of AI-generated tracks for different mental states and needs. Document what prompts worked well. Over time, you’ll develop a collection of customized therapeutic music far more effective than generic playlists because it’s specifically tuned to your responses.

AI Music Generation: A Deep Dive into Machine Learning Techniques

AI Music Generation: A Deep Dive into Machine Learning Techniques explores the technology underlying these creative tools. While you don’t need to understand the technical details to use AI music generation effectively, knowing the basics helps you understand capabilities and limitations and can improve your results.

Most current AI music generation uses neural networks—computational systems modeled loosely on how neurons in brains process information. These networks contain layers of artificial neurons that process musical data, learning patterns and relationships through training on vast datasets. Think of it like a child learning language by hearing millions of examples; the neural network learns music by analyzing millions of songs.

Recurrent Neural Networks (RNNs) and specifically Long Short-Term Memory networks (LSTMs) were early leaders in music generation. These architectures excel at sequential data—information that unfolds over time, like language or music. They can learn that certain notes typically follow others, that phrases have common lengths, and that musical sections relate to previous sections. However, they struggle with very long-term dependencies—understanding how a melody introduced two minutes ago should relate to what’s happening now.
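The idea that “certain notes typically follow others” can be shown without a neural network. The sketch below swaps in a first-order Markov chain, a much simpler technique than an RNN or LSTM, which only counts which note follows which in a toy melody we invented for this example. It shares the short-memory problem in extreme form: it looks back exactly one note, whereas an LSTM learns how much earlier context to carry forward.

```python
import random
from collections import Counter, defaultdict

# Toy training melody (note names); invented for this example.
melody = ["C", "D", "E", "C", "D", "G", "E", "C", "D", "E", "G", "C"]

# Count how often each note follows each other note.
transitions: dict[str, Counter] = defaultdict(Counter)
for current, following in zip(melody, melody[1:]):
    transitions[current][following] += 1


def generate(start: str, length: int, seed: int = 0) -> list[str]:
    """Sample a new melody by repeatedly picking a likely next note."""
    rng = random.Random(seed)
    notes = [start]
    while len(notes) < length:
        options = transitions[notes[-1]]
        if not options:  # dead end: this note never had a successor
            break
        choices, weights = zip(*options.items())
        notes.append(rng.choices(choices, weights=weights)[0])
    return notes


print(generate("C", 8))
```

Every pair of adjacent notes in the output was seen in the training melody, yet the sequence as a whole can be new. Neural models do the same kind of next-step prediction with far richer context.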

Transformers revolutionized AI music generation around 2020. Originally developed for language processing (they power ChatGPT and similar systems), transformers use attention mechanisms that let the AI simultaneously consider all parts of a musical sequence, not just what came immediately before. This allows them to maintain thematic consistency across entire compositions and understand long-range musical relationships. When a generator such as Suno produces a song whose final chorus references a melody from the introduction, that kind of long-range callback is what attention is designed to make possible.
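The idea of "considering all parts of a sequence at once" can be sketched with scaled dot-product attention for a single position. This is a simplified illustration in plain Python, not how any particular platform is implemented; the two-dimensional embeddings below are invented, and real models learn separate query, key, and value projections that are omitted here.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, mixed

# Hypothetical 2-D embeddings for four positions in a piece.
# Positions 0 and 3 are deliberately similar (an intro motif and its return).
sequence = [[1.0, 0.0], [0.0, 1.0], [0.2, 0.9], [0.9, 0.1]]

weights, context = attend(query=sequence[3], keys=sequence, values=sequence)
print([round(w, 3) for w in weights])
```

When position 3 looks back over the sequence, the similar material at position 0 receives more weight than the intervening positions 1 and 2. Similarity drives the weighting in this sketch, not distance.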

Generative Adversarial Networks (GANs) take a different approach. They use two neural networks—a generator and a discriminator—that compete against each other. The generator creates music, while the discriminator tries to determine whether it’s AI-generated or human-created. The generator improves by learning to fool the discriminator. This adversarial process pushes the generator toward output that is hard to tell apart from human-made music rather than toward copying training examples, though GAN training is known to be unstable and can collapse onto a narrow range of outputs.

Variational Autoencoders (VAEs) compress music into compact representations (called the latent space) and then generate new music by decoding different points in this space. Imagine compressing all possible jazz music into a multi-dimensional space where nearby points represent similar styles. Moving through this space generates new jazz pieces that interpolate between different styles. This approach is particularly good for creating variations and exploring musical territories between different genres.
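"Moving through this space" usually means walking along a line between two latent points and decoding each stop. The sketch below shows only the interpolation step. The three-dimensional codes are hypothetical: a real VAE would produce much longer vectors from its encoder and turn each point back into music with its decoder, neither of which is modeled here.

```python
def interpolate(z_start, z_end, steps):
    """Return `steps` evenly spaced points on the line from z_start to z_end."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # 0.0 at the start, 1.0 at the end
        path.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path

# Hypothetical latent codes for two pieces (names are illustrative only).
z_ballad = [0.0, 1.0, -1.0]
z_swing = [2.0, 0.0, 1.0]

path = interpolate(z_ballad, z_swing, steps=5)
for z in path:
    print([round(x, 2) for x in z])
```

Decoding each point on the path would give a series of pieces that drift gradually from the first style toward the second.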

Diffusion models are the newest frontier, adapted from image generation systems like Stable Diffusion and DALL-E 2. They work by gradually adding random noise to training examples, then learning to reverse this process—starting from noise and gradually refining it into music. These models can generate exceptionally high-quality audio and respond well to text descriptions, which is why many recent text-to-music systems use diffusion.
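The "gradually adding random noise" half of the process is simple enough to sketch directly. The example below corrupts a toy sine wave using a linear noise schedule. The schedule values (1e-4 to 0.02 over 1000 steps) are an assumption borrowed from common practice in image diffusion work, not something any music platform is confirmed to use, and the learned reverse (denoising) network is not shown.

```python
import math
import random

def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative fraction of the original signal variance kept after each step."""
    kept, schedule = 1.0, []
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        kept *= 1.0 - beta
        schedule.append(kept)
    return schedule

def add_noise(signal, alpha_bar, rng):
    """Jump straight to a noise level: sqrt(a) * x + sqrt(1 - a) * noise."""
    return [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)
        for x in signal
    ]

rng = random.Random(0)
# Toy "audio": one cycle of a sine wave sampled at 64 points.
clean = [math.sin(2 * math.pi * n / 64) for n in range(64)]

schedule = alpha_bar_schedule(num_steps=1000)
slightly_noisy = add_noise(clean, schedule[10], rng)
mostly_noise = add_noise(clean, schedule[-1], rng)
print(f"signal kept after 11 steps: {schedule[10]:.4f}")
print(f"signal kept after 1000 steps: {schedule[-1]:.6f}")
```

Training teaches a network to undo one small step of this corruption at a time. Generation then starts from pure noise and applies that learned denoising repeatedly, which is one reason diffusion is computationally heavy.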

Most platforms use hybrid approaches, combining multiple techniques. They might use transformers for composition and structure, diffusion models for audio generation, and specialized networks for specific elements like vocals or drum patterns. The training process typically involves:

- Data collection: Gathering millions of songs representing diverse genres and styles

- Preprocessing: Converting audio to formats the AI can learn from (spectrograms, MIDI, symbolic notation)

- Training: Exposing the neural network to this data repeatedly, adjusting internal parameters to minimize errors

- Fine-tuning: Additional training on specific styles or characteristics

- Evaluation and refinement: Testing outputs and improving the system
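The training step in the list above ("adjusting internal parameters to minimize errors") can be shown at miniature scale. This sketch fits a model with a single parameter by gradient descent. The task (learning that every target pitch is the input transposed up two semitones) and all names are invented for illustration; real systems adjust millions or billions of parameters with the same basic loop.

```python
# Toy data: MIDI pitches, each target is the input transposed up a whole tone.
inputs = [60, 62, 64, 65, 67]
targets = [p + 2 for p in inputs]

def train(inputs, targets, learning_rate=0.1, epochs=200):
    """Fit prediction = x + offset by gradient descent on mean squared error."""
    offset = 0.0  # the single "internal parameter"
    n = len(inputs)
    for _ in range(epochs):
        errors = [(x + offset) - y for x, y in zip(inputs, targets)]
        gradient = 2.0 * sum(errors) / n  # derivative of MSE with respect to offset
        offset -= learning_rate * gradient  # step in the direction that reduces error
    return offset

learned_offset = train(inputs, targets)
print(round(learned_offset, 3))
```

Each pass measures how wrong the current predictions are and nudges the parameter to shrink that error, so the learned offset converges toward the transposition hidden in the data.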

Understanding these techniques helps explain why AI music generation works the way it does. Transformers excel at maintaining thematic coherence but might generate repetitive patterns. GANs produce creative results but can be unstable. Diffusion models create high-quality audio but require significant computational resources. Knowing these trade-offs helps you choose the right tool for your needs and set appropriate expectations.

Case Studies: Successful Applications of AI Music Generation

Case Studies: Successful Applications of AI Music Generation ground our discussion in real-world results. We’ve researched and interviewed creators using AI music generation across various fields, and their stories illustrate both the technology’s potential and practical considerations.

Case Study 1: Indie Game Developer—“Echoes of the Forgotten”

Sophie Chen, a solo developer in Singapore, created an atmospheric puzzle game called “Echoes of the Forgotten” using AIVA for the entire soundtrack. With a budget under $1,000 for all audio, hiring a composer wasn’t feasible. “I needed about two hours of original music with consistent themes but varying moods,” Sophie explained. “I spent three weeks learning AIVA, generating hundreds of pieces, and curating about 50 tracks that fit perfectly.”

Sophie’s process involved creating “seed” themes by humming melodies and using music notation software to convert them to MIDI, then feeding these to AIVA as starting points. “The AI maintained my thematic material but created professional orchestrations I could never have done myself. I made extensive edits—cutting sections, layering multiple generations, adjusting transitions—so the final soundtrack was collaborative between me and the AI.”

The game received acclaim for its “hauntingly beautiful score,” with several reviewers specifically praising the music. Sophie estimates she saved $10,000-15,000 compared to hiring a composer while achieving results that served her vision perfectly.

Case Study 2: Corporate Video Producer—Streamlined Workflow

Marcus Thompson produces marketing videos for tech startups. Before discovering AI music generation, he spent hours searching stock music libraries for each project, often settling for tracks that were “close enough” but never perfect. “Clients would say, ‘Make it more energetic’ or ‘The mood shifts at 1:30,’ and I’d have to find an entirely different track,” he said.

Now Marcus uses Soundraw, generating custom tracks that precisely match each video’s requirements. “I can specify exact duration, adjust energy at specific timestamps, and generate variations until clients are happy. What used to take hours now takes 30 minutes.” He estimates this efficiency gain allows him to take 30% more projects annually while reducing stress from deadline pressure.

Critically, Marcus fully discloses AI use to clients and adjusts pricing to reflect reduced music licensing costs. “Transparency is crucial. Clients appreciate the honesty and the cost savings I pass along to them.”

Case Study 3: Music Therapist—Personalized Treatment

Dr. Elena Rodriguez, a licensed music therapist in Barcelona, incorporates AI-generated music into her practice. “Every client responds differently to musical elements,” she explained. “One client finds piano soothing, another finds it agitating. Traditional approaches meant limited options from my existing music library.”

Now Dr. Rodriguez generates personalized soundscapes for each client during their first sessions, adjusting tempo, instrumentation, harmonic complexity, and other parameters based on their responses. “I can create exact matches for each client’s therapeutic needs. One client needed music that was calming but not sedating—high in mid-range frequencies, consistent but not repetitive, with subtle unpredictable elements to maintain engagement. I used Amper to generate this precisely.”

She emphasizes that AI supplements rather than replaces her therapeutic expertise. “The AI generates raw material. My training and intuition guide what I generate, how I present it, and how I integrate it into treatment. The technology amplifies my effectiveness.”

Case Study 4: YouTube Content Creator—Consistent Brand Identity

James Wilson runs a popular science education channel with 2 million subscribers. Early on, he used various royalty-free tracks, creating inconsistent auditory branding. “Every video sounded different. Viewers had no sonic association with my channel.”

Using AI generation, James developed a signature sound—upbeat, curious, moderately complex electronic music with consistent melodic motifs. “I generated about 100 tracks over a month, all variations on my core theme. Now every video has unique music that’s clearly ‘mine.’ This was impossible before AI—commissioning this much original music would have cost more than I earn.”

The consistency strengthened his brand identity noticeably. Viewer retention metrics improved, and comments frequently mention how much people enjoy his music. “It’s become part of my channel’s personality.”

These case studies share common themes: AI music generation solving problems that traditional approaches couldn’t address economically, extensive human curation and editing of AI outputs, transparency about AI use, and integration with rather than replacement of human expertise. Success comes not from simply pressing “generate” but from understanding how to guide AI toward your specific vision.

AI Music Generation: The Impact on Music Education

AI Music Generation: The Impact on Music Education is fundamentally reshaping how we teach and learn music. As educators and lifelong learners ourselves, we’re witnessing this transformation firsthand and believe it offers tremendous potential alongside some legitimate concerns that warrant thoughtful attention.

The most obvious benefit is democratizing composition education. Traditionally, learning composition required years of studying theory, harmony, orchestration, and form before you could create music that actually sounded good. This steep learning curve discouraged many potential composers. AI generation inverts this model—students can create professional-sounding music from day one, then gradually understand the theoretical principles underlying what they’re creating.

We’ve seen this approach work beautifully. A high school student with no theory knowledge can generate an orchestral piece, then analyze it with their teacher: “Why does this chord progression work? What makes this transition effective? How is the orchestration balanced?” They’re learning from successful examples they created, which is intrinsically motivating. The student feels like a composer immediately rather than spending years on exercises before attempting real composition.

AI also enables instant feedback loops. A student learning about chord progressions can generate dozens of examples in minutes, hearing how different progressions affect mood and flow. Someone studying orchestration can input a piano sketch and hear multiple orchestration approaches, developing their ear for instrumental color much faster than traditional methods allow. The technology accelerates the pattern recognition that underlies musical understanding.

However, we must acknowledge legitimate concerns. There’s a risk that students might rely on AI without developing fundamental skills. Understanding music theory, ear training, and instrumental technique remain crucial for musical mastery. AI should supplement traditional education, not replace it. A student who can only create music by prompting AI hasn’t developed the deep musical understanding that comes from grappling with theory and practice.

The balance we advocate is using AI as a creative amplifier while maintaining rigor in fundamentals. Students should:

- Learn traditional theory and composition techniques

- Use AI to explore these concepts through immediate, high-quality examples

- Analyze AI-generated music to understand why it works or doesn’t

- Modify and improve AI outputs, developing critical listening skills

- Create music both with and without AI assistance

Progressive music schools are developing curricula incorporating AI while maintaining traditional foundations. Students learn piano and theory as always but also learn to use AI composition tools as part of their creative toolkit. They study Bach chorales and also analyze how transformer models learn Bach’s style. This hybrid approach produces musicians who understand both traditional craft and modern technology.

Instrumental performance education faces different challenges and opportunities. AI-generated accompaniment tracks help students practice more effectively. A violinist learning a concerto can practice with an AI-generated orchestra that adjusts the tempo to their pace. A jazz student can jam with an AI rhythm section that responds to their playing. This provides practice opportunities that were previously only available in expensive lessons or ensemble settings.

Music appreciation courses are being transformed by AI’s ability to generate examples illustrating any concept instantly. Teaching about bebop? Generate examples in seconds. Comparing Baroque and Classical period styles? Create side-by-side examples highlighting differences. This makes abstract concepts concrete and allows exploring musical territory that recorded examples don’t adequately cover.

The long-term impact remains uncertain, but we’re optimistic that thoughtful integration of AI into music education will produce a generation of musicians who are technically skilled, theoretically knowledgeable, and fluent in using technology creatively—musicians prepared for a future where human creativity and AI capability combine in ways we’re only beginning to imagine.

AI Music Generation for Marketing and Advertising: Creating Unique Jingles

AI Music Generation for Marketing and Advertising: Creating Unique Jingles addresses one of the most commercially successful applications of this technology. Having worked with several advertising professionals and marketing teams, we’ve seen how AI music generation solves specific pain points in commercial content creation while opening creative possibilities previously beyond reach.

The traditional process of commissioning advertising music was expensive and time-consuming. Agencies hired composers or licensed existing tracks, often spending thousands per project. Revisions required additional fees and delays. For small businesses and startups, professional advertising music was often completely unaffordable, forcing them to use generic stock music that did nothing to strengthen brand identity.

AI generation transforms this equation entirely. A marketing team can now generate hundreds of jingle variations in hours, each precisely tailored to their brand requirements. More importantly, they can iterate rapidly based on testing and feedback without incurring additional costs. If focus groups respond better to a slightly faster tempo or different instrumentation, generating new versions takes minutes rather than waiting days for a composer’s revisions.

The creative possibilities are particularly exciting. Brands can develop unique sonic identities without massive budgets. A small coffee shop can have custom music for their social media that’s distinctly theirs. A startup can create a memorable audio logo. A regional business can commission culturally appropriate music reflecting their community. AI democratizes professional audio branding.

We’ve observed several effective approaches:

Sonic Logo Development: Short, memorable musical phrases (3-5 seconds) that embody brand identity. Prompt AI with brand attributes—”energetic, innovative, trustworthy tech company”—and generate dozens of options. Test with target audiences and refine. The best sonic logos are simple, distinctive, and emotionally resonant. Think of Intel’s famous five-note bong or McDonald’s “I’m lovin’ it.”

Campaign-Specific Themes: Generate music that matches specific campaign messaging. Holiday promotions need festive music; summer campaigns need bright, energetic tracks; premium products benefit from sophisticated, minimalist compositions. AI can create thematically appropriate music at scale, allowing different music for every platform and audience segment.

Dynamic Audio Content: More advanced applications involve generating music that adapts to context. Imagine Instagram ads where the music subtly changes based on the time of day the viewer sees it, or podcast ads with music reflecting the podcast’s genre. This contextual personalization is becoming feasible with AI generation.

Localization: Global brands can generate culturally appropriate music for different markets without hiring composers familiar with each culture’s musical traditions. While this requires sensitivity and often human review, AI trained on diverse musical traditions can create culturally respectful variations of brand themes.

However, ethical considerations are paramount. We’ve seen problematic examples where brands generate music obviously derivative of popular songs or specific artists’ styles without proper consideration of copyright or artistic respect. Our recommendations:

- Never prompt AI to create music “in the style of [specific living artist]” without their permission

- Be transparent about AI use in your advertising

- Consider working with human musicians for high-profile campaigns while using AI for less critical content

- Ensure generated music doesn’t accidentally copy existing works (run through audio matching services)

- Respect cultural traditions when generating music from cultures not your own

The licensing considerations are also crucial. Most AI music platforms offer commercial licenses, but terms vary significantly. Soundraw and Mubert offer straightforward commercial licensing. Others restrict usage or require attribution. Always read terms carefully before using AI music in advertising.

One advertising creative director we interviewed summed it up: “AI music generation hasn’t replaced our audio production team, but it’s dramatically increased our output and creativity. We use it for initial concepts, client presentations, A/B testing variations, and smaller campaigns. For major brand launches, we still work with human composers. The key is knowing when to use which approach.”

AI Music Generation: Exploring Different Musical Genres

AI Music Generation: Exploring Different Musical Genres reveals both the remarkable versatility of current systems and their limitations. We’ve systematically tested how well various platforms handle different musical styles, and the results are illuminating about both where the technology excels and where human musicians remain irreplaceable.

Electronic Music and EDM: This is where AI truly shines. The structured, loop-based nature of electronic music aligns perfectly with AI’s pattern recognition strengths. Generating convincing techno, house, dubstep, or ambient electronic music is remarkably easy. Platforms like Soundraw and Amper produce professional-quality electronic tracks that could easily pass for human-made. The repetitive structures and synthesized sounds play to AI’s strengths.

Pop and Rock: Mainstream pop and rock work surprisingly well, particularly instrumental arrangements. Suno and Udio can generate catchy hooks, guitar riffs, and full band arrangements that sound genuinely radio-ready. Vocals are improving rapidly but can still sound artificial on close listening. The formulaic structure of much popular music—verse, chorus, verse, chorus, bridge, chorus—is something AI handles effortlessly because these patterns are well-represented in training data.

Classical and Orchestral: AIVA specializes in this genre and produces impressive results. Full orchestral arrangements with appropriate voice leading, dynamic contrast, and emotional arc are achievable. However, truly innovative classical composition—music that breaks conventions meaningfully—remains difficult. AI generates convincing “classical-style” music but rarely produces something that would genuinely advance the art form. For film scores and background orchestral music, it’s excellent. For concert hall premieres, human composers still reign.

Jazz: This is where things get interesting. AI can generate stylistically appropriate jazz, but authentic jazz is about improvisation, conversation between musicians, and sophisticated harmonic substitution. AI-generated jazz often feels technically correct but lacks the spontaneity and risk-taking that defines the genre. It’s fine for background jazz in a coffee shop but doesn’t capture the magic of Miles Davis taking chances that sometimes fail gloriously.

Folk and Acoustic: Simple folk music works well—AI generates convincing campfire guitar songs and traditional folk melodies. However, the cultural specificity of folk traditions means AI can sound authentic on a surface level while missing subtle regional characteristics that would be obvious to people immersed in those traditions. Use with cultural sensitivity and ideally with review from people from the relevant tradition.

Hip-Hop and Rap: Instrumental beats are a strength—AI produces impressive boom-bap, trap, and experimental hip-hop instrumentals. However, rap vocals remain problematic. While some platforms can generate rap vocals, they often lack the rhythmic precision, wordplay, and cultural authenticity that define great rap. The instrumental track might be fire, but the vocals need human intervention.

World Music: This is where we see AI’s current limitations most clearly. The training data for most systems heavily emphasizes Western music, particularly American and European styles. Generating authentic gamelan, qawwali, flamenco, or West African drumming is challenging. The AI might produce something that sounds vaguely like the target style but misses crucial details. This will improve as training data diversifies, but currently requires caution and cultural sensitivity.

Experimental and Avant-Garde: Interestingly, some AI systems excel at experimental music. Since the “rules” are loose or nonexistent, AI can create genuinely interesting ambient, drone, or abstract electronic music. We’ve generated pieces that musicians familiar with experimental music found genuinely compelling and creative. The lack of conventional structure means AI isn’t constrained by expectations.

Country and Americana: Results are mixed. The melodic and harmonic structures work well, but capturing the authentic vocal delivery, lyrical storytelling, and cultural specificity of country music is difficult. Instrumental country music works better than vocal tracks. You can get something that sounds like country, but it might lack the emotional authenticity that defines the genre.

Our recommendation: start by generating music in genres where AI excels—electronic, pop, orchestral—to build confidence and understanding. As your skills improve, experiment with more challenging genres, always listening critically and respecting the cultural contexts of musical traditions you’re exploring. And remember: AI is a tool for exploration and creation, not a replacement for deep engagement with musical traditions and the cultures they come from.

AI Music Generation: Customizing Music to Fit Specific Moods and Emotions

AI Music Generation: Customizing Music to Fit Specific Moods and Emotions is perhaps the most practical skill for anyone using these tools. The difference between AI-generated music that perfectly serves your purpose and music that falls flat often comes down to how precisely you specify emotional qualities. We’ve developed prompting strategies through extensive trial and error that dramatically improve emotional accuracy.

Understanding how to communicate mood requires knowing which musical elements create which emotional effects. Here’s what we’ve learned:

Tempo and Energy: This is your primary mood control. Slow tempos (60-80 BPM) create calm, contemplative, or melancholic moods. Medium tempos (90-110 BPM) feel comfortable and neutral. Fast tempos (120-160+ BPM) generate excitement, energy, or tension. But tempo alone isn’t enough—a slow tempo with intense instrumentation creates brooding tension rather than calm.

Harmonic Content: Major keys generally sound happy, bright, and optimistic. Minor keys sound sad, serious, or mysterious. But modal music (using modes like Dorian or Mixolydian) creates more nuanced emotions—Dorian mode sounds melancholic but hopeful; Lydian sounds dreamy and ethereal. Most AI systems understand “major,” “minor,” and common modes if you specify them.

Instrumentation: Acoustic instruments generally feel warm and intimate. Electronic synthesizers can feel futuristic, cold, or otherworldly depending on timbre. Strings convey elegance or emotion. Brass sounds bold or triumphant. Woodwinds feel playful or pastoral. Piano works for nearly any mood depending on playing style. Specify instruments carefully in prompts.

Texture and Density: Sparse arrangements with few instruments feel intimate or lonely. Dense, layered arrangements feel rich, complex, or overwhelming. Use this strategically—“minimalist piano with occasional subtle strings” creates a very different mood than “full orchestral arrangement with layered strings, brass, and percussion.”

Dynamic Range: Music with extreme dynamics (quiet to very loud) feels dramatic and emotional. Consistent volume feels more stable but potentially monotonous. “Gentle build from quiet intimate beginning to powerful emotional climax” tells AI to use dynamic contrast meaningfully.

Rhythm and Pattern: Regular, predictable rhythms feel stable and comfortable. Syncopation and unexpected rhythms create tension or excitement. Complete lack of clear rhythm feels ambient or floating. “Steady driving rhythm” versus “floating ambient with minimal rhythm” creates entirely different experiences.

Here are prompting templates we use for specific emotional goals:

For Calm and Relaxation: “Slow tempo ambient music around 60 BPM, soft sustained synth pads, gentle piano melody, minimal percussion, major key, very gradual changes, creating a peaceful meditative atmosphere.”

For Energetic Motivation: “Upbeat inspiring pop-rock, fast tempo around 130 BPM, bright acoustic and electric guitars, driving drums, major key, building arrangement that grows in intensity, anthemic feeling.”

For Melancholic Reflection: “Slow contemplative piece in minor key, 70 BPM, solo piano with subtle strings, sparse arrangement, gentle dynamics, bittersweet and introspective mood, allowing space for reflection.”

For Tense Suspense: “Dark atmospheric music, slow irregular rhythm, minor key with dissonant harmonies, deep bass, sharp staccato strings, building tension, cinematic suspenseful feeling.”

For Joyful Celebration: “Bright cheerful music in a major key, medium-fast tempo around 120 BPM, acoustic guitars, hand percussion, whistling melody, uplifting spirit, feels like a perfect sunny day celebration.”

For Focused Concentration: “Minimal electronic music for focus, consistent 90 BPM rhythm, simple repeating patterns, subtle variations maintaining interest without distraction, balanced frequencies avoiding extremes.”

For Nostalgic Warmth: “Warm vintage-sounding indie folk, medium tempo 85 BPM, acoustic guitar, soft vocals, gentle drums, major key with occasional minor touches, analog warmth, feels like treasured memories.”

Advanced technique: Specify emotional progression across the track. “Begins melancholic and introspective, gradually introduces hopeful elements around 90 seconds, and builds to confident and inspiring by the end.” This creates an emotional journey rather than a static mood, which is far more engaging for longer pieces.

We also recommend creating mood playlists by generating 10-20 variations on each emotional theme you commonly need. Save successful prompts. Over time, you’ll build a personal library of AI-generated music precisely tailored to your emotional needs, far more effective than trying to find the right mood in existing music libraries.
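One lightweight way to "save successful prompts" is a small JSON file you append to after each session. The sketch below is only one possible layout. The field names, the 1-to-5 rating scale, and the file name are our own assumptions, and the prompts are shortened versions of the templates above.

```python
import json
import tempfile
from pathlib import Path

def save_prompt(library_path, mood, prompt, rating, notes=""):
    """Append one record to a JSON prompt library, creating the file if needed."""
    path = Path(library_path)
    records = json.loads(path.read_text(encoding="utf-8")) if path.exists() else []
    records.append({"mood": mood, "prompt": prompt, "rating": rating, "notes": notes})
    path.write_text(json.dumps(records, indent=2), encoding="utf-8")

def best_prompts(library_path, mood, min_rating=4):
    """Return saved prompts for a mood whose rating is at least min_rating."""
    records = json.loads(Path(library_path).read_text(encoding="utf-8"))
    return [r["prompt"] for r in records if r["mood"] == mood and r["rating"] >= min_rating]

# Demo in a throwaway directory so nothing is left behind.
with tempfile.TemporaryDirectory() as folder:
    library = Path(folder) / "prompt_library.json"
    save_prompt(library, "calm", "Slow tempo ambient music around 60 BPM, soft sustained synth pads", 5)
    save_prompt(library, "calm", "Fast ambient music with heavy drums", 2, notes="too busy")
    save_prompt(library, "focus", "Minimal electronic music for focus, consistent 90 BPM rhythm", 4)
    calm_favorites = best_prompts(library, "calm")

print(calm_favorites)
```

In everyday use you would point `library_path` at a permanent file instead of a temporary folder, and use the notes field to record why a prompt worked or failed.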

The key insight: emotions aren’t single attributes but combinations of multiple musical elements. “Happy” music might be fast, in a major key, and with bright instrumentation. But “contentedly happy” is medium tempo, major key, and warm instrumentation. “Ecstatically happy” is very fast, in a major key, and fully arranged. The more precisely you specify the combination of elements, the more accurately the AI matches your intended emotion.
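Treating an emotion as a combination of elements maps naturally onto a small data structure. The sketch below encodes the three "happy" variants from the paragraph above and renders each as a prompt string. The class name, field names, and output wording are illustrative assumptions, so adapt the phrasing to whatever your chosen platform responds to best.

```python
from dataclasses import dataclass

@dataclass
class MoodSpec:
    """One emotion expressed as a combination of musical elements."""
    tempo: str
    key: str
    instrumentation: str

    def to_prompt(self, genre="instrumental music"):
        return f"{genre}, {self.tempo} tempo, {self.key} key, {self.instrumentation}"

# The three variants of "happy" described above.
MOODS = {
    "happy": MoodSpec("fast", "major", "bright instrumentation"),
    "contentedly happy": MoodSpec("medium", "major", "warm instrumentation"),
    "ecstatically happy": MoodSpec("very fast", "major", "full arrangement"),
}

for name, spec in MOODS.items():
    print(f"{name}: {spec.to_prompt()}")
```

Changing one field at a time and regenerating is a simple way to hear which element is actually responsible for a shift in mood.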

AI Music Generation: Integrating AI with Traditional Instruments

AI Music Generation: Integrating AI with Traditional Instruments explores hybrid approaches where human performance and AI generation collaborate directly. This is one of the most exciting frontiers in music technology—not replacing traditional musicianship but augmenting it in ways that expand creative possibilities.

We’ve experimented extensively with integration workflows, and the possibilities are genuinely thrilling. Imagine playing guitar and having AI generate complementary bass lines, drum patterns, and harmonic accompaniment in real time. Or composing a string quartet where you write the violin part and AI generates the viola, cello, and second violin parts that properly complement your melody. These scenarios are becoming a practical reality.

Method 1: AI as Accompanist

Record yourself playing an instrument or singing, then feed this recording to AI music generation platforms as a “seed” or reference. Many platforms now accept audio input and generate complementary parts. We’ve recorded simple piano melodies and had AI generate full band arrangements, orchestral backings, or electronic production around them. The AI analyzes your timing, key, and harmonic content, creating parts that musically fit your performance.

The trick is specificity in prompts: “Generate an upbeat indie rock arrangement with drums, bass, and rhythm guitar accompanying this piano melody, maintaining the exact tempo and key, energetic but leaving space for the piano to shine.” This guides the AI to support rather than overwhelm your original performance.

Method 2: AI-Generated Stems as Starting Point

Reverse the process—generate full tracks with AI, then extract individual instrument stems and re-record specific parts with real instruments. For example, generate an electronic track, export the bass line as MIDI, then re-record it on an actual bass guitar. The rhythm and notes came from AI, but the performance is human, combining AI’s composition capabilities with human expression and nuance.

We’ve used this for live performance preparation. Generate backing tracks with AI, then practice performing lead parts over them. For solo performers, this creates full-band sounds without requiring multiple musicians. The authenticity comes from the live instrument cutting through the AI-generated backing.

Method 3: Hybrid Composition

Compose interactively with AI, trading ideas back and forth. Play a phrase on your instrument, record it, feed it to AI asking for variations or complementary ideas, evaluate the results, incorporate elements you like into your next played phrase, and repeat. This creates a genuine dialogue between human and AI musicianship.

We’ve found this approach incredible for overcoming creative blocks. When stuck, generate AI variations on what you’ve played. Even if none are exactly right, they spark ideas you wouldn’t have considered. One musician we interviewed described it as “having an infinite number of collaborators who never judge your ideas and are always willing to try something new.”

Method 4: AI-Enhanced Recording and Production

Use AI not for composition but for production enhancement. AI can separate stems from your recordings (isolating vocals, drums, bass, etc.), allowing you to process them individually even if recorded together. AI can generate harmony vocals from your lead vocal, create layered string sections from a simple violin recording, or add subtle atmospheric elements that enhance your original performance.

Some practical workflows we recommend:

For Solo Instrumentalists: Generate backing tracks in your genre, practice performing with them, record your performance, use AI to enhance or polish the backing track based on your actual performance tempo and feel, then mix your live recording with the refined AI backing.

For Songwriters: Record rough demos of your songs with simple accompaniment, use AI to generate full production-quality arrangements, evaluate different arrangement styles, choose the best elements from multiple AI generations, record final vocals and lead instruments over the AI backing, and polish the final mix.

For Composers: Sketch ideas at piano or guitar, input sketches to AI for orchestration or arrangement suggestions, evaluate AI suggestions for voice leading, balance, and musicality, revise the AI output (AI usually gets 70-80% right), and finalize the score incorporating both AI suggestions and human refinements.

For Producers: Use AI to generate variation ideas on client compositions, create reference tracks showing different production approaches, accelerate the ideation phase of production, and then execute final production with human performance and mixing expertise.

The philosophy underlying all these approaches: AI handles what it does well (pattern generation, rapid iteration, and technical execution), while humans contribute what they do well (emotional intention, aesthetic judgment, cultural context, and nuanced expression). Neither is complete without the other.

We’ve noticed that musicians who successfully integrate AI share certain mindsets: they view technology as a tool rather than a threat, maintain curiosity about new approaches, practice active listening and critical evaluation, stay grounded in fundamental musicianship, and remain open to unexpected creative directions. If you bring this mindset to integrating AI with traditional instruments, you’ll discover creative possibilities you never imagined.

AI Music Generation: Open Source Projects and Communities

AI Music Generation: Open Source Projects and Communities offer powerful alternatives to commercial platforms for those willing to invest time in learning. We’ve explored numerous open-source options, and while they require more technical knowledge, they provide unmatched flexibility, transparency, and community support. Plus, they’re free.

Magenta (Google/TensorFlow): This is the flagship open-source AI music project, developed by a research team at Google. It includes numerous models for melody generation, drum pattern creation, expressive performance (Performance RNN), and latent-space interpolation (MusicVAE). We’ve used MusicVAE extensively: it creates smooth interpolations between different musical styles, allowing you to explore the space between, say, baroque and techno.

Magenta requires Python knowledge and familiarity with machine learning concepts, but the documentation is excellent. The community is active on GitHub and Discord, with members sharing trained models, tutorials, and creative projects. If you’re comfortable with code, Magenta provides the deepest understanding of how AI music generation actually works.
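The interpolation idea can be illustrated without Magenta itself. What follows is only a minimal sketch, assuming each musical style has already been encoded as a vector of numbers (a "latent code"). The function name `interpolate_latents` and the three-number vectors are hypothetical and are not part of Magenta’s API. The sketch uses plain linear interpolation, which may differ from what a given model does internally.

```python
def interpolate_latents(start, end, steps):
    """Return `steps` vectors moving linearly from `start` to `end`."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    if len(start) != len(end):
        raise ValueError("vectors must have the same length")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # 0.0 at the first vector, 1.0 at the last
        path.append([(1 - t) * a + t * b for a, b in zip(start, end)])
    return path


# Made-up latent codes standing in for two encoded styles (hypothetical values).
baroque = [0.0, 1.0, -2.0]
techno = [4.0, -1.0, 2.0]

for vector in interpolate_latents(baroque, techno, 5):
    print(vector)
```

In a real model, each intermediate vector would then be decoded back into notes, which is where the "space between" two styles becomes audible.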

MuseNet (OpenAI): While technically not fully open-source, MuseNet is freely accessible and represents some of the most sophisticated music generation research. It can generate compositions with up to 10 different instruments across various styles. The web interface is simple: select instruments and styles, and MuseNet generates a piece. It’s trained on MIDI files from diverse genres and can create surprisingly coherent multi-instrument pieces.

We’ve used MuseNet for generating MIDI sketches that we then humanize and refine in DAWs. The generated MIDI is a fantastic starting point for further development.
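"Humanizing" generated MIDI usually means nudging note timings and velocities slightly so the result sounds less mechanical. The sketch below shows that idea under stated assumptions: notes are plain dictionaries with `start` (in beats), `pitch`, and `velocity` keys, and `humanize` is a hypothetical helper, not a feature of MuseNet or of any particular DAW.

```python
import random


def humanize(notes, timing_jitter=0.02, velocity_jitter=8, seed=None):
    """Return a copy of `notes` with small random timing and velocity changes."""
    rng = random.Random(seed)  # a seed makes the result repeatable
    result = []
    for note in notes:
        # Shift the start time a little, but never before beat 0.
        start = max(0.0, note["start"] + rng.uniform(-timing_jitter, timing_jitter))
        # Vary loudness, keeping it inside the MIDI velocity range 1-127.
        velocity = note["velocity"] + rng.randint(-velocity_jitter, velocity_jitter)
        velocity = min(127, max(1, velocity))
        result.append({**note, "start": start, "velocity": velocity})
    return result


# A perfectly quantized C major arpeggio (made-up example data).
quantized = [
    {"start": 0.0, "pitch": 60, "velocity": 100},
    {"start": 1.0, "pitch": 64, "velocity": 100},
    {"start": 2.0, "pitch": 67, "velocity": 100},
]

for note in humanize(quantized, seed=42):
    print(note)
```

The jitter amounts here are arbitrary starting points. In practice you would tune them by ear.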

Jukebox (OpenAI): This is one of the most ambitious open-source music generation projects, generating raw audio, including vocals, in various genres. The results are remarkable, but the computational requirements are enormous (it requires powerful GPUs). We’ve experimented with Jukebox on cloud computing platforms, and when it works, it’s magical. The vocals are still noticeably synthetic, but the overall musicality is impressive.

For now, Jukebox is better suited to researchers and experimenters than to practical music creation, but it demonstrates where the technology is heading.

Music Transformer: This model, based on the transformer architecture, excels at generating expressive performance MIDI. Rather than just generating notes, it includes dynamics, timing variations, and pedaling, the nuances that make computer-generated music sound more human. We’ve used Music Transformer to generate piano performances that genuinely sound performed rather than programmed.

Riffusion: A newer project that generates music by creating spectrogram images and then converting them to audio. It’s fascinating because you can literally see the music being created as images. Riffusion is accessible through web interfaces and is relatively easy to experiment with. Results are good for electronic and ambient music but less consistent for complex arrangements.

Dadabots: This project focuses on generating death metal and other extreme music genres using neural networks trained on specific artists’ music (with permission). It’s been streaming AI-generated death metal 24/7 for years. While niche, it demonstrates how AI can capture even complex, unconventional musical styles with sufficient training.

Community Resources:

Reddit Communities: r/aialley, r/Magenta, and r/MachineLearning have active AI music generation discussions, sharing techniques, examples, and troubleshooting.

Discord Servers: Several active Discord communities focus on AI music generation, offering real-time help, collaboration opportunities, and sharing of trained models and techniques.

GitHub: Countless repositories contain trained models, tutorials, and tools. Search for “music generation” or specific model names to find resources.

YouTube Channels: Many creators document their AI music generation experiments, offering tutorials and demonstrations. Channels like “AI Euphonics” and “Carykh” are excellent starting points.

Academic Papers: For those interested in the deep theory, arXiv.org and academic conferences like ISMIR (International Society for Music Information Retrieval) publish cutting-edge research.

The open-source approach offers several advantages: complete transparency about how systems work, the ability to customize and fine-tune models, no ongoing subscription costs, and deeper learning about AI and music. The disadvantages are a steeper learning curve and more technical barriers to entry.

We recommend starting with simpler open-source tools like Riffusion or MuseNet’s web interface before diving into Magenta or Jukebox. As your comfort grows, the open-source world offers unlimited depth for exploration. And importantly, contributing to open-source projects—whether through code, documentation, or even just detailed bug reports—helps advance the entire field while building genuine expertise.

AI Music Generation: Understanding the Role of Datasets

AI Music Generation: Understanding the Role of Datasets is crucial for anyone who wants to truly understand how these systems work and the ethical implications of their use. In simple terms: AI music generators are only as good as the music they’ve been trained on. Garbage in, garbage out applies completely to AI music generation.

A dataset in this context is the collection of music the AI learns from during training. Think of it like musical education—if a human learns piano by only hearing Mozart, they’ll create Mozart-style music. If they hear Mozart, Coltrane, Björk, and Aphex Twin, their creative palette expands dramatically. AI training follows similar logic.

Dataset Composition and Bias: Most commercial AI music systems are trained on millions of songs spanning many genres, but the exact composition of these datasets significantly affects outputs. If a dataset contains 60% pop music, 20% rock, 10% electronic, 5% classical, and 5% everything else, the AI will be most confident generating pop and least confident with underrepresented genres.

We see this bias in practice constantly. Prompt for a “pop song” and you’ll usually get strong results. Prompt for “traditional Mongolian throat singing” and results are often poor because throat singing is underrepresented in training data. The AI hasn’t learned enough about that tradition to recreate it authentically.
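The effect of dataset composition is easy to check when you control the data yourself. The following is a small sketch, assuming you have a per-genre track count for your own dataset. The counts mirror the hypothetical 60/20/10/5/5 split described above, and both function names and the 10% threshold are illustrative choices, not an established standard.

```python
def genre_shares(track_counts):
    """Return each genre's fraction of the whole dataset."""
    total = sum(track_counts.values())
    if total == 0:
        raise ValueError("dataset is empty")
    return {genre: count / total for genre, count in track_counts.items()}


def underrepresented(track_counts, threshold=0.10):
    """List genres whose share falls below `threshold`, alphabetically."""
    shares = genre_shares(track_counts)
    return sorted(genre for genre, share in shares.items() if share < threshold)


# Hypothetical counts matching the 60/20/10/5/5 example.
counts = {"pop": 600, "rock": 200, "electronic": 100, "classical": 50, "other": 50}

for genre, share in genre_shares(counts).items():
    print(f"{genre}: {share:.0%}")
print("Likely weak spots:", underrepresented(counts))
```

Genres flagged this way are the ones where you should expect less convincing output, or where collecting more examples would help most.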

Copyright and Licensing Issues: Here’s where things get ethically complex. Many AI models are trained on music without explicit permission from copyright holders. Companies argue this constitutes fair use—the AI learns patterns rather than copying specific songs. Artists and labels often disagree, arguing their work is being exploited without compensation.

Some companies are addressing this proactively. Stability AI’s audio division has created datasets using only properly licensed and public domain music. Others partner with music libraries and publishers to ensure training data is ethically sourced. As users, we should favor platforms that are transparent about their training data and compensate creators appropriately.

Public Domain and Creative Commons: Some projects exclusively use music in the public domain (copyright expired) or licensed under Creative Commons terms that allow AI training. This sidesteps copyright concerns but limits the diversity of training data since most contemporary music isn’t public domain. The tradeoff is ethical clarity versus creative capability.

Quality and Curation: Not all music is equally good training data. Including poorly produced, out-of-tune, or technically flawed recordings teaches the AI to reproduce those flaws. Quality datasets are carefully curated, including only well-produced, technically proficient examples. This is one reason AI-generated music from professional platforms sounds polished: their training data was curated for quality.

Representation and Diversity: A major current challenge is ensuring datasets represent diverse musical traditions equitably. Western popular music dominates most datasets because it dominates commercially available recordings. This means AI systems reproduce Western musical norms as “default” while treating other traditions as exceptions or exotic variations.

Efforts are underway to create more representative datasets, including diverse world music traditions, but the process requires careful work with cultural experts to avoid misappropriation or disrespectful use of traditional music. We need datasets that represent humanity’s full musical heritage, not just the commercially dominant portions.

Personal Dataset Creation: For open-source tools like Magenta, you can create custom datasets from your own music or properly licensed sources. This allows training AI on specific styles—imagine an AI trained exclusively on your favorite artist (assuming you have rights to do so). The results would be deeply style-specific rather than generalized.

We experimented with training a small model exclusively on ambient music from a Creative Commons audio library. The resulting model generated only ambient music, but with much more stylistic consistency than general-purpose models. For specific applications, custom datasets can be powerful.

Data Augmentation: Advanced users employ “data augmentation”—artificially expanding datasets by transposing music to different keys, varying tempo, or applying effects. This helps AI learn that the same musical idea can be expressed in multiple ways. It’s particularly useful for smaller datasets.
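Transposition and tempo changes are simple to sketch for symbolic (MIDI-style) data. The example below assumes each note is a `(pitch, start_seconds, duration_seconds)` tuple with MIDI pitch numbers from 0 to 127. The function names and the default shift and tempo values are hypothetical choices for illustration, not a recommendation from any specific toolkit.

```python
def transpose(notes, semitones):
    """Shift every pitch by `semitones`, staying inside the MIDI range 0-127."""
    shifted = []
    for pitch, start, duration in notes:
        new_pitch = pitch + semitones
        if not 0 <= new_pitch <= 127:
            raise ValueError(f"pitch {new_pitch} is outside the MIDI range")
        shifted.append((new_pitch, start, duration))
    return shifted


def change_tempo(notes, factor):
    """Play the same notes `factor` times faster (factor > 1) or slower (< 1)."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    return [(pitch, start / factor, duration / factor)
            for pitch, start, duration in notes]


def augment(notes, semitone_shifts=(-2, 0, 2), tempo_factors=(0.9, 1.0, 1.1)):
    """Return one variant per combination of key shift and tempo factor."""
    return [change_tempo(transpose(notes, shift), factor)
            for shift in semitone_shifts
            for factor in tempo_factors]


# A three-note motif (C, E, G) as made-up example data.
motif = [(60, 0.0, 0.5), (64, 0.5, 0.5), (67, 1.0, 1.0)]
variants = augment(motif)
print(len(variants), "variants from one motif")
```

With three key shifts and three tempo factors, one motif becomes nine training examples, which is why this technique matters most for small datasets.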

The Future of Datasets: We’re moving toward more transparent, ethically sourced datasets with proper artist compensation and representation. Blockchain technologies may enable tracking exactly which training songs influenced which generated outputs, allowing micro-compensation of original artists. Some envision systems where artists opt in to AI training and receive payment when the AI generates music influenced by their style.

Understanding datasets helps you evaluate AI music generation platforms critically. Ask: Where did their training data come from? Do they compensate original artists? How diverse are the musical traditions in their data? Companies unwilling to answer these questions warrant skepticism.

For us as creators using AI, this means being mindful that every AI-generated piece has ancestors—human musicians whose work taught the AI. Respecting those origins, even when they’re obscured by algorithmic transformation, is part of using this technology responsibly.

AI Music Generation: Tips and Tricks for Improving Output Quality

AI Music Generation: Tips and Tricks for Improving Output Quality distills our hard-earned knowledge from thousands of generations into practical advice you can apply immediately. These aren’t obvious tips you’d find in documentation—they’re discoveries from extensive experimentation that dramatically improved our results.

Tip 1: Prompt Iteration is Everything

Never settle for your first prompt. Generate 5-10 variations with slightly different wording, then analyze which produces the best results. Small changes create big differences. “Upbeat pop song” versus “energetic indie pop with handclaps and bright acoustic guitars” generates vastly different output. Document successful prompts in a notebook so you can reuse effective phrasings.

Tip 2: Use Specific BPM Numbers

Instead of “fast tempo,” specify “128 BPM.” Instead of “slow and dreamy,” try “62 BPM.” Concrete numbers give the AI precise targets. In our own informal testing, this alone improved consistency by about 40%. Standard tempos: 60-70 slow ballad, 80-95 moderate, 100-115 upbeat, 120-135 energetic, 140+ very fast/dance.
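If you keep your prompts in a script or notebook, the tempo ranges above can be turned into a quick lookup. This is just a sketch: the names `TEMPO_RANGES`, `describe_tempo`, and `add_bpm` are hypothetical, and the ranges are copied from the list above, including the gaps between them.

```python
# Tempo ranges from the tip above; None means "no upper limit".
TEMPO_RANGES = [
    ("slow ballad", 60, 70),
    ("moderate", 80, 95),
    ("upbeat", 100, 115),
    ("energetic", 120, 135),
    ("very fast/dance", 140, None),
]


def describe_tempo(bpm):
    """Return the label for `bpm`, or None if it falls between listed ranges."""
    for label, low, high in TEMPO_RANGES:
        if bpm >= low and (high is None or bpm <= high):
            return label
    return None


def add_bpm(prompt, bpm):
    """Append an explicit tempo to a text prompt."""
    return f"{prompt}, {bpm} BPM"


print(describe_tempo(128))
print(add_bpm("energetic indie pop with handclaps", 128))
```

A value such as 75 BPM returns `None` here because it sits between the "slow ballad" and "moderate" ranges. Whether to round it up or down is a judgment call the list leaves open.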

Tip 3: Reference Specific Instruments, Not Just “Guitars”

“Acoustic guitar” tells the AI something; “fingerpicked steel-string acoustic guitar with bright, crisp tone” tells it much more. Similarly, “synth” is vague, but “warm analog-style synth pads with slow attack and long release” is precise. The more specific your instrumental descriptions, the better the results.

Tip 4: Structure Your Longer Prompts in Layers

For complex generations, build your prompt in this order: (1) Genre and tempo, (2) Mood and emotion, (3) Instrumentation, (4) Structure and progression, (5) Production details. Example: “Electronic downtempo, 85 BPM [genre/tempo]. Melancholic but hopeful [mood]. Features warm Rhodes piano, deep bass, subtle strings [instrumentation]. Builds gradually from minimal intro to fuller arrangement by midpoint [structure]. Lo-fi production with vinyl crackle and warm analog feel [production].”
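The five-layer order can be captured in a small helper so every prompt you write follows the same structure. This is a sketch only: `build_prompt` is a hypothetical function, and it simply joins the layers into one piece of text. How a given platform interprets that text is outside its control.

```python
def build_prompt(genre_tempo, mood, instrumentation, structure, production):
    """Join the five layers, in order, into one period-separated prompt."""
    layers = [genre_tempo, mood, instrumentation, structure, production]
    # Skip empty layers and strip stray whitespace or trailing periods.
    cleaned = [layer.strip().rstrip(".") for layer in layers if layer and layer.strip()]
    if not cleaned:
        raise ValueError("at least one layer is required")
    return ". ".join(cleaned) + "."


prompt = build_prompt(
    genre_tempo="Electronic downtempo, 85 BPM",
    mood="Melancholic but hopeful",
    instrumentation="Features warm Rhodes piano, deep bass, subtle strings",
    structure="Builds gradually from minimal intro to fuller arrangement by midpoint",
    production="Lo-fi production with vinyl crackle and warm analog feel",
)
print(prompt)
```

Keeping the layers as separate arguments also makes iteration easier: you can change only the mood or only the production line and compare results.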

Tip 5: Generate in Sections for Long Pieces

Rather than generating a complete 5-minute track, generate 30-second sections with specific characteristics, then edit them together. This gives more control and often better quality than asking for full-length pieces. Create the intro, verse, chorus, bridge, and outro separately, then combine them in your DAW.
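Before generating, it can help to plan where each section will sit on the timeline. The sketch below assumes a simple list of `(name, seconds)` pairs. The function `section_timeline` and the example arrangement are hypothetical, and the actual stitching still happens in your DAW.

```python
def section_timeline(sections):
    """Turn (name, seconds) pairs into (name, start, end) positions in seconds."""
    timeline = []
    position = 0
    for name, seconds in sections:
        if seconds <= 0:
            raise ValueError(f"section '{name}' needs a positive length")
        timeline.append((name, position, position + seconds))
        position += seconds
    return timeline


# One possible arrangement built from 30-second generations (made-up example).
plan = [
    ("intro", 30), ("verse", 30), ("chorus", 30), ("verse", 30),
    ("chorus", 30), ("bridge", 30), ("chorus", 30), ("outro", 30),
]

for name, start, end in section_timeline(plan):
    print(f"{start:>3}s - {end:>3}s  {name}")
```

A plan like this also tells you how many distinct generations you need: here the verse and chorus can each be generated once and reused.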