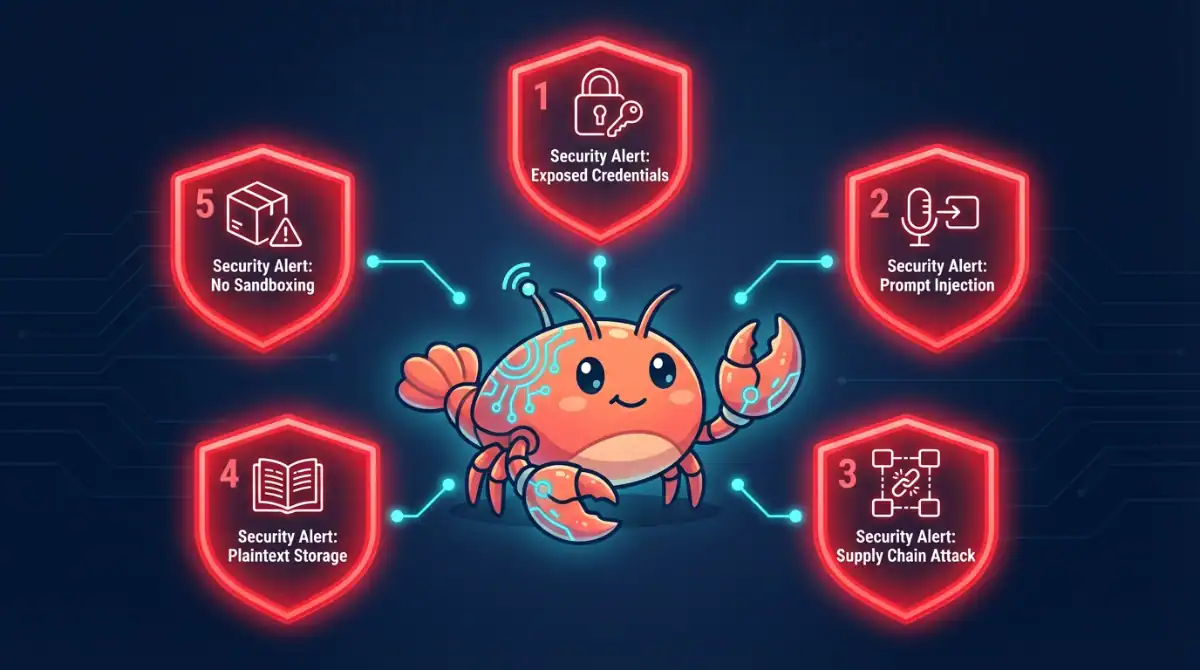

Viral AI Agent Moltbot: 5 Security Red Flags

Key Points

- AI agent Moltbot (formerly Clawdbot) has gained 85,000+ GitHub stars but faces severe security scrutiny from cybersecurity experts

- Security researchers discovered hundreds of exposed instances with credentials stored in plaintext format

- Supply chain attack proof-of-concept successfully demonstrated on Moltbot’s skill library

- Google Cloud and other major security firms are warning users not to install the viral AI assistant

- Enterprise environments show 22% of customers have employees using Moltbot without IT approval

Background

AI agent Moltbot, originally launched as Clawdbot in November 2025, is an open-source personal AI assistant created by Peter Steinberger that promises to be “the AI that actually does things.” The platform manages emails, schedules calendar events, browses the web, and executes system commands on behalf of users. Unlike cloud-based chatbots, Moltbot runs locally on user devices 24/7 with persistent memory that retains conversation context across weeks or even months (ℹ️ Palo Alto Networks).

The platform’s viral success comes from deep system integration with popular messaging apps, including WhatsApp, Telegram, Discord, and Slack. Within just one week, it collected over 85,000 GitHub stars and 11,500 forks. The project was recently rebranded from Clawdbot to Moltbot following trademark concerns raised by Anthropic, though the name change hasn’t addressed fundamental security issues (ℹ️ The Register).

What Happened

Multiple security researchers have identified critical vulnerabilities in AI agent Moltbot that expose users to severe risks. Jamieson O’Reilly, founder of red-teaming company Dvuln, conducted Shodan scans and discovered hundreds of internet-facing Moltbot instances with weak or missing authentication. Of the instances he examined manually, eight were completely open with no authentication, exposing full access to run commands and view configuration data. These misconfigurations could allow attackers to access months of private messages, API keys, and account credentials (ℹ️ The Register).

Hudson Rock’s security researchers discovered that Moltbot stores sensitive secrets in plaintext Markdown and JSON files on users’ local file systems. This design makes the data vulnerable to commodity infostealer malware families like RedLine, Lumma, and Vidar, which are already implementing capabilities to target local-first directory structures (ℹ️ BleepingComputer).

Perhaps most alarming, O’Reilly successfully demonstrated a proof-of-concept supply chain attack. He uploaded a benign but instrumented “skill” to the ClawdHub library and artificially inflated its download count to over 4,000. Developers from seven countries downloaded the poisoned package, proving that malicious actors could steal SSH keys, AWS credentials, and entire codebases before victims realize anything is wrong (ℹ️ The Register).

In enterprise environments, Token Security reported that 22% of their customers have employees actively using Moltbot without IT approval, creating significant corporate data leakage risks (ℹ️ BleepingComputer).

Why It Matters

The security concerns surrounding AI agent Moltbot have prompted strong warnings from industry leaders. Heather Adkins, VP of security engineering at Google Cloud, publicly urged people to avoid installing it, citing a security researcher who called it “an infostealer malware disguised as an AI personal assistant. ” Principal security consultant Yassine Aboukir questioned, “How could someone trust that thing with full system access?” (ℹ️ The Register).

Palo Alto Networks security researchers conducted a comprehensive analysis and mapped Moltbot’s vulnerabilities to the complete OWASP Top 10 for Agentic Applications. The platform fails on all ten criteria, including prompt injection, insecure tool invocation, excessive autonomy, missing human-in-the-loop controls, and lack of runtime monitoring. The researchers stated, “Moltbot is not designed to be used in an enterprise ecosystem.”

The platform’s persistent memory feature creates what researchers call “delayed multi-turn attack chains.” Malicious instructions hidden in forwarded WhatsApp or Signal messages can remain dormant in the system for weeks before execution, bypassing traditional security guardrails that can’t detect or block such attacks (ℹ️ Palo Alto Networks).

What’s Next

Security experts recommend that current Moltbot users disconnect the platform immediately and revoke all connected service integrations, particularly email, social media, and services with access to sensitive data. For those who choose to continue using the platform despite the risks, researchers advise several hardening measures: implement strict firewall rules to restrict access to trusted IP addresses only, rotate all API keys and passwords that may have been exposed, and run the AI agent in isolated virtual machines rather than directly on host operating systems with root access (ℹ️ Intruder).

The broader AI industry is now grappling with how to build autonomous agents that balance functionality with security. Palo Alto Networks emphasized that “the future of AI assistants is not just about smarter agents; it’s about secure agents that can be governed and are built with an understanding of when not to act.” The Moltbot case highlights that security in agentic AI cannot be an afterthought—it must be designed in from the beginning (ℹ️ Palo Alto Networks).

Source: Multiple verified cybersecurity sources—Published January 31, 2026

Original articles:

- The Register: https://www.theregister.com/2026/01/27/clawdbot_moltbot_security_concerns/

- Palo Alto Networks: https://www.paloaltonetworks.com/blog/network-security/why-moltbot-may-signal-ai-crisis/

- BleepingComputer: https://www.bleepingcomputer.com/news/security/viral-moltbot-ai-assistant-raises-concerns-over-data-security/

About the Author

This article was written by Nadia Chen, an expert in AI ethics and digital safety who helps non-technical users navigate AI tools safely and responsibly. Nadia focuses on empowering users to leverage AI technology while maintaining strong privacy and security practices.