Introduction to AI Ethics: Core Principles and Values

When I started working with artificial intelligence tools, I thought ethics was something only philosophers and policymakers needed to worry about. I was wrong. The moment I used AI to help make decisions that affected real people, from content moderation to resume screening, I realized that everyone who uses AI carries ethical responsibility. AI ethics isn’t just an academic exercise; it’s a practical framework that helps us navigate the confusing moral landscape of technology that’s reshaping our world.

As someone who’s spent years studying AI ethics and digital safety, I’ve seen firsthand how ethical considerations can mean the difference between AI that empowers people and AI that harms them. Whether you’re a business owner using AI chatbots, a student experimenting with AI writing tools, or simply someone curious about technology’s role in society, understanding ethical principles isn’t optional anymore—it’s essential.

What Is AI Ethics, and Why Does It Matter?

AI ethics refers to the moral principles and values that guide how we develop, deploy, and use artificial intelligence systems. Think of it as a compass that helps us ask the right questions: Should we build this? How should we build it? Who benefits? Who might be harmed? What are our responsibilities?

At its core, AI ethics addresses a fundamental tension: artificial intelligence can be incredibly powerful and beneficial, but that same power can cause significant harm if not wielded responsibly. Unlike traditional software that follows explicit rules, AI systems learn from data and make decisions in ways that can be opaque, biased, or unpredictable.

I remember working with a small nonprofit that wanted to use AI to help distribute resources to communities in need. They were excited about the efficiency gains but hadn’t considered that their training data might reflect historical inequities. Without an ethical framework, they would have automated discrimination. This is why AI ethics matters—it helps us see the hidden impacts of our technological choices.

The Real-World Stakes

The consequences of unethical AI aren’t abstract. They affect people’s lives in concrete ways:

- Hiring algorithms that screen out qualified candidates based on biased patterns in historical data

- Facial recognition systems that misidentify people of color at higher rates, leading to wrongful arrests

- Credit-scoring AI that denies loans to people from certain neighborhoods, perpetuating economic inequality

- Healthcare algorithms that provide different quality recommendations based on demographic factors

- Content recommendation systems that amplify misinformation or extremist content

These aren’t hypothetical scenarios—they’re documented cases that have already happened. Understanding ethical AI principles helps us prevent these harms and build systems that respect human dignity and rights.

The Four Pillars of AI Ethics

While different organizations and scholars propose various frameworks, four core principles consistently emerge as foundational to AI ethics. I consider these to be the pillars that support responsible AI development and deployment.

Fairness: Ensuring Equal Treatment and Opportunity

Fairness in AI means that systems should not discriminate against individuals or groups based on protected characteristics like race, gender, age, disability, or other sensitive attributes. But fairness is more nuanced than simply treating everyone identically.

There are actually multiple definitions of fairness, and they sometimes conflict:

Demographic parity means different groups receive positive outcomes at similar rates. For instance, under demographic parity an AI hiring tool would recommend candidates from each demographic group at roughly the same rate.

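Equal opportunity focuses on ensuring that qualified individuals have equal chances of positive outcomes, regardless of their group membership. In technical terms, the share of truly qualified people who receive a positive outcome (the true positive rate) should be similar across groups.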

Individual fairness suggests that similar individuals should receive similar treatment: people with comparable qualifications should receive comparable outcomes.
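The first two definitions can be computed directly from a system’s outputs. Below is a minimal Python sketch, assuming binary predictions (1 = recommended), binary ground-truth labels (1 = qualified), and one group label per person; the function names and toy data are hypothetical. Individual fairness is left out because it depends on a task-specific way of deciding which people count as “similar.”

```python
from collections import defaultdict

def positive_rate_by_group(preds, groups):
    """Share of people in each group who received a positive outcome (demographic parity)."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(preds, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def true_positive_rate_by_group(preds, labels, groups):
    """Share of qualified people (label 1) in each group who received a positive outcome (equal opportunity)."""
    qualified, selected = defaultdict(int), defaultdict(int)
    for pred, label, group in zip(preds, labels, groups):
        if label == 1:
            qualified[group] += 1
            selected[group] += pred
    return {g: selected[g] / qualified[g] for g in qualified}

def max_gap(rates):
    """Largest difference between any two groups; 0.0 means identical rates on this metric."""
    return max(rates.values()) - min(rates.values())

# Toy data: group A has three qualified candidates out of four, group B has one.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
preds = list(labels)  # a perfectly accurate model

print(max_gap(positive_rate_by_group(preds, groups)))               # 0.5 (demographic parity gap)
print(max_gap(true_positive_rate_by_group(preds, labels, groups)))  # 0.0 (equal opportunity gap)
```

In the toy data, a perfectly accurate model satisfies equal opportunity (every qualified person in both groups is recommended) yet fails demographic parity, because the two groups have different shares of qualified candidates. When base rates differ like this, even a perfect model cannot satisfy both definitions at once, which is one concrete source of the conflict mentioned above.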

I learned the complexity of fairness when consulting for an educational technology company. Their AI tutoring system was equally accurate across different student groups (one type of fairness), but it provided less informative explanations to students from under-resourced schools because it had less training data from those contexts (a different fairness problem). We had to redesign the system to actively address these gaps.

Practical steps for fairness:

Testing your AI systems with diverse data representing different demographic groups is crucial. I always recommend creating a fairness checklist that includes questions like: Have we examined our training data for historical biases? Have we tested our system’s performance across different subgroups? Have we consulted with communities that might be affected?

Involve diverse stakeholders in the development process from the beginning, not just at the end. Different perspectives help identify potential fairness issues you might miss.

Be transparent about tradeoffs. Sometimes different fairness metrics conflict, and you need to make explicit choices about which definition matters most for your specific context.

Accountability: Taking Responsibility for AI Decisions

Accountability means that there should always be humans who are responsible for AI system outcomes—even when the AI makes autonomous decisions. You can’t just blame the algorithm when something goes wrong.

This principle addresses a critical challenge: as AI systems become more complex and autonomous, it becomes easier to diffuse responsibility. The data scientist says, “I just built what the product manager requested.” The product manager says, “I was just meeting business requirements.” The business leader says, “I was just trying to stay competitive.” Meanwhile, no one takes responsibility for the harm caused.

I witnessed this accountability gap when working with a healthcare provider that used AI to prioritize patient appointments. When the system began giving lower priority to elderly patients with complex conditions (because they had longer appointment histories that the AI misinterpreted), it took weeks to identify the problem because no single person felt responsible for monitoring the system’s real-world impact.

Building accountability into AI systems:

Establish clear ownership. Before deploying any AI system, designate specific individuals or teams responsible for monitoring its performance, investigating problems, and making corrections. Document these responsibilities in writing.

Create audit trails. AI systems should log their decisions in ways that humans can review and understand later. If your AI denies someone a loan or flags content for removal, there should be a record of what data influenced that decision.
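As an illustration, here is a minimal Python sketch of such a record, assuming an append-only JSON Lines file is acceptable storage. The `DecisionRecord` fields, file name, and example values are hypothetical; a real audit trail would also need access controls and protection against tampering, which this sketch does not provide.

```python
import json
import os
import tempfile
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone

@dataclass
class DecisionRecord:
    """One reviewable audit-trail entry (all field names are illustrative)."""
    subject_id: str      # pseudonymous ID of the person affected
    decision: str        # e.g. "loan_denied" or "content_flagged"
    model_version: str   # which model produced the decision
    inputs_used: dict    # the data the model actually saw
    top_factors: list    # factors that most influenced the outcome
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

def append_record(path, record):
    """Append one record as a single JSON line; existing lines are never rewritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")

def read_records(path):
    """Load every record so a human reviewer can inspect past decisions."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f]

log_path = os.path.join(tempfile.mkdtemp(), "decisions.jsonl")
append_record(log_path, DecisionRecord(
    subject_id="applicant-0042",
    decision="loan_denied",
    model_version="credit-model-v3",
    inputs_used={"income": 32000, "loan_amount": 250000},
    top_factors=["income relative to loan amount"],
))
records = read_records(log_path)
print(records[0]["decision"], records[0]["top_factors"])
```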

Implement human oversight mechanisms. For high-stakes decisions—those affecting people’s livelihoods, safety, or rights—require human review before AI recommendations are implemented. This doesn’t mean humans need to review everything, but there should be clear escalation paths for uncertain or contentious cases.
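One way to encode such escalation paths is a small routing rule. The sketch below assumes each recommendation carries a domain, a confidence score, and a flag for contested cases; the domain names and the 0.90 threshold are hypothetical placeholders, not recommended values.

```python
from dataclasses import dataclass

# Hypothetical policy values; real ones should be set and documented by the system's owners.
HIGH_STAKES_DOMAINS = {"lending", "hiring", "medical_triage"}
CONFIDENCE_FLOOR = 0.90

@dataclass
class Recommendation:
    domain: str
    outcome: str
    confidence: float       # model's own estimate, between 0 and 1
    disputed: bool = False  # e.g. the affected person has already appealed

def route(rec):
    """Return 'human_review' or 'auto_apply' for a single AI recommendation."""
    if rec.domain in HIGH_STAKES_DOMAINS:
        return "human_review"  # high stakes: a person approves before anything happens
    if rec.confidence < CONFIDENCE_FLOOR or rec.disputed:
        return "human_review"  # uncertain or contentious: escalate
    return "auto_apply"        # routine, confident, uncontested

print(route(Recommendation("lending", "deny", 0.99)))       # human_review
print(route(Recommendation("spam_filter", "remove", 0.97)))  # auto_apply
print(route(Recommendation("spam_filter", "remove", 0.60)))  # human_review
```

A confidence threshold only helps if the model’s confidence scores are reasonably well calibrated, so it is worth checking calibration before relying on one.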

Design appeal processes. People affected by AI decisions should have ways to challenge them and request human review. This isn’t just ethically important; it also creates feedback loops that help you identify and fix problems with your systems.

Transparency: Opening the Black Box

Transparency in AI ethics means that people should be able to understand how AI systems work and how they make decisions—at least to the extent necessary to assess their reliability and appropriateness. This doesn’t mean everyone needs to understand the mathematical details, but it does mean systems shouldn’t be inscrutable black boxes.

Transparency operates at multiple levels. There’s transparency about when AI is being used (disclosure), transparency about how the AI works generally (explainability), and transparency about why specific decisions were made (interpretability). Each level serves different purposes and audiences.

I appreciate transparency most when it helps people make informed choices. When I use an AI writing assistant, I want to know: Is this generating entirely new text or adapting existing content? What sources is it drawing from? How reliable is the output? Without this information, I can’t use the tool responsibly.

Making AI systems more transparent:

Disclose AI use clearly. When people interact with AI systems, they should know they’re interacting with AI, not a human. This is especially important for chatbots, automated customer service, and content generation. Simple labels like “This conversation is with an AI assistant” or “This content was AI-generated” help set appropriate expectations.

Provide system cards or model cards. These are documents that explain what an AI system does, what data it was trained on, its known limitations, and how it should and shouldn’t be used. Think of them as nutrition labels for AI: they give users the information they need to make informed decisions.
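A model card can start as a simple structured record with the sections listed above. This Python sketch is one hypothetical layout, not a standard format, and the example content describes an imaginary resume-screening tool.

```python
from dataclasses import dataclass

@dataclass
class ModelCard:
    name: str
    purpose: str             # what the system does
    training_data: str       # what it was trained on
    known_limitations: list  # where it performs poorly
    intended_uses: list      # how it should be used
    out_of_scope_uses: list  # how it should not be used

    def to_text(self):
        """Render the card as plain text that non-specialists can read."""
        lines = [f"Model card: {self.name}", "",
                 f"What it does: {self.purpose}",
                 f"Training data: {self.training_data}",
                 "Known limitations:"]
        lines += [f"  - {item}" for item in self.known_limitations]
        lines.append("Intended uses:")
        lines += [f"  - {item}" for item in self.intended_uses]
        lines.append("Do not use for:")
        lines += [f"  - {item}" for item in self.out_of_scope_uses]
        return "\n".join(lines)

card = ModelCard(
    name="resume-screener-demo",
    purpose="Ranks job applications so a recruiter can decide which to read first.",
    training_data="Hypothetical: anonymized application records from one company.",
    known_limitations=["Less reliable for applicants with non-traditional career paths."],
    intended_uses=["Ordering a recruiter's review queue."],
    out_of_scope_uses=["Rejecting applicants without human review."],
)
print(card.to_text())
```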

Explain decisions when they matter. For consequential decisions, provide explanations that help people understand the outcome. This might be as simple as “Your loan application was declined primarily due to insufficient income relative to the loan amount” or as complex as showing which factors most influenced a medical diagnosis recommendation.
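For a simple linear scoring model, the factors behind a decision can be read off directly: each factor’s contribution is its weight times how far the applicant is from a typical (baseline) applicant. The sketch assumes such a linear model; the weights, baseline values, factor names, and wording are all hypothetical. More complex models need dedicated attribution methods, which this sketch does not cover.

```python
# Hypothetical linear credit score: weights, baseline, and wording are illustrative only.
WEIGHTS = {"income_to_loan_ratio": 4.0, "years_of_credit_history": 0.3, "missed_payments": -1.5}
BASELINE = {"income_to_loan_ratio": 0.5, "years_of_credit_history": 8, "missed_payments": 0}
REASON_TEXT = {
    "income_to_loan_ratio": "insufficient income relative to the loan amount",
    "years_of_credit_history": "a short credit history",
    "missed_payments": "recent missed payments",
}

def contributions(applicant):
    """How much each factor moved this applicant's score relative to the baseline applicant."""
    return {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}

def main_reasons(applicant, k=2):
    """The (up to) k factors that lowered the score most, most harmful first."""
    negative = [(f, c) for f, c in contributions(applicant).items() if c < 0]
    negative.sort(key=lambda pair: pair[1])
    return [f for f, _ in negative[:k]]

def explain_decline(applicant):
    """Turn the top negative factors into a plain-language sentence."""
    reasons = main_reasons(applicant)
    if not reasons:
        return "Your application was declined; no individual factor was below the baseline."
    return ("Your application was declined primarily due to "
            + " and ".join(REASON_TEXT[r] for r in reasons) + ".")

applicant = {"income_to_loan_ratio": 0.05, "years_of_credit_history": 10, "missed_payments": 1}
print(explain_decline(applicant))
# Your application was declined primarily due to insufficient income relative
# to the loan amount and recent missed payments.
```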

Be honest about limitations. Transparency includes being clear about what your AI system can’t do well. If your facial recognition works poorly in low light, say so. If your language model sometimes generates false information, warn users. This kind of honesty builds trust and helps people use AI appropriately.

Privacy: Protecting Personal Information and Dignity

Privacy in the context of AI ethics means respecting individuals’ rights to control their personal information and protecting it from misuse. AI systems often require vast amounts of data to function effectively, creating tension between functionality and privacy protection.

This principle has become increasingly critical as AI systems can infer sensitive information from seemingly innocuous data. Your AI system might not collect health information directly, but if it analyzes someone’s search history, purchase patterns, and location data, it might be able to infer health conditions with alarming accuracy.

I’m particularly cautious about privacy because I’ve seen how easily it can be violated unintentionally. A company I worked with was using AI to personalize educational content for students. They thought they were being privacy-conscious by not asking for names, but their system could still identify individual students based on behavioral patterns and learning styles. That level of individual tracking, even without names attached, raised serious privacy concerns.

Protecting privacy in AI systems:

Collect only necessary data. Before gathering data for your AI system, ask: Do we really need this information? Can we accomplish our goals with less intrusive data? Often, you can build effective AI with aggregated or anonymized data rather than detailed individual information.

Implement data minimization and retention limits. Don’t keep data forever just because you can. Establish clear policies about how long you’ll retain personal information and stick to them. Regularly purge data that’s no longer needed.
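Both ideas can be enforced in code where data enters the system and where it sits in storage. This Python sketch assumes an allowlist of fields the model genuinely needs and a fixed retention window; the field names and the 365-day period are hypothetical policy choices.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical policy: the only fields the model needs, and how long records may be kept.
ALLOWED_FIELDS = {"user_id", "lesson_id", "score", "created_at"}
RETENTION = timedelta(days=365)

def minimize(record):
    """Drop every field not on the allowlist before the record is stored."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}

def purge_expired(records, now):
    """Keep only records younger than the retention period."""
    return [r for r in records if now - r["created_at"] < RETENTION]

now = datetime(2025, 1, 1, tzinfo=timezone.utc)
incoming = {
    "user_id": "u1", "lesson_id": "l7", "score": 0.8,
    "created_at": now - timedelta(days=10),
    "home_address": "12 Example St",  # not needed for personalization
    "birth_date": "2010-05-04",       # not needed either
}
store = [
    minimize(incoming),
    {"user_id": "u2", "lesson_id": "l3", "score": 0.5, "created_at": now - timedelta(days=400)},
]
store = purge_expired(store, now)
print([r["user_id"] for r in store])  # ['u1']
```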

Use privacy-preserving techniques. Technologies like differential privacy (adding carefully calibrated noise to data to protect individuals while preserving overall patterns), federated learning (training AI models on distributed devices without centralizing data), and homomorphic encryption (performing computations on encrypted data) can help you build effective AI while protecting privacy.
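To make the differential privacy idea concrete, here is a minimal Python sketch of the Laplace mechanism for a single counting query. Because adding or removing one person changes a count by at most 1, adding Laplace noise with scale 1/ε makes that one answer ε-differentially private. The data and function names are hypothetical; each further query spends additional privacy budget (under basic composition the ε values add up), and production systems should use a vetted library with secure randomness rather than hand-rolled noise.

```python
import random

def laplace_noise(scale, rng):
    """One Laplace(0, scale) sample: the difference of two independent exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(values, predicate, epsilon, rng):
    """Epsilon-differentially private count of values matching `predicate`.

    A count has sensitivity 1 (one person changes it by at most 1),
    so the Laplace mechanism adds noise with scale 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)

rng = random.Random(0)  # seeded only so the example is repeatable
ages = [23, 35, 41, 52, 67, 71, 29, 80]  # hypothetical records; true count of 65+ is 3
print(round(private_count(ages, lambda a: a >= 65, epsilon=0.5, rng=rng), 2))
```

Smaller values of ε add more noise and give stronger privacy; larger values give more accurate answers and weaker protection. Choosing ε is therefore a policy decision as much as a technical one.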

Obtain meaningful consent. If you’re collecting personal data for AI training, make sure people understand what they’re consenting to. “We’ll use your data to improve our services” is too vague. Explain specifically how the data will be used, who will have access to it, and what controls individuals have over their information.

Allow people to opt out. Whenever possible, give people choices about whether and how their data is used for AI training and deployment. This respects autonomy and helps build trust.

The Philosophical Foundations of AI Ethics

Understanding the practical principles is important, but it’s also valuable to explore where these ideas come from philosophically. AI ethics doesn’t exist in a vacuum—it builds on centuries of moral philosophy and ethical thinking.

Consequentialism: Judging by Outcomes

Consequentialist ethics, particularly utilitarianism, judges actions based on their outcomes. From this perspective, an AI system is ethical if it produces the greatest good for the greatest number of people. This approach has intuitive appeal: shouldn’t we want technology that maximizes human well-being?

Many AI ethics frameworks implicitly adopt consequentialist thinking when they focus on measuring and maximizing beneficial outcomes while minimizing harms. Risk assessment, impact evaluation, and cost-benefit analysis all reflect consequentialist reasoning.

However, pure consequentialism has limitations. It can justify harming minorities if it benefits the majority. It requires us to predict outcomes that may be uncertain or unknowable. And it doesn’t account for how outcomes are distributed—whether benefits and harms are shared fairly.

Deontology: Following Universal Rules

Deontological ethics, associated with philosopher Immanuel Kant, argues that certain actions are inherently right or wrong regardless of their consequences. From this perspective, there are moral rules we should always follow—don’t lie, don’t use people merely as means to ends, and respect human dignity and autonomy.

This philosophy influences AI ethics through concepts like informed consent (respecting autonomy), transparency (honesty about AI capabilities and limitations), and human rights protections (treating people with dignity regardless of utilitarian calculations).

The challenge with purely deontological approaches is that moral rules sometimes conflict. What if being transparent about an AI system’s capabilities would help malicious actors misuse it? What if respecting privacy means less effective public health interventions? We need ways to navigate these tensions.

Virtue Ethics: Cultivating Good Character

Virtue ethics focuses less on specific actions or outcomes and more on the character and motivations of moral agents. It asks: What kind of people should AI developers be? What virtues—honesty, compassion, wisdom, and justice—should guide our work with AI?

This perspective reminds us that ethical AI development isn’t just about following checklists or calculating outcomes. It’s about cultivating professional cultures that value honesty, integrity, and concern for others. It’s about hiring people who demonstrate good judgment and moral sensitivity.

I find virtue ethics particularly relevant when facing novel ethical dilemmas—situations where we don’t have clear rules or can’t fully predict consequences. In those moments, we need people with the judgment and character to make wise choices.

Care Ethics: Emphasizing Relationships and Context

Care ethics, developed largely by feminist philosophers, emphasizes relationships, context, and responsiveness to particular needs rather than abstract universal principles. It asks: Who is vulnerable here? What are the specific relationships and dependencies? How can we respond to concrete needs?

This approach enriches AI ethics by directing attention to power dynamics, historical context, and the particular circumstances of affected communities. It reminds us that ethical AI development isn’t just about following principles but about genuinely caring for the people our systems affect and being responsive to their concerns.

Applying AI Ethics in Practice: Real Scenarios

Understanding principles philosophically is one thing; applying them in messy real-world situations is another. Let me walk you through some scenarios that illustrate how these principles work in practice.

Scenario 1: The Hiring Algorithm Dilemma

A company develops an AI system to screen job applications, training it on ten years of hiring data. The system becomes very efficient at identifying candidates who match successful past hires. However, an audit reveals that the AI is less likely to recommend women for technical positions.

Ethical analysis:

From a fairness perspective, this is clearly problematic. The AI has learned historical gender biases present in past hiring decisions. Even if individual hiring managers weren’t consciously discriminating, patterns in the data have been amplified by the AI.

Accountability requires identifying who’s responsible for this bias and for fixing it. Is it the data scientists who built the model? Is it the HR team that supplied the training data? The executives who approved the system? In practice, all of these parties share responsibility.

Transparency would have helped catch this problem earlier. If the company had disclosed how the system worked and tested it across demographic groups before deployment, they might have identified the bias. Going forward, they need to be transparent with applicants about AI use in hiring.

Privacy is also relevant. The company needs to ensure that the detailed information collected for AI screening is protected and used only for legitimate hiring purposes.

The solution: The company needs to retrain the model using bias mitigation techniques, expand its training data to include more diverse successful employees, implement human oversight for hiring decisions, and regularly audit the system’s performance across different demographic groups.

Scenario 2: The Health Monitoring App

A health technology startup develops an AI-powered app that monitors users’ physical activity, sleep patterns, and heart rate to provide personalized health recommendations. The AI identifies patterns that might indicate health risks and encourages users to see doctors when appropriate.

Ethical considerations:

Privacy is paramount. The app collects extremely sensitive health information. Users need clear information about what data is collected, how it’s protected, and who has access to it. The company should use privacy-preserving techniques and avoid sharing detailed individual data with third parties.

Transparency matters because health decisions have serious consequences. Users should understand that the AI provides suggestions, not diagnoses. They should know what patterns the AI is looking for and what limitations it has.

Fairness requires ensuring the AI works well across different populations. If the AI was primarily trained on data from young, healthy users, it might not provide accurate recommendations for elderly users or people with chronic conditions.

Accountability means having medical professionals involved in system design and creating clear escalation paths when the AI identifies serious health concerns. There should be humans responsible for monitoring the system’s accuracy and responding to user concerns.

Scenario 3: The Content Moderation System

A social media platform uses AI to automatically detect and remove harmful content, including hate speech, misinformation, and graphic violence. The AI processes millions of posts per day, flagging content for human review or automatically removing clear violations.

Ethical tensions:

This scenario illustrates how ethical principles can conflict. Privacy might suggest limiting data collection about users and their posts. But fairness and safety require understanding context to moderate content appropriately. Transparency about moderation rules could help bad actors evade detection.

Accountability is complicated because content moderation decisions affect free expression—a fundamental right. Who should be accountable when the AI makes mistakes? How should the platform balance different stakeholders: users posting content, users viewing content, advertisers, regulators, and broader society?

The approach: The most ethical systems combine AI efficiency with human judgment, particularly for borderline cases. They’re transparent about general policies while protecting specific detection methods. They implement appeals processes. They audit for fairness to ensure that moderation doesn’t disproportionately silence marginalized voices. And they accept responsibility for both over-moderation (censorship) and under-moderation (allowing harm).

Common Ethical Pitfalls and How to Avoid Them

Through my work in AI ethics, I’ve noticed patterns in how projects go wrong ethically. Here are common pitfalls and strategies to avoid them.

Pitfall 1: Ethics as an Afterthought

Many organizations treat ethics as something to think about after they’ve already built an AI system. They develop the technology, deploy it, and only consider ethical implications when problems arise or critics complain.

How to avoid it: Integrate ethical AI principles from the very beginning of your project. During the planning phase, conduct an ethical impact assessment asking: Who will this affect? How might it cause harm? What are our responsibilities to different stakeholders? Include ethicists or people with ethics training on your development team. Make ethics a regular discussion point in project meetings, not a one-time checklist.

Pitfall 2: Confusing Legal Compliance with Ethics

Following the law is necessary but not sufficient for ethical AI. Legal requirements represent minimum standards and often lag behind technological developments. Something can be legal but still unethical.

How to avoid it: View legal compliance as the floor, not the ceiling. Ask not just “Is this practice legal?” but “Is this right? Does this respect human dignity? Would we be comfortable if everyone knew how this system works?” Seek to do better than legal requirements, not just meet them.

Pitfall 3: Assuming Automation Equals Objectivity

There’s a dangerous myth that replacing human decision-making with AI automatically makes processes more objective and fair. In reality, AI systems can amplify and scale human biases present in training data or system design choices.

How to avoid it: Approach AI systems with healthy skepticism. Test rigorously for bias. Remember that algorithms reflect the values, assumptions, and blind spots of their creators. Just because a decision is automated doesn’t mean it’s neutral or fair.

Pitfall 4: Ignoring Power Dynamics

AI systems don’t operate in neutral contexts. They exist within relationships of power—between companies and users, governments and citizens, and employers and employees. Ethical analysis that ignores these power dynamics misses important considerations.

How to avoid it: Ask who benefits from your AI system and who bears risks. Consider how your system might affect already vulnerable or marginalized groups. Involve affected communities in design and evaluation. Be especially cautious when deploying AI systems that affect people who have little choice about whether to interact with them.

Pitfall 5: Prioritizing Innovation Over Impact

The tech industry often celebrates innovation for its own sake, rushing to deploy new AI capabilities without fully considering their implications. “Move fast and break things” is a terrible motto when the things being broken are people’s lives.

How to avoid it: Balance innovation with responsibility. Before deploying AI systems, especially in high-stakes domains, invest time in testing, evaluation, and impact assessment. Sometimes moving slower initially allows you to move faster later by avoiding expensive mistakes and rebuilding trust.

Building an Ethical AI Practice: Where to Start

If you’re convinced that AI ethics matters—and I hope you are—you might be wondering how to actually implement these principles in your work or organization. Here’s my practical advice based on what I’ve seen work.

Start with Values Clarification

Before you can build ethical AI, you need to articulate what values you’re trying to uphold. Have explicit conversations about: What does fairness mean for our specific use case? What level of transparency is appropriate? How do we balance different stakeholder interests?

Document these values and the reasoning behind them. This creates a reference point for making difficult tradeoffs later.

Create Ethical Guidelines and Processes

Translate your values into concrete guidelines and decision-making processes. This might include:

- An ethical AI review checklist that teams complete before deploying systems

- Red lines—things you commit not to do regardless of business pressure

- Required assessments for high-risk applications

- Defined roles and responsibilities for ethical oversight

- Processes for investigating and responding to ethical concerns

Make these guidelines living documents that evolve as you learn from experience.

Invest in Education and Training

AI ethics requires knowledge and skills that many technical professionals haven’t traditionally been trained in. Invest in education about:

- Ethical frameworks and principles

- Bias detection and mitigation techniques

- Privacy-preserving technologies

- Stakeholder engagement methods

- Impact assessment approaches

Make ethics training ongoing, not a one-time workshop. As AI capabilities and risks evolve, so must your team’s understanding.

Build Diverse and Inclusive Teams

Homogeneous teams have collective blind spots. They miss ethical issues that would be obvious to people with different backgrounds and experiences. Actively recruit people with diverse perspectives—different genders, races, ages, disciplines, and life experiences.

Create team cultures where it’s safe and encouraged to raise ethical concerns. Reward people who identify problems, not just those who ship products quickly.

Engage with Affected Communities

The people most affected by your AI systems are experts in their own experiences and needs. Engage with them early and often. This might mean:

- User research that specifically explores ethical concerns and values

- Advisory boards that include community representatives

- Public comment periods for high-impact systems

- Partnerships with advocacy organizations

Listen genuinely and be willing to change your plans based on what you learn.

Measure What Matters

You can’t manage what you don’t measure. Develop metrics for ethical performance:

- Fairness metrics across different demographic groups

- Accuracy of explanations provided by your system

- Response times for addressing ethical concerns

- Diversity statistics for your team and data

- Privacy incident reports and response effectiveness

Review these metrics regularly and use them to drive improvement.

Plan for Things Going Wrong

Despite best efforts, mistakes happen. Have plans in place for:

- Monitoring deployed systems for unexpected behaviors

- Investigating ethical concerns and complaints

- Communicating with affected individuals and the public

- Making corrections quickly

- Learning from incidents to prevent recurrence
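Monitoring for unexpected behavior can start with something as simple as comparing outcome rates per group against a baseline period; a check like this might have surfaced the appointment-priority problem described earlier much sooner. This Python sketch assumes each decision is logged with a group label and a yes/no outcome; the group names, counts, and 10-percentage-point tolerance are hypothetical.

```python
from collections import defaultdict

def favorable_rates(decisions):
    """decisions: (group, favorable) pairs -> share of favorable outcomes per group."""
    totals, favorable = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        favorable[group] += int(ok)
    return {g: favorable[g] / totals[g] for g in totals}

def drift_alerts(baseline, current, tolerance=0.10):
    """Groups whose favorable-outcome rate moved more than `tolerance` from the baseline."""
    alerts = []
    for group, base_rate in baseline.items():
        now_rate = current.get(group)
        if now_rate is not None and abs(now_rate - base_rate) > tolerance:
            alerts.append((group, base_rate, now_rate))
    return alerts

# Hypothetical counts of patients given high appointment priority.
baseline = favorable_rates([("under_65", True)] * 6 + [("under_65", False)] * 4
                           + [("65_plus", True)] * 6 + [("65_plus", False)] * 4)
current = favorable_rates([("under_65", True)] * 6 + [("under_65", False)] * 4
                          + [("65_plus", True)] * 3 + [("65_plus", False)] * 7)
print(drift_alerts(baseline, current))  # [('65_plus', 0.6, 0.3)]
```

An alert like this does not explain why a rate moved; it tells the people responsible for the system where to start investigating.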

How you respond when things go wrong reveals your real commitment to ethical AI principles.

The Future of AI Ethics: Emerging Challenges

As AI capabilities expand, new ethical challenges emerge that we’re only beginning to grapple with. While I won’t predict exactly how these will unfold, I can identify areas requiring continued attention and development of our ethical frameworks for AI.

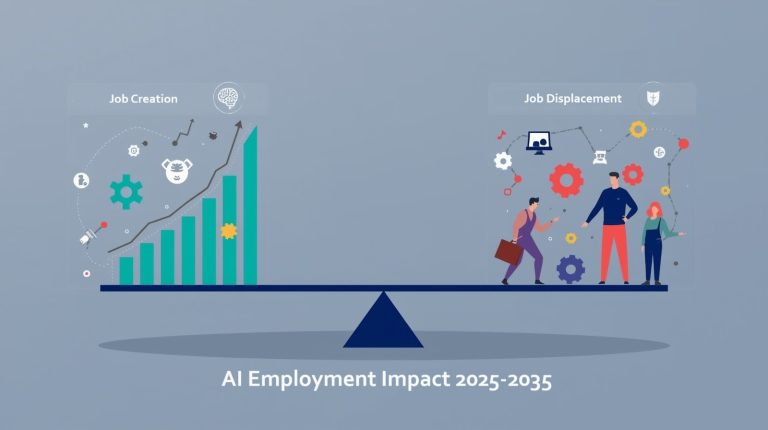

Autonomous systems and agency: As AI systems become more autonomous—self-driving vehicles, autonomous weapons, robotic caregivers—questions about agency and responsibility become more complex. If an autonomous vehicle causes an accident, who is morally and legally responsible? How do we maintain meaningful human control over systems that operate faster than humans can monitor?

Artificial general intelligence considerations: While we haven’t achieved human-level general AI, considering the ethical implications now helps us prepare. What rights, if any, might advanced AI systems deserve? How do we ensure that increasingly capable AI remains aligned with human values? What governance structures are needed for technology that might be transformative?

Global and cultural perspectives: Western philosophical frameworks and Silicon Valley values have dominated AI ethics. As AI becomes truly global, we need to integrate diverse cultural perspectives on fairness, privacy, community, and human flourishing. What seems ethical in one cultural context might not in another. How do we create AI that respects this diversity?

Environmental and sustainability considerations: Training large AI models requires enormous computational resources and energy. The environmental footprint of AI is an ethical consideration we’re only beginning to take seriously. How do we balance AI’s benefits with its environmental costs?

Labor and economic impacts: AI automation affects employment, skills requirements, and economic inequality. These aren’t just economic issues—they’re deeply ethical questions about human dignity, purpose, and flourishing. What responsibilities do AI developers and deployers have to workers whose jobs are displaced?

Taking Your Next Steps in AI Ethics

Understanding the basics of AI ethics is just the beginning. The principles and frameworks I’ve outlined here require active engagement and continual learning. Here’s how you can continue developing your ethical AI practice.

Keep learning. AI capabilities and their implications evolve rapidly. Stay current by following reputable sources on AI ethics, attending webinars or conferences, reading case studies of ethical AI successes and failures, and engaging with diverse perspectives on technology ethics.

Practice ethical reasoning. When you encounter AI systems in your daily life or work, pause to think through the ethical dimensions. Ask yourself: Is the outcome fair? Is this process transparent? Who benefits? Who might be harmed? What alternatives exist? Regular practice develops your ethical intuition and analytical skills.

Speak up. If you notice ethical problems with AI systems you use or develop, say something. Ethics thrives when people feel empowered to raise concerns. Your voice matters, whether you’re a developer who can change a system, a user who can report problems, or a citizen who can advocate for better standards.

Engage with others. AI ethics isn’t something you figure out alone. Join communities of practice, participate in discussions, seek out mentors, and share what you learn. Collective wisdom and diverse perspectives lead to better ethical outcomes than any individual can achieve alone.

Remember the humans. Behind every dataset, every algorithm, and every automated decision are real people with lives, hopes, and vulnerabilities. Keep those humans at the center of your thinking. Technology should serve human flourishing, not the other way around.

The field of AI ethics can sometimes feel abstract or overwhelming, but it ultimately comes down to something simple: treating people with dignity and respect, even when that respect must be mediated through technological systems. You don’t need to be a philosopher or a technical expert to contribute to more ethical AI. You just need to care about how technology affects people and be willing to do the work of thinking through those implications carefully.

As AI becomes more prevalent in our lives, the ethical choices we make, collectively and individually, will shape the kind of world we live in. I hope this introduction gives you a foundation to engage with these crucial questions thoughtfully and to advocate for AI that reflects our best values rather than our worst impulses. The future of AI ethics isn’t predetermined. It’s something we’re creating right now, with every choice we make about how to develop, deploy, and use these powerful technologies.

About the Author

Nadia Chen is an expert in AI ethics and digital safety with over a decade of experience helping organizations and individuals navigate the confusing intersection of technology and human values. She specializes in making ethical frameworks accessible to non-technical audiences and developing practical approaches to responsible AI development. Nadia has consulted for nonprofits, healthcare providers, educational institutions, and technology companies, always with a focus on protecting human dignity and rights in the age of artificial intelligence. Through her writing and workshops, she empowers people to use AI thoughtfully and to advocate for technology that serves humanity’s best interests.