What is Artificial Intelligence? A Comprehensive Beginner’s Guide

What is Artificial Intelligence? It’s the question on everyone’s mind as we navigate a world increasingly shaped by smart technologies. If you’ve ever wondered how your phone recognizes your face, how streaming services seem to know exactly what you want to watch next, or how virtual assistants understand your voice commands, you’re already experiencing artificial intelligence in action. But beyond these everyday encounters, AI represents something much more profound: our attempt to create machines that can think, learn, and solve problems in ways that mirror human intelligence.

I’m Nadia Chen, and throughout my work in AI ethics and digital safety, I’ve seen firsthand how transformative—and sometimes concerning—these technologies can be. I want to teach you about AI and how to use it safely. Whether you’re a student, professional, parent, or simply someone curious about the technology shaping our future, this guide will walk you through everything you need to know about artificial intelligence, from its basic definition to its real-world applications and ethical implications.

The truth is, AI isn’t some distant science fiction concept anymore. It’s here, it’s growing, and understanding it has become essential for anyone who wants to navigate the modern world confidently. But here’s the good news: you don’t need a computer science degree to grasp the fundamentals. Let’s demystify AI together and explore how you can use these powerful tools safely and effectively.

Understanding Artificial Intelligence: The Simple Definition

At its core, artificial intelligence refers to computer systems designed to perform tasks that typically require human intelligence. These tasks include learning from experience, recognizing patterns, understanding language, making decisions, and solving complex problems. Think of AI as teaching machines to “think” in ways that resemble human cognitive processes, though it’s important to understand that machines don’t actually think or feel the way humans do.

When we talk about machine learning, we’re describing one of the primary methods through which AI systems acquire their capabilities. Instead of being explicitly programmed with rules for every possible scenario, machine learning algorithms analyze large amounts of data, identify patterns, and improve their performance over time without a human having to specify each decision. It’s similar to how you learned to recognize a cat: you didn’t memorize a rulebook; you saw many examples until you could identify cats automatically.

The distinction between traditional computer programming and AI is crucial. Traditional programs follow strict, predetermined instructions: “If this happens, do that.” AI systems, particularly those using machine learning, develop their own strategies based on data and experience. A traditional program might be told, “If the email contains these specific words, mark it as spam.” An AI system learns what spam looks like by analyzing millions of emails, developing its own understanding of spam characteristics that even its creators might not have explicitly programmed.

This learning capability makes AI incredibly powerful for handling nuanced, complex tasks where writing explicit rules would be impractical or impossible. However, it also introduces questions about transparency and accountability—issues we’ll explore more deeply later in this guide.
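The spam example above can be sketched in a few lines of Python. This is only an illustration: the keyword list, the four training emails, and the function names are made up, and real spam filters learn from far more data with more sophisticated models.

```python
from collections import Counter

# Traditional programming: a human writes the rule by hand.
BLOCKED_WORDS = {"lottery", "winner"}  # hypothetical keyword list

def rule_based_is_spam(email: str) -> bool:
    return any(word in BLOCKED_WORDS for word in email.lower().split())

# Machine learning: the program counts which words appear in labeled examples.
def train(examples: list[tuple[str, bool]]) -> tuple[Counter, Counter]:
    spam_words, ham_words = Counter(), Counter()
    for text, is_spam in examples:
        target = spam_words if is_spam else ham_words
        target.update(text.lower().split())
    return spam_words, ham_words

def learned_is_spam(email: str, spam_words: Counter, ham_words: Counter) -> bool:
    # Score each word by how much more often it appeared in spam than in normal mail.
    score = sum(spam_words[w] - ham_words[w] for w in email.lower().split())
    return score > 0

# Tiny made-up training set (real systems learn from millions of emails).
training_emails = [
    ("claim your free prize now", True),
    ("free money click now", True),
    ("meeting notes for monday", False),
    ("lunch on monday with the team", False),
]
spam_words, ham_words = train(training_emails)

new_email = "click for free prize"
print(rule_based_is_spam(new_email))                      # the hand-written rule misses it
print(learned_is_spam(new_email, spam_words, ham_words))  # the learned word counts flag it
```

Notice that nobody told the second approach that "free" or "prize" are suspicious. It picked that up from the labeled examples, which is also why its behavior depends entirely on what those examples contain.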

The Evolution of AI: A Brief Historical Perspective

Understanding AI history helps contextualize where we are today and where we might be heading. The concept of artificial intelligence isn’t as new as many people think. The seeds were planted in 1950 when British mathematician Alan Turing published his groundbreaking paper asking, “Can machines think?” He proposed what became known as the Turing Test: if a machine could engage in conversation indistinguishably from a human, could we consider it intelligent?

The term “artificial intelligence” was coined by John McCarthy in the 1955 proposal for the Dartmouth workshop, which took place in the summer of 1956 and brought together pioneering researchers who optimistically believed they could create thinking machines within a generation. Those early years, from the 1950s through the 1970s, were marked by tremendous enthusiasm and some notable achievements, including early chatbots and problem-solving programs. However, they were also characterized by overpromising and underdelivering, leading to periods called “AI winters” when funding and interest dried up due to unmet expectations.

The 1980s and 1990s saw AI finding practical applications in expert systems—programs that captured human expertise in specific domains like medical diagnosis or financial planning. But the real transformation began in the 2010s with the convergence of three critical factors: vastly more powerful computing hardware, the availability of enormous datasets through the internet, and breakthrough algorithms in deep learning—a sophisticated form of machine learning inspired by the structure of the human brain.

This convergence enabled the AI revolution we’re experiencing today. Systems that once struggled with simple tasks like recognizing handwritten digits can now generate photorealistic images, engage in nuanced conversations, diagnose diseases from medical scans, and even create original music and art. Although the AI systems of 2025 have advanced significantly from just a decade ago, we are still in the early stages of comprehending their full potential and implications.

How Artificial Intelligence Actually Works

To understand how AI works, it helps to break down the key components and processes that power these systems. While the mathematics can get complex, the fundamental concepts are surprisingly accessible.

Data: The Foundation of AI

Every AI system starts with data. This could be text, images, numbers, audio, video, or any other information that can be digitized. The quality and quantity of this data directly impact how well the AI performs. An AI trained to recognize medical conditions needs thousands or millions of medical images; an AI that writes text needs vast amounts of written material to learn language patterns.

This dependency on data introduces important considerations about privacy and data security. When you use AI tools, understanding where your data goes and how it’s used becomes crucial. Reputable AI services should clearly explain their data practices, and you should always be cautious about sharing sensitive personal information with AI systems.

Algorithms: The Learning Process

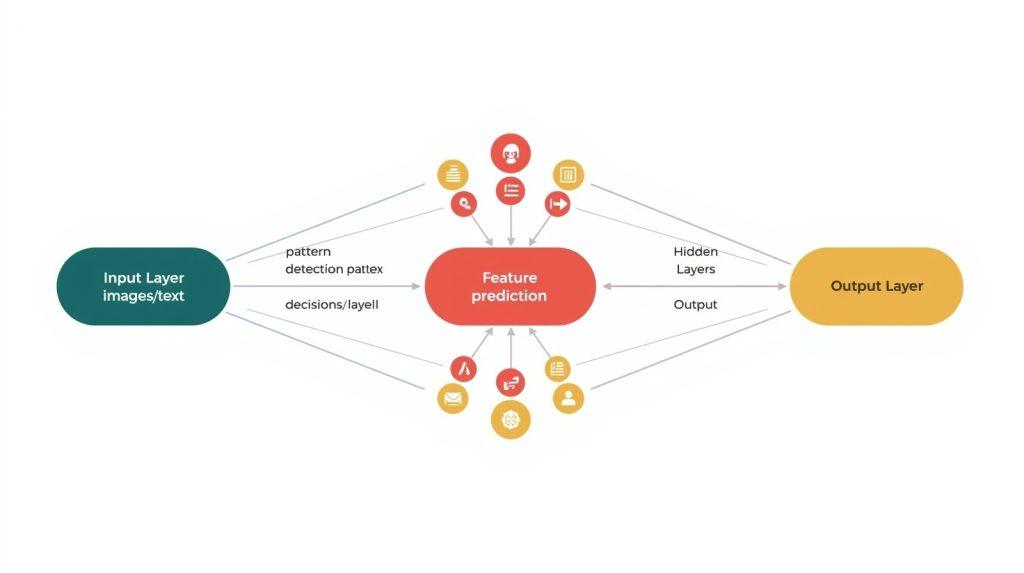

Algorithms are the mathematical procedures that process data and enable learning. In neural networks, the architecture behind much of modern AI, the system consists of layers of interconnected nodes, somewhat analogous to neurons in a brain. Information flows through these layers, with each layer detecting increasingly complex patterns.

For example, when an AI learns to recognize faces, the first layers might detect simple edges and colors. Middle layers identify facial features like eyes, noses, and mouths. Final layers combine these features to recognize specific individuals. The remarkable aspect is that the AI discovers these patterns on its own through exposure to data, rather than being explicitly programmed with rules about what faces look like.
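To show what "information flowing through layers" means mechanically, here is a minimal sketch of a two-layer network in plain Python. The weights are hypothetical, hand-picked numbers chosen for illustration; in a real network they would be learned from data, and there would be millions of them.

```python
import math

def sigmoid(x: float) -> float:
    # Squash any number into the range 0..1.
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    # Each node takes a weighted sum of every input, adds a bias, and squashes the result.
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

# Hypothetical weights for illustration only.
hidden_weights = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]  # 2 hidden nodes, 3 inputs each
hidden_biases = [0.0, 0.1]
output_weights = [[1.2, -0.7]]                          # 1 output node, 2 inputs
output_biases = [0.05]

pixels = [0.9, 0.1, 0.4]                                # stand-in for three input values
hidden = layer(pixels, hidden_weights, hidden_biases)   # first layer: simple patterns
output = layer(hidden, output_weights, output_biases)   # second layer: combines them
print(len(hidden), len(output))
```

The output of one layer becomes the input of the next, which is how later layers can respond to combinations of the simpler patterns detected earlier.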

The learning process typically involves feeding the AI many examples along with the correct answers (this is called supervised learning), allowing the system to adjust its internal parameters until it reliably produces accurate results. Other learning approaches include unsupervised learning, where the AI finds patterns without being given correct answers, and reinforcement learning, where the AI learns through trial and error, receiving rewards for desirable behaviors.
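The supervised learning loop described above, where a system adjusts its internal parameters until its answers match the correct ones, can be sketched with a model that has just one parameter. The data, the learning rate, and the number of passes are arbitrary choices made for this illustration.

```python
# Labeled examples: (input, correct answer). The hidden pattern is answer = 2 * input.
examples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]

weight = 0.0          # the model's single internal parameter, starting from a guess
learning_rate = 0.01  # how large each adjustment is (arbitrary for this sketch)

for epoch in range(200):
    for x, correct in examples:
        prediction = weight * x
        error = prediction - correct
        # Nudge the weight in the direction that shrinks the squared error.
        weight -= learning_rate * error * x

print(round(weight, 3))  # ends up very close to 2.0
```

The program is never told that the rule is "multiply by two". It arrives there by repeatedly comparing its predictions with the correct answers, which is the same idea that larger systems apply to millions of parameters at once.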

Training and Improvement

Training an AI model can take days, weeks, or even months, consuming enormous computational resources. During training, the system processes data repeatedly, gradually adjusting its internal parameters to reduce errors. This is why the most powerful AI systems are typically created by large organizations with substantial resources, though smaller, specialized AI tools are increasingly accessible to everyone.

Once trained, the AI model can be deployed to make predictions or generate outputs on new data it hasn’t seen before. The quality of these outputs depends on how well the training data represented the real-world scenarios the AI will encounter. This is why bias in AI is such a critical concern: if training data contains biases, the AI will learn and potentially amplify those biases in its decisions.
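A small sketch can show why the match between training data and real-world data matters. The fruit weights below are invented, and the "model" is a deliberately simple nearest-neighbor lookup rather than anything a production system would use.

```python
def nearest_neighbor(train, value):
    # Predict the label of the training example whose value is closest.
    return min(train, key=lambda example: abs(example[0] - value))[1]

def accuracy(train, test):
    correct = sum(nearest_neighbor(train, value) == label for value, label in test)
    return correct / len(test)

# Made-up training data: weight in grams and the correct label.
training = [(150, "apple"), (180, "apple"), (200, "apple"),
            (1000, "melon"), (1200, "melon"), (1500, "melon")]

# New data that resembles the training data.
familiar = [(170, "apple"), (190, "apple"), (1100, "melon"), (1400, "melon")]
# New data containing small melons, which never appeared in training.
unfamiliar = [(160, "apple"), (350, "melon"), (400, "melon"), (1300, "melon")]

print(accuracy(training, familiar))    # 1.0
print(accuracy(training, unfamiliar))  # 0.5
```

The model is not broken in the second case. It simply never saw a small melon, so it treats anything light as an apple. Gaps and skews in training data affect real systems in the same way.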

Types of Artificial Intelligence: From Narrow to General

Not all AI is created equal. Understanding the different types of AI helps clarify what current systems can actually do versus what remains in the realm of future possibilities.

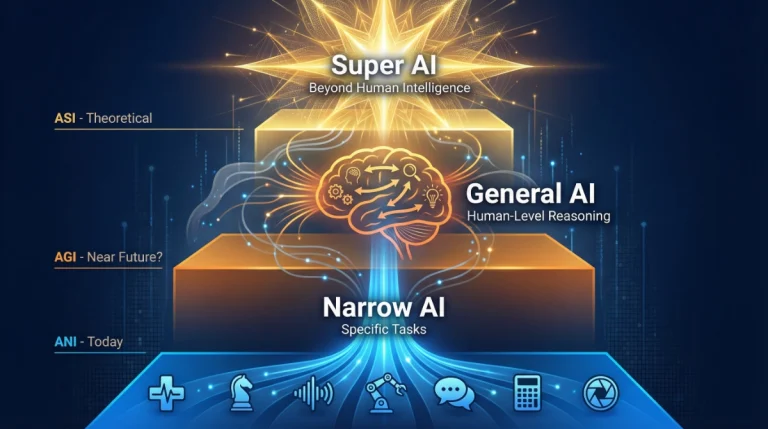

Narrow AI (Weak AI): Today’s Reality

Narrow AI, also called weak AI, refers to systems designed to perform specific tasks. This encompasses virtually all AI that exists today. Your smartphone’s voice assistant is narrow AI—excellent at understanding speech and retrieving information, but incapable of driving a car or diagnosing diseases. Similarly, AI that plays chess at superhuman levels can’t suddenly decide to write poetry or manage your schedule.

These systems excel within their specific domains, often surpassing human performance, but they lack the flexibility and general understanding that humans possess. Narrow AI includes:

- Image recognition systems that identify objects, faces, or medical conditions in photos

- Natural language processing tools that understand and generate text

- Recommendation algorithms that suggest products, movies, or content

- Autonomous systems like self-driving vehicle components

- Predictive analytics for forecasting weather, financial trends, or customer behavior

The narrow AI we interact with daily is already remarkably capable, but it’s important to recognize its limitations. These systems don’t truly “understand” content the way humans do; they recognize patterns and statistical relationships in data. This distinction matters when considering appropriate applications and potential risks.

General AI (Strong AI): The Future Goal

Artificial General Intelligence (AGI), or strong AI, represents the theoretical future where machines possess human-like cognitive abilities across any intellectual task. An AGI system could learn new skills, transfer knowledge between domains, understand context deeply, and adapt to entirely novel situations—all things humans do naturally but current AI cannot.

AGI remains firmly in the research phase. Despite impressive advances, we haven’t achieved anything close to true general intelligence in machines. The challenges are immense: consciousness, common sense reasoning, genuine understanding, creativity, and emotional intelligence all remain elusive for AI systems.

Many AI researchers expect AGI to be decades away, though predictions vary widely. Some think we might achieve it between 2040 and 2050; others believe it may take a century or more, or may even be impossible with current approaches.

Superintelligence: Speculation and Concern

Beyond AGI lies the concept of artificial superintelligence—hypothetical AI that surpasses human intelligence across all domains. This prospect raises profound questions about control, safety, and humanity’s future. While superintelligence remains purely speculative, it drives important conversations about AI safety research and the need for ethical frameworks before such systems could exist.

For now, your focus should be on understanding and safely using narrow AI—the technology that’s actually available and impacting your life today.

Real-World Applications: AI in Daily Life

AI applications have woven themselves into the fabric of modern life, often working invisibly in the background. Recognizing these applications helps you appreciate AI’s current capabilities and make informed decisions about using these technologies.

Personal Technology

Your smartphone is an AI powerhouse. Voice assistants use natural language processing to understand your questions and respond appropriately. Facial recognition uses computer vision to unlock your device. Your photo app automatically organizes pictures by recognizing faces, objects, and scenes. Predictive text learns your writing style to suggest words as you type.

These features exemplify AI’s ability to enhance convenience, but they also raise privacy considerations. Each of these systems processes personal data about you—your voice patterns, facial features, photos, and writing habits. Understanding privacy settings and data permissions becomes crucial in this context.
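Predictive text is a good feature to look at more closely, because a toy version fits in a few lines. The sketch below assumes a made-up typing history and simply counts which word tends to follow which; real keyboards use much more capable language models.

```python
from collections import Counter, defaultdict

def build_model(text: str) -> dict[str, Counter]:
    # For every word, count which words followed it in the sample text.
    words = text.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        followers[current][following] += 1
    return followers

def suggest(model: dict[str, Counter], word: str, count: int = 2) -> list[str]:
    # Offer the words that most often came next after `word`.
    return [w for w, _ in model.get(word.lower(), Counter()).most_common(count)]

# Hypothetical typing history.
history = "see you soon . see you tomorrow . thank you so much . see you soon"
model = build_model(history)
print(suggest(model, "you"))
```

Even this toy model only works because it has stored what you typed, which is exactly the kind of personal data the privacy settings on your device control.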

Healthcare and Medicine

AI is revolutionizing healthcare in ways that directly benefit patients. Medical AI systems analyze medical images like X-rays, MRIs, and CT scans to detect diseases, sometimes identifying conditions human radiologists might miss. AI helps predict patient outcomes, recommend treatments based on vast medical literature, and even discover new drug candidates by analyzing molecular structures.

Wearable devices use AI to monitor health metrics continuously, alerting users and doctors to potential problems. During the COVID-19 pandemic, AI tools were used to help track disease spread, support vaccine research, and manage hospital resources.

However, medical AI isn’t infallible. It works best as a tool to support healthcare professionals rather than replace them. Human oversight remains essential for ensuring accurate diagnoses and appropriate care.

Entertainment and Media

Streaming services like Netflix and Spotify use AI recommendation systems to suggest content based on your viewing and listening history. These algorithms analyze patterns across millions of users to predict what you might enjoy. While convenient, this can also create “filter bubbles” where you’re primarily exposed to content similar to what you’ve already consumed.
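As a rough sketch of how "people like you also enjoyed" can work, the example below scores unseen titles by how similar their fans are to you. The viewers, titles, and ratings are invented, and this is a simplified stand-in, not how Netflix or Spotify actually implement their systems.

```python
import math

# Hypothetical ratings (1-5) from four viewers.
ratings = {
    "you":   {"Space Saga": 5, "Robot Wars": 4, "Baking Duel": 1},
    "alex":  {"Space Saga": 5, "Robot Wars": 5, "Star Colony": 5, "Baking Duel": 1},
    "blake": {"Baking Duel": 5, "Cake Kings": 5, "Space Saga": 1},
    "casey": {"Space Saga": 4, "Robot Wars": 4, "Star Colony": 4},
}

def similarity(a: dict[str, int], b: dict[str, int]) -> float:
    # Cosine similarity over the titles both people rated.
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[t] * b[t] for t in shared)
    norm_a = math.sqrt(sum(a[t] ** 2 for t in shared))
    norm_b = math.sqrt(sum(b[t] ** 2 for t in shared))
    return dot / (norm_a * norm_b)

def recommend(user: str) -> str:
    # Weight every unseen title by how similar its fans are to this user.
    scores: dict[str, float] = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        weight = similarity(ratings[user], their_ratings)
        for title, rating in their_ratings.items():
            if title not in ratings[user]:
                scores[title] = scores.get(title, 0.0) + weight * rating
    return max(scores, key=scores.get)

print(recommend("you"))  # Star Colony
```

Because the suggestion comes from viewers whose tastes already resemble yours, the same mechanism that makes recommendations feel accurate also tends to keep them close to what you have watched before.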

AI also powers content creation itself. Generative AI can create music, generate realistic images, write stories, and even produce video content. News organizations use AI to write simple news reports about sports scores or financial data. Video games employ AI to create intelligent non-player characters and dynamic storylines.

Business and Productivity

In the workplace, AI automates routine tasks, analyzes business data for insights, manages customer service through chatbots, and assists with everything from scheduling to decision-making. AI writing assistants help professionals draft emails, reports, and presentations. Translation tools break down language barriers. Meeting transcription services automatically record and summarize discussions.

These productivity enhancements can save enormous time, but they also require critical evaluation. AI-generated content should be reviewed for accuracy, bias, and appropriateness before being used in professional contexts.

Transportation

Autonomous vehicle technology relies heavily on AI to perceive the environment, predict other vehicles’ behavior, and make split-second driving decisions. While fully self-driving cars remain uncommon, AI already assists with features like adaptive cruise control, lane-keeping, automatic emergency braking, and parking assistance.

Navigation apps use AI to predict traffic patterns, suggest optimal routes, and estimate arrival times with remarkable accuracy. Ride-sharing platforms optimize driver-rider matching and pricing through sophisticated algorithms.

The Benefits and Limitations of AI

Understanding both what AI can accomplish and where it falls short helps you use these technologies appropriately and set realistic expectations.

Key Benefits of AI

Efficiency and speed stand as AI’s most obvious advantages. Tasks that would take humans hours, days, or even years can be completed in seconds. Analyzing millions of data points, processing thousands of images, or generating comprehensive reports—AI excels at scale and speed.

Consistency represents another significant benefit. Unlike humans, who get tired, get distracted, or have bad days, AI systems perform the same task in the same way whether it’s the first time or the millionth. This makes AI valuable for quality control, monitoring, and other tasks requiring unwavering attention.

AI extends human capabilities into areas that were previously out of reach. It can detect patterns in complex datasets that human analysts would be unlikely to spot, and it can process information across more dimensions than human cognition can manage simultaneously. Used this way, AI amplifies human intelligence rather than replacing it.

Accessibility improves as AI makes sophisticated capabilities available to more people. Translation tools enable communication across language barriers. Text-to-speech and speech-to-text help people with disabilities. Educational AI provides personalized tutoring to students who might not be able to afford private instruction.

Important Limitations

Despite these benefits, AI has significant limitations that users must understand. AI lacks true understanding: it recognizes patterns without genuine comprehension. An AI might generate medically accurate-sounding text about a disease while having no actual concept of health, illness, or human biology.

Context and common sense remain challenging. AI systems often struggle with situations slightly outside their training data or scenarios requiring the common-sense reasoning humans use effortlessly. This is why you’ll occasionally see AI make bizarre mistakes that no human would make—suggesting glue on pizza or providing dangerous advice because it detected a statistical pattern without understanding real-world implications.

Bias and fairness issues pervade AI systems. Because AI learns from human-generated data, it inherits the human biases present in that data. Some facial recognition systems have performed worse on darker skin tones, in part because their training datasets were composed predominantly of lighter-skinned faces. Hiring algorithms may discriminate based on gender or race if trained on historical data reflecting discriminatory practices. Language models may generate stereotypical or offensive content reflecting problematic patterns in their training data.

Hallucinations and errors occur when AI generates plausible-sounding but incorrect information. This is particularly common with generative AI systems that create text, images, or other content. An AI might confidently cite nonexistent research papers, invent false facts, or generate misleading images—all while appearing authoritative.

Lack of accountability creates challenges. When AI makes a consequential decision—rejecting a loan application, suggesting a medical diagnosis, or filtering job candidates—determining responsibility becomes complex. The developers? The organization deploying it? The AI itself? This ambiguity complicates efforts to address harms.

Ethical Considerations and Responsible AI Use

As an advocate for AI ethics and digital safety, I believe understanding the ethical dimensions of AI is just as important as understanding the technology itself. These considerations affect everyone who uses AI, creates with it, or is impacted by its decisions.

Privacy and Data Protection

Every time you use an AI service, you’re potentially sharing data. Free AI tools often use your inputs to improve their systems, meaning your questions, uploaded documents, or generated images might become part of their training data. This has serious implications for privacy and confidentiality.

Best practices for protecting your privacy:

- Read privacy policies before using AI services, particularly the sections about data usage and retention

- Never input sensitive information like passwords, financial details, health records, or proprietary business information into AI systems unless you fully trust and understand their security measures

- Use privacy-focused alternatives when available—some AI services explicitly commit not to train on user data

- Consider anonymizing information before inputting it into AI systems

- Disable data sharing options in settings when possible

- Use separate accounts for personal versus professional AI use

- Regularly review permissions you’ve granted to AI applications

Understanding that convenience often comes at the cost of privacy helps you make informed decisions about which AI tools to use and how.
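If you are comfortable with a little scripting, the anonymizing tip above can be partly automated. The sketch below is a minimal illustration with three simplified patterns of my own choosing; it will miss many real-world formats (names, addresses, international phone numbers), so treat it as a safety net and not a guarantee.

```python
import re

# Simplified patterns for illustration only; they will miss many real formats.
PATTERNS = {
    "[EMAIL]": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "[PHONE]": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "[CARD]": re.compile(r"\b(?:\d{4}[-\s]?){3}\d{4}\b"),
}

def redact(text: str) -> str:
    # Replace anything that looks like an email, phone number, or card number.
    for placeholder, pattern in PATTERNS.items():
        text = pattern.sub(placeholder, text)
    return text

draft = "Contact jane.doe@example.com or 555-123-4567 about card 4111 1111 1111 1111."
print(redact(draft))  # Contact [EMAIL] or [PHONE] about card [CARD].
```

Running your text through a step like this before pasting it into an AI tool removes the most obvious identifiers, but you should still read the result yourself before submitting it.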

Bias, Fairness, and Discrimination

AI systems can perpetuate and amplify societal biases in ways that harm individuals and communities. Recognizing this isn’t about rejecting AI—it’s about using it more thoughtfully.

How to approach AI with awareness of bias:

- Question AI decisions, especially in high-stakes situations involving employment, credit, housing, or justice

- Seek diverse perspectives rather than relying solely on AI recommendations

- Test AI systems when possible to see if they perform differently across demographic groups

- Advocate for transparency from organizations using AI to make decisions about you

- Support diverse AI development teams that bring varied perspectives to technology creation

- Be skeptical of claims that AI is “objective” or “neutral”—all systems reflect their creators’ choices and training data

When you encounter AI that seems biased or produces discriminatory results, reporting these issues to developers helps improve systems for everyone.
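For readers who have access to a system's results, testing for different performance across groups can be as simple as comparing accuracy per group. The audit log below is entirely hypothetical, and accuracy is only one of several fairness measures that a real audit would consider.

```python
from collections import defaultdict

# Hypothetical audit log: (group, what the system predicted, what was actually true).
results = [
    ("group_a", "match", "match"), ("group_a", "no match", "no match"),
    ("group_a", "match", "match"), ("group_a", "match", "match"),
    ("group_b", "match", "no match"), ("group_b", "match", "match"),
    ("group_b", "no match", "match"), ("group_b", "no match", "no match"),
]

def accuracy_by_group(records):
    correct, total = defaultdict(int), defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += predicted == actual  # True counts as 1, False as 0
    return {group: correct[group] / total[group] for group in total}

report = accuracy_by_group(results)
print(report)  # {'group_a': 1.0, 'group_b': 0.5}
```

An overall accuracy of 75% would hide the fact that the system is right every time for one group and only half the time for the other, which is why breaking results down by group matters.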

Misinformation and Deepfakes

Generative AI has made creating convincing fake content easier than ever. Deepfakes—realistic but fabricated audio or video—can show people saying or doing things they never did. AI-generated text can spread misinformation at scale. Synthetic images can document events that never occurred.

Protecting yourself and others from AI-generated misinformation:

- Verify sources before believing or sharing content, especially on social media

- Look for multiple confirmations of important claims from reputable sources

- Be skeptical of content that seems designed to provoke strong emotional reactions

- Check for telltale signs of AI generation: unnatural expressions, inconsistent lighting, strange artifacts, or implausible details

- Use verification tools and reverse image search when something seems suspicious

- Educate others about the existence and capabilities of generative AI

- Think before sharing—spreading misinformation, even unintentionally, has consequences

In an era where “seeing is believing” no longer holds true, critical thinking and media literacy become essential skills.

Environmental Impact

Large AI systems require enormous computational resources, consuming significant energy and contributing to carbon emissions. One widely cited 2019 estimate found that training a single large language model with an intensive architecture search could emit roughly as much carbon as five cars over their entire lifetimes. As AI becomes more prevalent, its environmental footprint grows.

Using AI more sustainably:

- Use AI purposefully rather than wastefully—every query consumes resources

- Prefer efficient models when available, particularly for simple tasks

- Support companies that prioritize sustainable AI development

- Consider the environmental cost when deploying AI solutions

- Advocate for green AI practices in your organization or community

Balancing AI’s benefits against its environmental costs represents an ongoing ethical challenge that will shape technology’s role in addressing climate change.

Transparency and Explainability

Many AI systems operate as “black boxes”—their decision-making processes are opaque even to their creators. This lack of transparency makes it difficult to understand why an AI made a particular decision, identify errors, or ensure fairness.

Demanding better AI transparency:

- Ask questions about how AI systems work when they affect your life

- Request explanations for AI-driven decisions, particularly negative ones

- Support legislation requiring AI transparency and explainability

- Choose services that provide clear information about their AI’s capabilities and limitations

- Participate in public discussions about AI governance and regulation

The more people demand transparency, the more pressure exists for developers to create more understandable and accountable systems.

Getting Started with AI: Practical Steps for Beginners

Understanding AI conceptually is valuable, but actually using these tools is how you’ll gain real comfort and competence. Here’s how to begin your AI journey safely and effectively.

Step 1: Start with Familiar Tools

Begin with AI features already built into technology you use daily. Experiment with your phone’s voice assistant, asking it increasingly complex questions to understand its capabilities and limitations. Try your email’s smart compose feature. Use predictive text consciously, noticing when it helps and when it suggests something completely wrong.

This low-stakes experimentation builds familiarity without requiring new accounts, subscriptions, or learning curves. You’ll develop intuition about what AI can and cannot do.

Step 2: Explore Free AI Tools

Numerous free AI services let you experiment with different capabilities:

- ChatGPT and similar conversational AI tools for writing assistance, brainstorming, and learning

- Google Lens or similar image recognition tools for identifying objects, translating text in photos, or finding information about things you photograph

- Grammarly or other writing assistants for improving your writing

- Canva’s AI features for graphic design assistance

- Free AI art generators to create images from text descriptions

When trying new tools, create accounts specifically for experimentation, using an email address that isn’t linked to sensitive personal information.

Step 3: Understand What You’re Inputting

Before entering information into any AI system, ask yourself:

- Is this information sensitive or confidential?

- Would I be comfortable if this became public?

- Does this AI service’s privacy policy allow them to use my inputs for training?

- Could this information identify me or others?

Develop the habit of pausing before clicking “submit” on AI queries. This moment of reflection protects your privacy and helps you use AI more thoughtfully.

Step 4: Verify AI Outputs

Never blindly trust AI-generated content. Every output should be verified:

- Fact-check claims against reliable sources

- Test code before deploying it

- Review writing for accuracy, tone, and appropriateness

- Examine images carefully for artifacts or inconsistencies

- Consult experts when AI provides advice on important matters

Think of AI as a collaborator that provides drafts, suggestions, or starting points—not finished products ready to use without human review.

Step 5: Learn Effective Prompting

How you communicate with AI significantly affects the quality of results. Prompt engineering—crafting effective instructions for AI—is a skill worth developing.

Principles of good prompting:

- Be specific about what you want: “Write a 300-word introduction to quantum physics for middle school students” works better than “Explain quantum physics”

- Provide context that helps the AI understand your needs: “I’m a small business owner creating marketing materials for eco-friendly products”

- Specify format when relevant: “Provide three bullet points” or “Create a numbered step-by-step guide”

- Include examples of the style or content you’re seeking

- Iterate and refine based on results—AI interactions are conversations, not single transactions
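The principles above can be turned into a reusable template. The helper below is a hypothetical convenience function, not part of any AI tool, and the teacher context in the example is invented; it simply assembles the pieces of a prompt in a consistent order.

```python
def build_prompt(task: str, context: str = "", output_format: str = "", example: str = "") -> str:
    # Assemble only the parts that were provided, one per line.
    parts = [
        f"Context: {context}" if context else "",
        f"Task: {task}",
        f"Format: {output_format}" if output_format else "",
        f"Example of the style I want: {example}" if example else "",
    ]
    return "\n".join(part for part in parts if part)

vague = build_prompt("Explain quantum physics")
specific = build_prompt(
    task="Write a 300-word introduction to quantum physics for middle school students",
    context="I'm a science teacher preparing a handout",  # made-up context
    output_format="Three short paragraphs followed by three bullet points",
)
print(specific)
```

Comparing the responses you get from `vague` and `specific` in the same AI tool is a quick way to see how much context and format instructions change the result.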

Step 6: Understand Limitations and Alternatives

AI excels at some tasks and fails at others. Knowing when AI isn’t the right tool is as important as knowing when it is.

When AI might not be appropriate:

- Tasks requiring nuanced human judgment or empathy

- Decisions with significant consequences for people’s lives

- Creative work where the process matters as much as the product

- Situations requiring accountability and liability

- Contexts where privacy and security are paramount

- Tasks requiring verified, up-to-date factual information (an AI model’s training data has a cutoff date, so it may not know about recent events)

For these situations, human expertise, traditional tools, or alternative approaches may serve you better.

Step 7: Stay Informed About Developments

AI evolves rapidly. Capabilities that didn’t exist last year are commonplace today; today’s limitations may be overcome tomorrow. Following AI developments helps you use these tools effectively and advocate for responsible deployment.

Ways to stay informed:

- Follow reputable technology news sources that cover AI thoughtfully

- Join online communities discussing AI tools and best practices

- Take free online courses about AI fundamentals (many universities offer them)

- Experiment regularly with new AI capabilities as they emerge

- Participate in discussions about AI’s societal impact

- Share your experiences with others, particularly concerns about safety or ethics

Building a community of practice around AI helps everyone learn and use these technologies more responsibly.

Common Questions About Artificial Intelligence

Is AI dangerous?

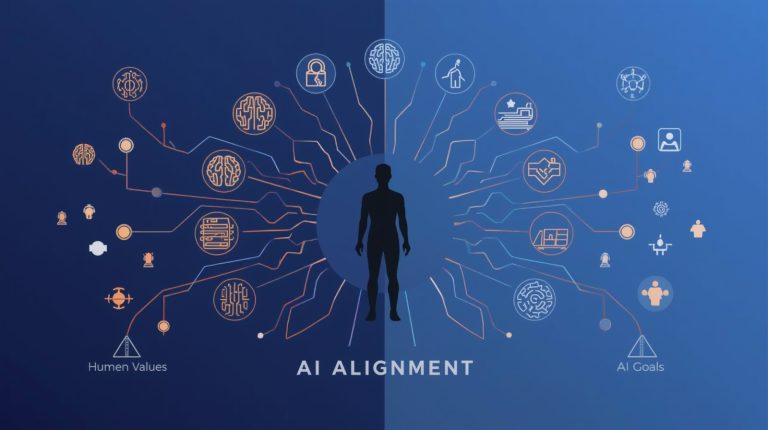

AI itself isn’t inherently dangerous, but like any powerful technology, it can be misused or cause harm through unintended consequences. Current narrow AI poses risks primarily by enabling new forms of fraud, spreading misinformation, perpetuating biases, and violating privacy. The key is using AI thoughtfully with appropriate safeguards. Future advanced AI systems could pose more significant risks, which is why researchers focus on AI safety and alignment: ensuring AI systems do what humans actually want them to do.

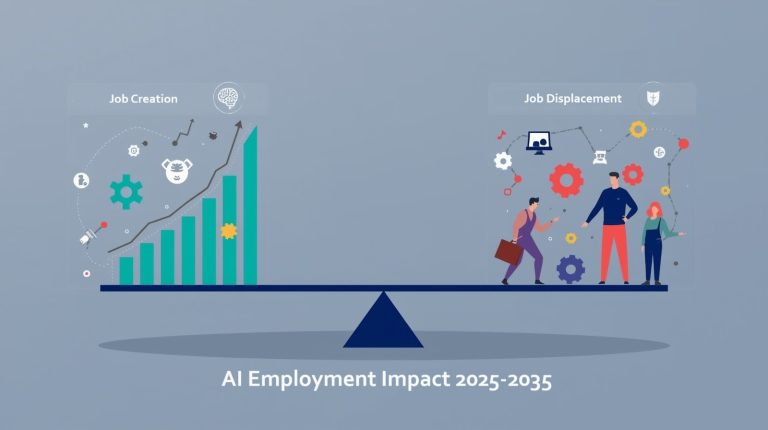

Will AI take my job?

AI will transform many jobs rather than simply eliminate them. Some roles will indeed become obsolete, while others will evolve to work alongside AI tools. History suggests technology creates new jobs even as it eliminates old ones, though transitions can be difficult for affected workers. The most productive approach is learning how to use AI as a tool that enhances your capabilities, making yourself more valuable by combining human strengths (creativity, empathy, complex reasoning, and ethical judgment) with AI’s strengths (speed, scale, and pattern recognition).

How accurate is AI?

AI accuracy varies enormously depending on the task, system quality, and training data. Some AI systems achieve superhuman performance in narrow domains like image recognition or game-playing. Others produce unreliable results, particularly when generating novel content or operating outside their training data. Never assume AI is accurate without verification. Always question AI outputs, especially in high-stakes situations, and use AI as a tool to augment human judgment rather than replace it.

Can AI be creative?

AI can generate novel outputs by combining existing elements in new ways, which can appear creative. AI creates art, writes stories, composes music, and designs products. However, whether this constitutes true creativity remains philosophically debated. AI lacks the intentionality, emotional experience, and genuine understanding that many consider fundamental to creativity. Regardless of definitions, AI is already a powerful tool that augments human creativity, particularly in generating ideas, providing variations, and handling technical execution.

Is my data safe with AI?

Data safety depends on which AI services you use and how you use them. Reputable AI companies implement security measures, but no system is perfectly secure. Free AI services often use your data to improve their models. Enterprise or privacy-focused services may offer stronger guarantees. The safest approach is to assume that any data you input into an AI system might eventually become public and to act accordingly: never share truly sensitive information unless you’ve carefully evaluated the risks and safeguards.

Do I need to learn programming to use AI?

No. While understanding programming helps you use certain AI tools and understand how they work, most modern AI applications are designed for non-technical users. Conversational AI, image generators, writing assistants, and other tools require no coding knowledge. However, developing some basic technical literacy—understanding concepts like algorithms, data, and how systems process information—will help you use AI more effectively and critically.

The Future of AI: What Comes Next

Predicting AI’s future is challenging given the field’s rapid pace, but certain trends seem clear. AI will become increasingly integrated into every aspect of life, from education and healthcare to entertainment and work. Systems will likely become more capable, more accessible, and hopefully more aligned with human values and safety considerations.

Emerging AI trends to watch:

Multimodal AI that processes and generates multiple types of content—text, images, audio, and video—simultaneously, enabling richer interactions and applications. We’re already seeing early versions, but integration will deepen.

Personalized AI that learns your preferences, adapts to your needs, and provides customized experiences across services. This promises tremendous convenience but raises significant privacy questions about how much data we’re comfortable sharing.

AI agents that can complete complex multi-step tasks autonomously, like planning and booking entire vacations, managing your schedule, or coordinating projects. These promise efficiency but require careful consideration of control and accountability.

Improved AI safety and alignment as researchers and organizations invest in ensuring AI systems behave as intended and remain under human control. This includes work on transparency, interpretability, and robust safeguards.

Regulatory frameworks as governments worldwide grapple with governing AI development and deployment. Expect laws addressing privacy, bias, transparency, and accountability for AI systems.

Democratization of AI as tools become more accessible to individuals and smaller organizations, not just tech giants. This could enable innovation but also creates challenges in preventing harmful uses.

The future of AI will be shaped not just by technical capabilities but by societal choices about how we want to use these technologies.

Taking Action: Your AI Journey Starts Now

Understanding artificial intelligence intellectually is valuable, but the real learning begins when you actively engage with these technologies. The gap between knowing about AI and actually using it effectively is where many people hesitate, often due to uncertainty or concern about making mistakes. Let me assure you: experimenting with AI in thoughtful, measured ways is how you’ll develop genuine competence and confidence.

Building Your AI Toolkit

Creating a curated collection of AI tools that serve your specific needs transforms AI from an abstract concept into practical assistance. Consider your daily activities and pain points—where do you spend time on repetitive tasks? Where could you use creative inspiration? What information do you wish you could access or process more easily?

For personal productivity, explore AI writing assistants that help draft emails, summarize long documents, or brainstorm ideas. These tools don’t replace your thinking; they accelerate the drafting process, letting you focus energy on refining and personalizing rather than starting from scratch.

For creative projects, investigate AI image generators, music composition tools, or video editing assistants. These technologies democratize creative capabilities that once required expensive software and years of training. A small business owner can create professional-looking marketing materials; a teacher can generate custom illustrations for lessons; a hobbyist can explore artistic ideas without technical barriers.

For learning and research, use AI to explain complex topics, translate foreign language materials, or generate study materials. AI tutoring systems can provide personalized instruction, though they work best when they supplement traditional education rather than replace it.

Start with one or two tools aligned with your immediate needs rather than trying to master everything simultaneously. Deep familiarity with a few AI applications serves you better than superficial knowledge of many.

Developing Critical AI Literacy

As AI becomes ubiquitous, the ability to evaluate AI-generated content and understand when you’re interacting with AI systems becomes as fundamental as traditional literacy. AI literacy encompasses several interconnected skills that anyone can develop.

Recognition skills involve identifying when AI is being used—not always obvious given how seamlessly AI integrates into applications. Many websites use AI chatbots without clearly labeling them. Social media platforms employ AI algorithms to curate content without explicit disclosure. Developing awareness of AI’s pervasive presence helps you maintain appropriate skepticism about information sources and automated decisions.

Evaluation skills help you assess AI output quality. This includes recognizing hallucinations—plausible-sounding but false information AI systems generate. When an AI provides facts, dates, statistics, or citations, verify them independently. When AI offers advice, consider whether the recommendations make practical sense and align with expert guidance. When AI creates content, examine it for logical consistency, factual accuracy, and potential biases.

Questioning skills involve asking the right questions about AI systems that affect your life. Who created this AI? What data was it trained on? What is it optimizing for? Who benefits from its deployment? What happens to the data I provide? Who is accountable if it makes mistakes? These questions may not always have satisfactory answers, but asking them exerts pressure for greater transparency and accountability.

Adaptation skills help you adjust your behavior appropriately when using AI tools. This includes modifying how you phrase questions to get better results, recognizing when a task isn’t suitable for AI assistance, and combining AI capabilities with human judgment effectively.

These literacy skills aren’t innate—they develop through intentional practice and reflection on your AI interactions.

Protecting Your Digital Safety While Using AI

My work in AI ethics has shown me that many people unwittingly compromise their privacy and security through careless AI usage. Developing strong digital safety habits protects you as AI becomes more prevalent in daily life.

Data minimization represents your first line of defense. Before inputting information into AI systems, ask yourself, “What’s the minimum data I need to share to accomplish this task?” If you’re using an AI writing assistant to draft a business email, do you need to include the client’s full name and company details, or would pseudonyms serve equally well for getting feedback on structure and tone? If you’re having an AI help analyze a spreadsheet, can you remove identifying information first?
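For readers comfortable with a little code, the data minimization idea above can be sketched in Python. This is a minimal illustration, not a complete privacy tool: the function name `minimize`, the placeholder labels, and the two patterns are assumptions made for this example, and simple patterns like these will miss many kinds of identifying information, so always reread the text before pasting it anywhere.

```python
import re

# Illustrative patterns only (assumptions for this sketch); real text
# contains many kinds of identifiers these will not catch.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def minimize(text, names=()):
    """Replace emails, phone-like numbers, and listed names with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    # Names cannot be detected reliably by pattern, so the caller lists them.
    for i, name in enumerate(names, start=1):
        text = re.sub(re.escape(name), f"[PERSON_{i}]", text, flags=re.IGNORECASE)
    return text

draft = "Hi Maria Lopez, please call me at 555-123-4567 or write to maria@example.com."
print(minimize(draft, names=["Maria Lopez"]))
```

The cleaned draft keeps its structure and tone, which is usually all an AI writing assistant needs in order to give useful feedback. You can restore the real details yourself after you get the response.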

Service selection matters enormously for privacy. Not all AI tools treat your data identically. Some explicitly promise not to use your inputs for training their models. Others use your inputs for training by default, so protecting your privacy requires you to find and change the relevant settings. Enterprise or paid versions of AI tools often provide stronger privacy guarantees than free consumer versions.

When evaluating AI services, investigate:

- Where your data is stored and for how long

- Whether your inputs train future versions of the AI

- How the company shares data with third parties

- What happens to your data if you delete your account

- Whether the service encrypts your data in transit and at rest

- The company’s track record regarding data breaches and security

Account security becomes more critical as AI tools proliferate. Use strong, unique passwords for each AI service—password managers make this manageable. Enable two-factor authentication wherever available. Regularly review which services you’ve granted access to your accounts, removing unused authorizations.
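To make "strong, unique passwords" concrete, here is a rough Python sketch of what a password manager does when it generates one. It uses Python's standard `secrets` module, which is designed for security-sensitive randomness. The function name `generate_password`, the default length of 20, and the minimum of 12 are choices made for this illustration, not a standard; in practice, a reputable password manager remains the easier option.

```python
import secrets
import string

# Letters, digits, and punctuation; some sites restrict which symbols they accept.
ALPHABET = string.ascii_letters + string.digits + string.punctuation

def generate_password(length=20):
    """Return a random password drawn from ALPHABET using a secure generator."""
    if length < 12:
        # Minimum chosen for this sketch; longer is generally stronger.
        raise ValueError("use at least 12 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))

# One separate password per (hypothetical) service, never reused.
for service in ["writing-assistant", "image-generator"]:
    print(service, generate_password())
```

Each call produces a different password, so a breach at one service does not expose your login anywhere else.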

Context separation helps contain potential breaches. Consider using different email addresses for different categories of AI tools—one for experimental services, another for productivity tools, and another for anything involving sensitive data. This way, if one account is compromised, the damage doesn’t extend to everything.

Regular audits of your AI tool usage help maintain security hygiene. Quarterly, review which AI services you’re using, which accounts are still active, what permissions you’ve granted, and whether you still need each service. Delete accounts you no longer use rather than letting them accumulate.

Advocating for Responsible AI Development

Individual users possess more power to shape AI’s trajectory than many realize. The collective choices we make about which AI systems to use, which companies to support, and which practices to accept influence how AI develops.

Voting with your usage sends signals to developers about what matters to users. When you choose privacy-respecting AI services over more invasive alternatives, you demonstrate market demand for ethical practices. When you provide feedback about bias, errors, or concerning outputs, you contribute to improving systems. When you refuse to use AI tools that don’t align with your values, you vote against problematic practices.

Participating in public discourse about AI helps ensure diverse perspectives shape policy and norms. This doesn’t require being an expert—your experiences as an AI user provide valuable insights. Share your concerns about AI systems that affect your life. Support legislation that aligns with your values regarding privacy, transparency, and accountability. Engage in community discussions about appropriate AI deployment.

Supporting ethical AI development can take many forms. This might mean choosing to work for or do business with companies demonstrating commitment to responsible AI. It might involve supporting nonprofit organizations working on AI safety and ethics. It might mean educating others in your community about AI’s capabilities, limitations, and risks.

Holding institutions accountable matters as AI increasingly mediates access to opportunities and resources. If you’re denied a loan, job, or service based on an algorithmic decision, you should be able to understand why and challenge unfair outcomes, and in some jurisdictions the law gives you that right. Organizations using AI should provide clear explanations for automated decisions and meaningful appeal processes when AI makes mistakes.

The AI systems we’ll live with in ten or twenty years are being shaped right now by technical choices, business models, regulatory frameworks, and social norms. Your voice matters in these conversations.

Resources for Continued Learning

Deepening your understanding of AI is a journey, not a destination. The field evolves rapidly, making continuous learning essential for anyone wanting to use these technologies effectively and responsibly.

Online Courses and Tutorials

Numerous platforms offer accessible introductions to AI concepts:

- General introductory courses that explain AI fundamentals without requiring programming knowledge

- Specialized courses on specific topics like machine learning ethics, AI for business, or practical AI applications

- Platform-specific tutorials for popular AI tools teaching effective usage

- Video series explaining AI concepts through visualizations and analogies

Many universities provide free audit options for AI courses, allowing you to learn from leading researchers without financial barriers.

Communities and Forums

Connecting with others who are learning about AI provides support, answers questions, and exposes you to diverse perspectives. Online communities focused on AI ethics, responsible AI usage, or specific AI tools offer valuable peer learning. Social media groups dedicated to AI literacy help you stay informed about new developments and best practices.

When participating in AI communities, approach discussions critically. Not all advice is sound, and not all enthusiastic claims about AI capabilities are accurate. Balance community learning with authoritative sources.

Books and Publications

Numerous excellent books make AI accessible to non-technical readers. Look for titles focusing on AI’s societal implications, ethical considerations, and practical applications rather than technical implementation details. Reputable technology magazines and journals often publish thoughtful analyses of AI developments.

Hands-On Experimentation

Ultimately, direct experience teaches more than passive learning. Set aside regular time—even just 30 minutes weekly—to experiment with AI tools. Try different approaches to the same task. Test AI’s limits. Make mistakes in low-stakes environments. Reflect on what works and what doesn’t.

Document your learning journey. Keep notes about which tools serve which purposes, what prompting strategies prove effective, and what limitations you’ve discovered. This personal knowledge base becomes increasingly valuable as you use AI more extensively.

Conclusion: Embracing AI With Eyes Wide Open

What is Artificial Intelligence? By now, you understand it’s far more than a simple technology—it’s a fundamental shift in how we interact with computers, process information, and augment human capabilities. AI represents both tremendous opportunity and significant responsibility.

The artificial intelligence systems available today excel at specific tasks, learn from vast datasets, recognize complex patterns, and generate novel outputs. They assist with everything from creative projects to medical diagnoses, from personal productivity to scientific research. Yet these same systems inherit biases from their training data, make inexplicable errors, generate convincing falsehoods, and raise profound questions about privacy, accountability, and human autonomy.

This duality—AI’s remarkable capabilities alongside its serious limitations and risks—defines the challenge we all face in using these technologies wisely. Neither uncritical enthusiasm nor fearful rejection serves us well. Instead, we need informed, thoughtful engagement that harnesses AI’s benefits while actively mitigating its harms.

As you move forward with AI, remember these key principles:

Maintain healthy skepticism about AI outputs while remaining open to their utility. Verify important information, question decisions, and combine AI assistance with human judgment.

Protect your privacy by being deliberate about what data you share with AI systems. Understand how services use your information and choose tools that align with your values.

Stay curious and keep learning as AI capabilities evolve. What’s impossible today may be commonplace tomorrow; what works well now may be superseded by better approaches.

Advocate for responsible development that prioritizes human well-being, fairness, transparency, and accountability. Your voice influences how AI evolves.

Help others learn by sharing your knowledge, experiences, and concerns. AI literacy shouldn’t be limited to technical experts—everyone affected by these technologies deserves to understand them.

The future of AI isn’t predetermined. It will be shaped by millions of individual choices—which tools we use, which practices we accept, which values we prioritize, and which institutions we hold accountable. By understanding artificial intelligence deeply and engaging with it thoughtfully, you participate in creating that future.

Your AI journey starts with small, deliberate steps: experimenting with tools that serve your needs, developing critical evaluation skills, protecting your privacy, and staying informed about developments. Don’t wait until you feel completely ready—nobody does. The only way to develop genuine AI competence is through hands-on experience guided by the principles we’ve explored.

The technologies we call artificial intelligence represent humanity’s attempt to extend our cognitive capabilities beyond biological limits. Whether this proves beneficial or harmful—whether AI enhances human flourishing or diminishes it—depends largely on how thoughtfully we deploy and govern these systems. That’s not just the responsibility of developers, policymakers, or ethicists. It’s all of ours.

I hope this guide has demystified AI enough that you feel empowered to engage with these technologies confidently while maintaining appropriate caution. You now understand what AI actually is, how it works, where it excels, where it fails, and how to use it responsibly. That knowledge equips you not just to use AI tools effectively but to be an informed participant in conversations about how AI should develop and what role it should play in society.

The artificial intelligence revolution isn’t something happening to you—it’s something you can actively shape through your choices, your advocacy, and your commitment to using these powerful tools wisely. Start small, experiment safely, question critically, and never stop learning.

Welcome to the AI era. You’re ready.

About the Author

Nadia Chen is an expert in AI ethics and digital safety with over a decade of experience helping individuals and organizations use artificial intelligence responsibly. With a background spanning computer science and philosophy, Nadia bridges the technical and human dimensions of AI, making complex technologies accessible to non-technical audiences. She has advised educational institutions, nonprofits, and technology companies on ethical AI deployment and has developed digital safety curricula used by thousands of learners worldwide.

Nadia’s work focuses on empowering people to use AI confidently while understanding its limitations and risks. She believes that AI literacy shouldn’t be confined to technical experts—everyone affected by these technologies deserves to understand how they work and how to use them safely. Through her writing, workshops, and advocacy, Nadia helps build a future where AI enhances human capabilities without compromising privacy, fairness, or autonomy.

When not writing about AI ethics, Nadia enjoys hiking, reading science fiction that explores human-technology relationships, and volunteering with organizations that promote digital literacy in underserved communities. She holds degrees in computer science and ethics from leading universities and continues to research how emerging technologies can be developed and deployed in ways that prioritize human well-being.